What are AI Agents, and How Do They Work?

What is an AI agent? It's a question I hear frequently—both in formal training sessions and casual conversations. Even leading AI researchers and company CEOs struggle to provide a clear definition, as you'll notice if you listen to industry podcasts.

While I explored this topic in a paper I presented last fall, I've recently encountered two insights that offer helpful clarity. Here's what I've learned:

___

Traditional AI systems like ChatGPT primarily respond to questions—they "say" things through text or voice. But AI agents represent a fundamental shift: they can act in the digital world to accomplish tasks autonomously.

As Mustafa Suleyman (CEO of Microsoft AI and DeepMind co-founder) explained in August 2023, we're moving beyond AI that just talks to AI that actually does things.

What Are AI Agents?

Google defines AI agents as autonomous software systems with three core capabilities:

1. Perceive - They gather information from their environment through sensors or data inputs

2. Reason - They analyze what they've perceived and make decisions based on their knowledge

3. Act - They take concrete actions in their environment to achieve specific goals
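To make the three capabilities concrete, here is a minimal sketch of a perceive-reason-act loop in Python. It is a toy under stated assumptions, not how Google or any vendor implements agents: the `CounterEnvironment` and `GoalAgent` names are hypothetical, the "environment" is a single number, and the "reasoning" is a simple comparison where a real agent would call a model.

```python
from dataclasses import dataclass, field


@dataclass
class CounterEnvironment:
    """Toy environment (hypothetical): a single number the agent can nudge."""
    value: int = 0

    def read(self) -> int:
        return self.value

    def apply(self, action: str) -> None:
        if action == "increment":
            self.value += 1
        elif action == "decrement":
            self.value -= 1


@dataclass
class GoalAgent:
    """Toy agent (hypothetical) whose goal is to bring the counter to `target`."""
    target: int
    history: list = field(default_factory=list)

    def perceive(self, env: CounterEnvironment) -> int:
        # 1. Perceive: gather information from the environment.
        return env.read()

    def reason(self, observation: int) -> str:
        # 2. Reason: decide what to do based on the observation and the goal.
        if observation < self.target:
            return "increment"
        if observation > self.target:
            return "decrement"
        return "stop"

    def act(self, env: CounterEnvironment, action: str) -> None:
        # 3. Act: change the environment and remember what was done.
        env.apply(action)
        self.history.append(action)

    def run(self, env: CounterEnvironment, max_steps: int = 100) -> bool:
        """Loop without human input until the goal is met or steps run out."""
        for _ in range(max_steps):
            action = self.reason(self.perceive(env))
            if action == "stop":
                return True
            self.act(env, action)
        # Out of steps: report whether the goal happens to be met anyway.
        return self.reason(self.perceive(env)) == "stop"


env = CounterEnvironment(value=-2)
agent = GoalAgent(target=3)
reached = agent.run(env)
print(reached, env.value, agent.history)
```

The step limit in `run` is a design choice worth noting: even a toy agent that works "without constant human guidance" needs some bound on how long it keeps going.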

In simple terms: An AI agent is a goal-oriented smart program that can work independently, making decisions and taking actions without constant human guidance.

[This came from a Google course I took on AI Agents; the blurry screenshot is below]

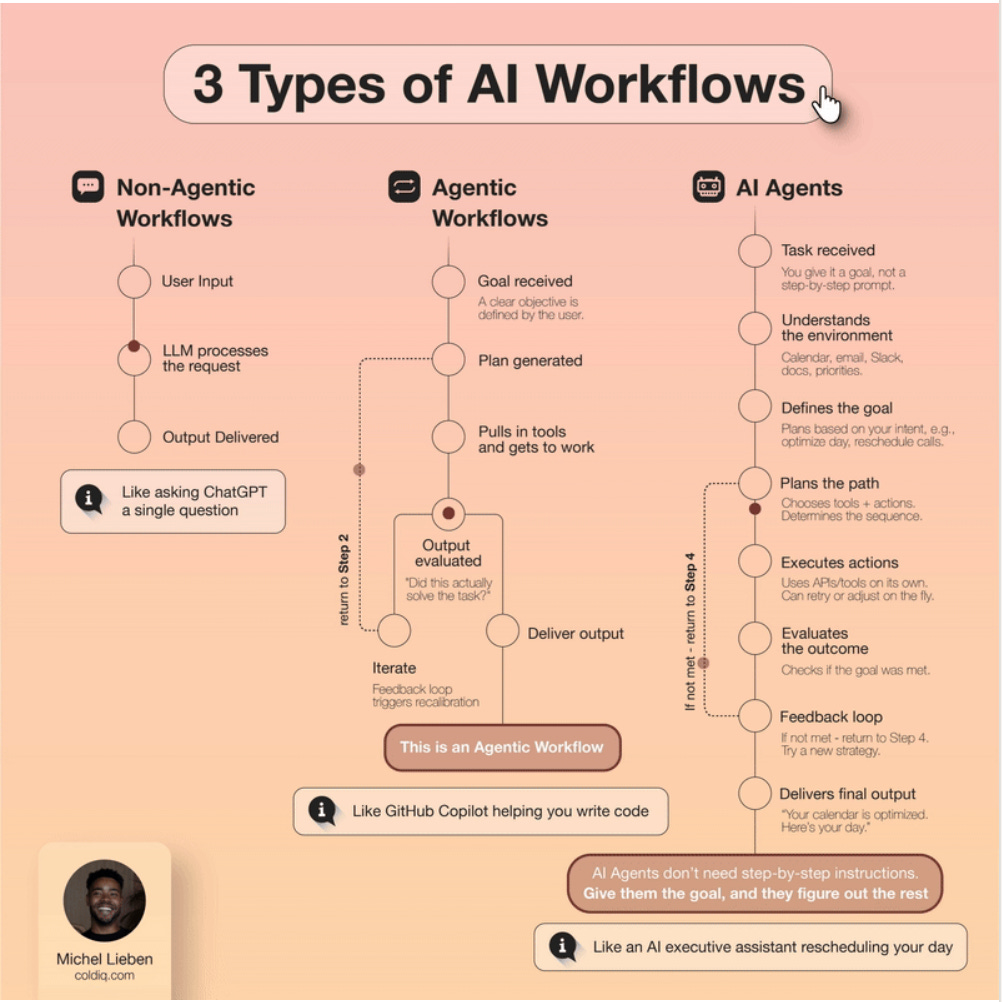

Michael Lieben created this nice flowchart.

How Long Can Agents Work?

For now, most agents outside of coding can operate autonomously for only about 30 minutes. In coding, agents can already work for up to 8 hours, and in narrow tasks (such as playing Pokémon) for 24 hours. These capabilities are available in the new Claude model, but also in others. The length of time that AI agents can reliably operate autonomously doubles every 7 months, so even if we take the minimum of 30 minutes, we get 30 min → 1 hr → 2 → 4 → 8 → 16 → 32 hours. That is six doublings, or about 3.5 years. So, within 3-4 years, we can expect agents to operate autonomously across many domains for at least 32 hours, or roughly 1.3 days.
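The doubling arithmetic is easy to check in a few lines of Python. This is only a sketch of the extrapolation: it assumes the 7-month doubling period and the 30-minute starting point quoted above hold steady, and the function names are mine.

```python
import math

DOUBLING_MONTHS = 7  # doubling period quoted in the text (an assumption going forward)


def projected_minutes(start_minutes: float, months: float) -> float:
    """Autonomous run time after `months`, if it doubles every DOUBLING_MONTHS."""
    return start_minutes * 2 ** (months / DOUBLING_MONTHS)


def months_to_reach(start_minutes: float, target_minutes: float) -> float:
    """Months needed to grow from `start_minutes` to `target_minutes`."""
    return DOUBLING_MONTHS * math.log2(target_minutes / start_minutes)


def doubling_schedule(start_minutes: float, doublings: int) -> list:
    """(months elapsed, run time in hours) for each successive doubling."""
    return [
        (n * DOUBLING_MONTHS, start_minutes * 2 ** n / 60)
        for n in range(doublings + 1)
    ]


for months, hours in doubling_schedule(30, 6):
    print(f"after {months:2d} months: {hours:g} hours")
```

Starting from 30 minutes, six doublings take 42 months (3.5 years) and land at 32 hours.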

Real-World Example: Anthropic's Claude Research Agent

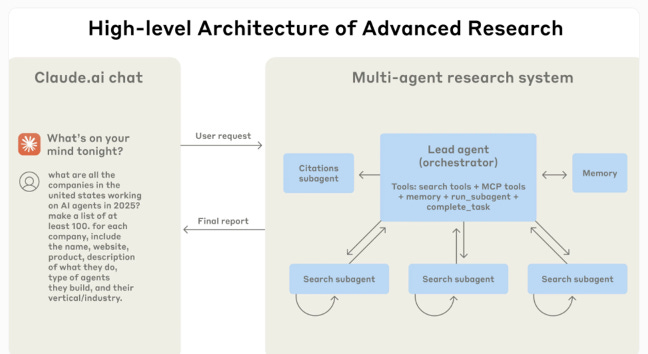

To understand how this works in practice, consider Anthropic's Claude Research agent, whose architecture Anthropic described in detail in June 2025.

The Multi-Agent Architecture

Instead of one AI trying to handle everything, the system uses a team approach:

Lead Agent (Claude Opus 4): Acts as the project manager, interpreting your request and creating a research plan

Specialized Sub-Agents (Claude Sonnet 4): Work in parallel as research specialists, each focusing on different aspects of the search

Orchestrator: Coordinates the entire team's efforts

Memory Module: Combines all findings into a comprehensive, well-sourced report.
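The team approach above can be sketched as a small fan-out, fan-in program. This is an illustration of the general pattern only, not Anthropic's code: `plan_subtasks`, `run_sub_agent`, and `combine_findings` are hypothetical stand-ins that return canned strings where a real system would call a model and search tools.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_subtasks(request: str) -> list[str]:
    """Lead agent (stub): split the request into independent research angles."""
    aspects = ["background", "recent developments", "open questions"]
    return [f"{aspect} of {request}" for aspect in aspects]


def run_sub_agent(subtask: str) -> dict:
    """Sub-agent (stub): research one subtask and return a finding with a source."""
    return {
        "subtask": subtask,
        "finding": f"[stub finding for: {subtask}]",
        "source": f"[stub source for: {subtask}]",
    }


def combine_findings(request: str, findings: list[dict]) -> str:
    """Final step (stub): merge all findings into one sourced report."""
    lines = [f"Report: {request}"]
    for item in findings:
        lines.append(
            f"- {item['subtask']}: {item['finding']} (source: {item['source']})"
        )
    return "\n".join(lines)


def research(request: str, max_workers: int = 3) -> str:
    """Orchestrate: plan, run the sub-agents in parallel, then combine."""
    subtasks = plan_subtasks(request)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the subtasks.
        findings = list(pool.map(run_sub_agent, subtasks))
    return combine_findings(request, findings)


print(research("AI agents"))
```

Threads are used here because real sub-agents would spend most of their time waiting on network calls, which is where running them side by side pays off.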

In internal tests, this multi-agent setup outperformed a standalone Claude Opus 4 agent by 90.2%. To evaluate performance, Anthropic uses an LLM-as-judge framework that scores outputs on factual accuracy, source reliability, and effective tool use. They argue this method is more consistent and efficient than traditional evaluation approaches, positioning large language models as managers of other AI systems.

Why This Approach Works Better

This multi-agent system outperformed a single Claude Opus 4 agent by 90.2% in internal testing. The key advantages:

Speed: Multiple agents work simultaneously rather than sequentially

Specialization: Each sub-agent can focus on what it does best

Comprehensiveness: Parallel research covers more ground more thoroughly

Quality: Better coordination leads to more accurate, well-sourced results

Measuring Success

Anthropic evaluates their agents using an "LLM-as-judge" framework, scoring outputs on:

Factual accuracy

Source reliability

Effective tool usage

This represents a shift toward AI systems managing other AI systems—a glimpse into how complex AI workflows might operate in the future.
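As a rough illustration of the LLM-as-judge idea, the sketch below scores one output against the three criteria listed above. It rests on several assumptions of mine: the judge is any function that takes a prompt and returns a reply, the prompt wording is invented, scores are on a 0 to 1 scale, and the overall score is a plain average. Anthropic's actual rubric and prompts are not reproduced here.

```python
from statistics import mean
from typing import Callable

# The three criteria named in the text.
CRITERIA = ("factual accuracy", "source reliability", "effective tool usage")


def build_judge_prompt(criterion: str, task: str, output: str) -> str:
    """Hypothetical prompt asking the judge model for a single number."""
    return (
        f"Task: {task}\n"
        f"Output: {output}\n"
        f"Rate the output's {criterion} from 0.0 to 1.0. Reply with the number only."
    )


def parse_score(reply: str) -> float:
    """Turn the judge's reply into a score, rejecting out-of-range values."""
    score = float(reply.strip())
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    return score


def evaluate(task: str, output: str, judge: Callable[[str], str]) -> dict:
    """Ask the judge once per criterion and average the results."""
    scores = {
        criterion: parse_score(judge(build_judge_prompt(criterion, task, output)))
        for criterion in CRITERIA
    }
    scores["overall"] = mean(scores.values())
    return scores


def stub_judge(prompt: str) -> str:
    """Stand-in for a real model call; always returns the same score."""
    return "0.5"


print(evaluate("Summarize agent research", "[agent report]", stub_judge))
```

Passing the judge in as a function keeps the scoring logic testable without a model: swap `stub_judge` for a real model call when one is available.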

The Bigger Picture

AI agents mark a transition from AI as a conversational tool to AI as an autonomous digital workforce, capable of perceiving, reasoning, and acting to accomplish complex, multi-step tasks with minimal human oversight.

So now we have agents that can do things.


“AI Agent” screenshot from class.
