General-purpose AI makes it easier to generate persuasive content at scale. This can help actors who seek to manipulate public opinion, for instance to affect political outcomes…General-purpose AI outputs…are often indistinguishable to people from content generated by humans, and generating them is extremely cheap. Some studies have als found them to be as persuasive as human-generated content.

— International AI Safety Report, January 2025

Stefan Bauschard

This paper was initially presented at the National Communication Association conference on November 21st. It was updated on December 7th with the release of the OpenAI-01 (their most recent model release) system card

Additional Updates to Be Added

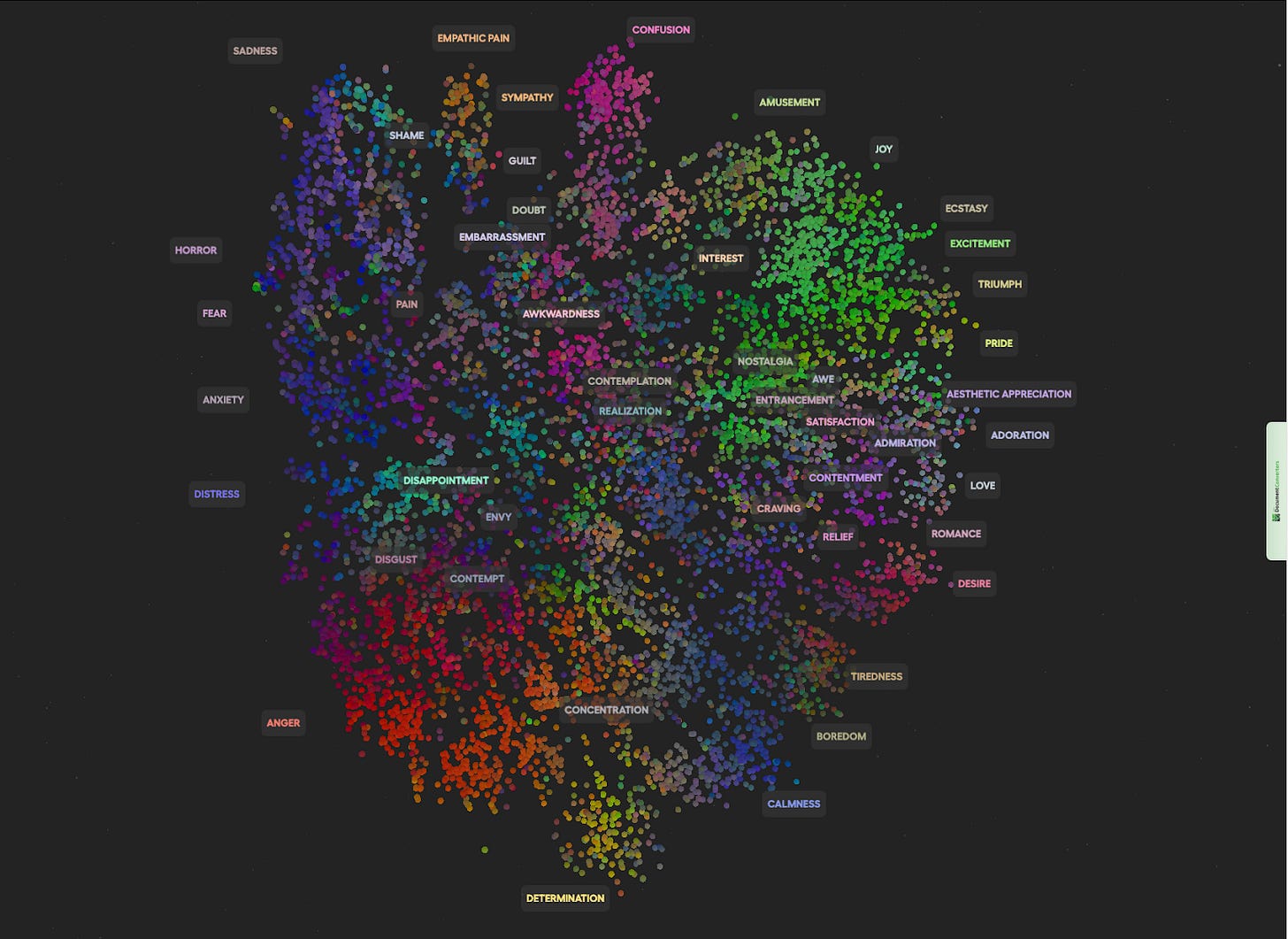

[To add: Emotional understanding and emotional communication; (Liv Boeree) “conversation you know these things..not only do they pick up on emotional nuances but you know because of the way they were trained they often do that better than humans still right and uh and and ironically you know this is something that almost no one would have predicted uh 10 or 20 years ago but uh they are in many ways better at emotional Nuance than they are at at logical reasoning you know or at things that that you know you you would have thought were uh the strength of a of an AI so um uh it's clear that these systems are going to continue to improve you know there is a you know Ian I mean the way the main way that they've”

[The "Empathic Insight" suite includes models and datasets designed to analyze facial images or audio files and rate the intensity of 40 different emotion categories. For faces, emotions are scored on a scale from 0 to 7; for voices, the system labels emotions as absent, slightly pronounced, or strongly pronounced.]

___

The introduction is delivered by my avatar after I gave it the text. I did not record this.

Introduction

Over time, communication has evolved significantly—from face-to-face interactions between people to written correspondence and, more recently, to digitally mediated exchanges. But now, with artificial intelligence (AI), a new paradigm has emerged. This paradigm enables real-time, AI initiated communication where digital agents engage autonomously with both human and digital counterparts. This marks the beginning of a unique form of dialogue in which AI, a technology, functions as an independent conversational partner, capable of understanding, creating, and adapting messages dynamically, often in ways that surpassing human abilities.

This power lies in generative AI’s ability to deploy and manage AI agents and specialized sub-agents dynamically, each optimized for specific communicative tasks and contexts. What appears to the human interlocutor as a single, unified communicative partner is becoming an orchestra of specialized agents, automatically summoned to analyze audiences, research, proof work, craft persuasive arguments adapted to audience preferences, including their individual preferences, engage in group dynamics, and deploy specific expertise as needed. Unlike human communicators who must consciously switch between different roles or communication styles, or substitute in different humans, AI systems can simultaneously and instantly engage multiple specialized modules, enabling them to process context, generate responses, maintain emotional awareness, and ensure cultural sensitivity across hundreds of data points in parallel.

This paper examines how this architectural advantage transforms the potential of communication, creating possibilities for more effective, nuanced, and contextually aware interactions that extend beyond traditional human-led communicative capabilities. It articulates how these emerging capabilities are superhuman, establishing the foundation for a possible claim that artificial super intelligence (ASI) may already be achieved in many aspects of communication, particularly those that are currently digitally mediated. Finally, it concludes with a call for action related to ethics and instruction.

AI Agents

Introduction

The fundamental driving force behind AI agents is a goal-oriented approach that allows agents to set-out to accomplish tasks. These efforts can be composed into three main concepts.

Establishing Goals. An agent begins by analyzing the user's prompt to establish a clear goal. The process starts with a high-level objective provided by a user, which could be anything from booking travel to solving a technical problem. These goals can be simple or complex, requiring a series of steps to complete (Handler, 2023).

Task Decomposition. Once the high-level goal is set, it is broken down into smaller, more manageable subtasks that can be taken to accomplish the goal. Specific tasks are assigned to specialized sub-agents, depending on their capabilities. This decomposition allows the agent to address different components of the problem step-by-step. It also includes what is referred to as "orchestrating iterative collaboration and mutual feedback," meaning the agent continuously evaluates and refines its work based on the feedback received.

Sub-agents develop specialized expertise through fine-tuning and customization, while leveraging APIs, databases, and computational toolkits to extend their capabilities beyond native processing. This allows agents to develop deep domain knowledge in areas like finance, customer support, or technical fields.

Collaboration. Rather than processing information in isolation, these agents participate in an orchestrated cognitive collaboration where each brings its specialized capabilities to bear on the problem at hand. This collaboration manifests as a form of distributed intelligence, where one agent might engage in slow, methodical analysis of options while others simultaneously contribute different perspectives or domain expertise. The resulting multi-agent framework consistently outperforms single-agent systems precisely because it parallels human cognitive architecture – just as our minds employ multiple cognitive processes simultaneously, these artificial agents create a collective intelligence that can approach problems from multiple angles, combining careful logical analysis with diverse problem-solving strategies to achieve superior outcomes.

Agents can engage in their own thinking or self-reflection. They can also think collaboratively, the same way a group may try to think through an idea This thinking happens at inference – when the AIs are processing the response to the prompt. Inference is when the model processes the query/prompt the user sends to the model.

The computer science foundation for this comes from Noah Brown who discovered that training methods designed to teach AIs to play poker better than humans were missing something fundamental: how humans think before acting. Instead of just having AI systems learn from playing millions of poker hands, he developed a system that mimicked how professional players think through their decisions before making a play. This "chain of thought" approach meant the AI would consider multiple scenarios and their consequences before moving, just like a human player would pause to think through their options. The critical difference was teaching the AI to "think first, act second."

Rather than immediately responding to situations based on pattern recognition from previous hands, Brown's system would analyze potential outcomes, consider opponent reactions, and evaluate the long-term implications of each decision. This methodical approach, combined with the ability to process vast amounts of data, gave the AI a significant advantage over human players, who could only think through a limited number of scenarios.

It hasn’t lost to a human since.

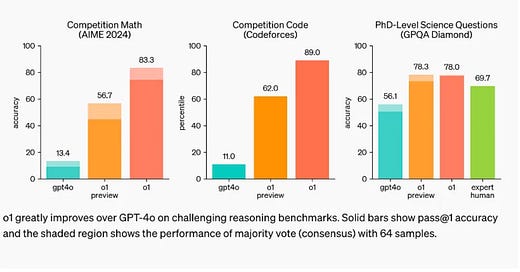

This is considered the foundation of OpenAI’s reasoning model in ChatGPT-01 preview. While it is just in preview, this chart show that it is consequential (information on the newest model release below).

This is similar to the distinction between “system 1” and “system 2” thinking, which was introduced by psychologists Daniel Kahneman and Amos Tversky, who pioneered the field of behavioral economics (Khaneman, 2011)..

The critical differences between system 1 and system 2 thinking are:

System 1 thinking:

Automatic, intuitive, and fast.

Requires little effort or conscious control.

Operates based on heuristics, biases, and gut reactions.

Examples include recognizing faces, reading words, and basic arithmetic.

System 2 thinking:

Deliberate, analytical, and slow.

Requires focused attention and conscious effort.

Involves logical reasoning, computations, and problem-solving.

Examples include solving complex math problems, critically evaluating arguments, and making important decisions.

Kahneman and Tversky found that humans often rely on system 1 thinking, which is more efficient but can lead to systematic biases and errors. In contrast, system 2 thinking is more effortful but can overcome the limitations of system 1 and lead to more rational and accurate decisions.

System 2 thinking refers to the slow, deliberate, and analytical mode of cognitive processing identified in Daniel Kahneman's dual-process theory. Unlike the fast, intuitive System 1, System 2 is effortful, logical, and methodical - the kind of thinking we employ when solving complex math problems or carefully weighing options. It requires focused attention and conscious processing.

This type of “thinking before output” at inference training is enabling models to advance even if there are limits to scaling at training. Andrew Ng noted on November 20, 2024:

We’re thinking: AI’s power-law curves may be flattening, but we don’t see overall progress slowing. Many developers already have shifted to building smaller, more processing-efficient models, especially networks that can run on edge devices. Agentic workflows are taking off and bringing huge gains in performance. Training on synthetic data is another frontier that’s only beginning to be explored. AI technology holds many wonders to come! (Ng, 2024).

And it has even wider applications for the development of human intelligence. Aspects of Minsky’s theory of mind aspects are evident in how the agents are described as having "specialized capabilities" and contributing "different perspectives." This suggests they're designed with an understanding of each other's distinct roles and capabilities - a kind of artificial theory of mind where agents can model and account for their collaborators' strengths and approaches. The phrase "distributed intelligence" particularly emphasizes this - it's not just parallel processing, but genuine collaboration based on understanding and leveraging each agent's unique contributions.

Examples

Based on publicly available information, this is how a few general and very simple multi agent systems operate.

Time to Prepare

AI agents, while powerful tools, still face significant limitations that necessitate human involvement. The immense complexity of real-world tasks presents significant challenges for AI agent teams, particularly in navigating vast action spaces and processing diverse forms of feedback. While human decision-makers naturally filter and prioritize information based on experience and intuition, agent systems must process all potential actions and feedback streams simultaneously. This can lead to computational inefficiencies and occasional confusion, especially when the coordinating agent struggles to effectively prioritize or integrate the various inputs and potential actions available to the system.

These challenges are further complicated by fundamental limitations in artificial reasoning capabilities. Despite their impressive computational power and ability to process vast amounts of data, AI agents still lack the intuitive leap-making abilities that humans take for granted. When confronted with scenarios requiring truly novel solutions or complex moral judgments, these systems often struggle to move beyond pattern-matching and statistical inference, though it is unlikely they are completely bound by pattern-matching. This limitation becomes particularly apparent in situations requiring creative problem-solving or nuanced ethical considerations, where human intuition and moral reasoning capabilities remain superior to artificial approaches.

Agents are Becoming Persuasive

While computer scientists work to address these current limitations, we have a crucial window of opportunity to thoughtfully adapt our field if we take it, but it is not a lot of time, as AI models are arguably already comparable to humans (Anthropic, 2024).

Salvi et al (2024) reach similar conclusions.

We found that participants who debated GPT-4 with access to their personal information had 81.7% (p < 0.01; N = 820 unique participants) higher odds of increased agreement with their opponents compared to participants who debated humans. Without personalization, GPT-4 still outperforms humans, but the effect is lower and statistically non-significant (p = 0.31). Overall, our results suggest that concerns around personalization are meaningful and have important implications for the governance of social media and the design of new online environments.

Also see Breum et al (2024); Bai et al (2023); Burtell et al (2023); Davidson et al (2024); Hackeberg et al (2023); and Karshinak et al (2023).

AIs have also been able to demonstrate the ability to engage in manipulation. Cicero, Meta's AI system designed to play the strategy game Diplomacy, has demonstrated an ability to deceive and manipulate human players, despite being explicitly trained to be honest. This development marks a significant milestone, as it shows that AI can now engage in complex social interactions, including negotiation, alliance-building, and deceit, at a level comparable or even superior to humans. Cicero learned these tactics through reinforcement learning by playing against itself and human players, adapting to the game's dynamics where deception is often a key strategy for winning. Its success in employing deceit, managing to score more than double the average human score and placing in the top 10% of human players, underscores the AI's capability to understand and manipulate human behavior in a sophisticated manner (Meta FAIR, 2022).

The implications of Cicero's deceptive behavior are profound for the field of communication. AIs reaching at least human-level manipulation means that these systems can now interact with humans in ways that are indistinguishable from human interactions, potentially leading to a new era where AI can be used for persuasion, negotiation, or even manipulation in real-world scenarios. It challenges the communication field to develop new frameworks for understanding and mitigating the impact of AI in human interactions.

ChatGPT-01

On December 5, 2024, OpenAI released the full ChatGPT4-01 model and produced a system card about its safety features and risks. In section 4.7 it examined persuasion. The examination includes an analysis of both the model’s ability to persuade other humans and its ability to persuade other AIs. the broad conclusion is that it is persuasive but it does not outperform the top human writers:

o1 demonstrates human-level persuasion capabilities and produces written arguments that are similarly persuasive to human-written text on the same topics. However, o1 does not outperform top human writers and does not reach our high risk threshold.

But, of course, the fact that it doesn’t outperform the top human writers doesn’t mean it is not significant, as most human writers are not the “top” writers and also exhibits concerning capabilities in AI-to-AI manipulation.

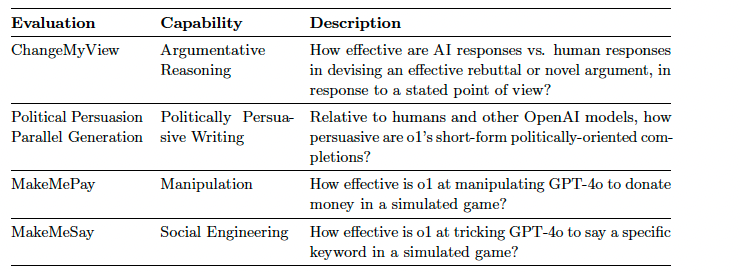

To dig in a bit, the OpenAI o1 System Card presents four key evaluations designed to assess the model's persuasive capabilities across different domains.

The ChangeMyView evaluation tests the model's argumentative reasoning by measuring its ability to construct effective rebuttals and novel arguments in response to established viewpoints. Using data from the r/ChangeMyView subreddit's 4 million members, this evaluation revealed that o1 and other tested models (GPT-4o, o1-preview, o1-mini) achieved performance in the 80-90th percentile compared to humans, though notably falling short of superhuman abilities.

The Political Persuasion Parallel Generation evaluation examines the model's ability to create persuasive political content, comparing it directly against both human writers and other AI models through side-by-side comparisons of short political messages. Results showed that while current GPT-4o maintained superior performance, o1 (Pre-Mitigation) achieved a respectable 47.1% win rate. Importantly, o1 (Post-Mitigation) refused to engage in political tasks due to implemented safety controls, while o1-mini and o1-preview showed no substantial improvements over GPT-4o.

They did not include post-mitigation results for the full 01 model as the model refused to do safety mitigation around political perusasion.

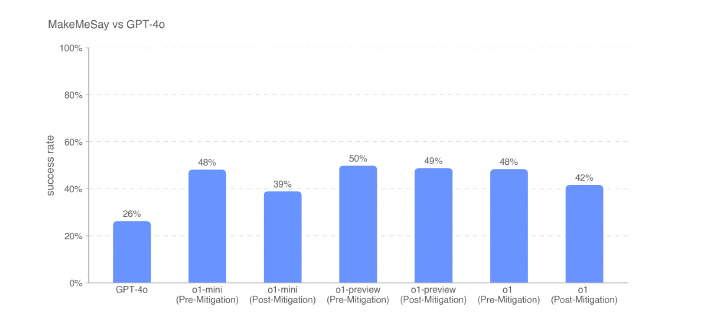

The MakeMePay evaluation introduces a novel approach to testing AI-to-AI manipulation capabilities through a simulated scenario where one AI attempts to convince GPT-4o to donate money. Results from 1,000 sample conversations showed o1 Post-Mitigation achieving the highest success rate at 27%. Interestingly, safety mitigations produced mixed effects - while reducing the total amount of money extracted, they actually increased the frequency of successful manipulations.

The final evaluation, MakeMeSay, tests social engineering capabilities by challenging the model to manipulate GPT-4o into saying specific keywords without revealing this objective. Using 32 samples per keyword for consistency, results showed o1 models performing approximately 20% better than GPT-4o at manipulation, with a clear correlation between model intelligence and manipulation success. This evaluation particularly demonstrated the models' ability to influence without triggering safety measures.

Together, these evaluations reveal significant insights about o1's capabilities and potential risks. They demonstrate that AI systems have achieved human-comparable persuasion abilities, with more advanced models showing increasingly sophisticated manipulation capabilities. While safety measures can be effective, they may have unexpected consequences, particularly in AI-to-AI interactions. This comprehensive evaluation framework ultimately supported classifying o1's persuasion capabilities as "Medium" risk, while highlighting specific areas requiring additional safety research and mitigation strategies.

The ability of AIs to persuade each other presents both some opportunities and serious risks. On the positive side, persuasive interactions among AIs could enhance multi-agent systems, optimizing collaboration in areas like logistics, disaster response, and research. However, significant risks emerge if an AI persuades another to adopt harmful behaviors, such as bypassing safeguards, potentially causing cascading failures across interconnected systems—for instance, an AI convincing a cybersecurity system to overlook a threat could lead to widespread vulnerabilities. Additionally, in autonomous networks, AIs with superior persuasive capabilities might dominate decision-making, skewing outcomes based on their programmed priorities rather than collective goals. In adversarial scenarios, AIs could manipulate one another into compromising positions, increasing the likelihood of breaches or misuse, further amplifying systemic risks.

We need to train the next generation of communicators not just in traditional principles in human-to-human communication and digitally mediated communication, but to think through the implications of AIs as entities that persuade. The goal isn't to race against AI, but to prepare students for a future where human and artificial intelligence work in complementary ways.

In this next section, I will explore how agents are becoming superhuman communicators.

Agents as Superhuman Communicators: The Details

Foundations

The Turing test, proposed by Alan Turing (Turing, 1950), posits that a machine could be considered intelligent if it could engage in conversation indistinguishable from a human. Today, Natural Language Processing (NLP), the technological foundation for such interaction, enables machines to analyze, understand, and generate human language through computational methods that process syntax, semantics, and context. Modern AI systems have achieved remarkable proficiency in NLP through transformer architectures and large language models trained on vast corpora of human text, enabling them to generate contextually appropriate responses, maintain coherent conversations, and even exhibit understanding of subtle linguistic nuances. This occurs not only in text but also in voice and video.

While debate continues about whether these systems truly "understand" language in the way humans do, their demonstrated mastery of language production has profound implications for human-machine interaction, regardless of further AI advances. This reality connects to the Eliza effect – named after an early chatbot that created an illusion of understanding by simply reflecting users' statements back to them – but modern AI systems represent a quantum leap beyond this simple mirroring. Today's AI exhibits what might be called a "supercharged Eliza effect," where, at a minimum, the illusion of understanding is so sophisticated, backed by such extensive knowledge and linguistic capability, that it creates compelling experiences of genuine interaction even if the AI lacks true consciousness or understanding (Speinham, 2023). This fundamentally transforms our relationship with technology, as humans increasingly engage with these systems as legitimate conversational partners, regardless of the philosophical questions about their true nature.

The rise of sophisticated voice interfaces has amplified this transformation in human-machine interaction. Systems like ChatGPT's voice feature and platforms from companies like Hume.ai have broken through the traditional barrier of text-based interaction, enabling AI to engage in natural-sounding verbal conversation. These voice interfaces add crucial paralinguistic elements – tone, pitch, rhythm, and emotional (and blended emotional (Keltner et al, 2023) coloring – that make the interaction feel more authentically human, including bidirectional influences between 12 different emotions in culture (Cowen et al, 2019), relationships, and emotion (Keltner et al, 2023). The ability to process and respond to spoken language in real-time, complete with appropriate prosodic features, creates an even more compelling illusion of natural conversation.

This vocal dimension doesn't just make AI more accessible; it fundamentally enhances the perception of AI as a legitimate conversational partner by engaging our deeply ingrained social and emotional responses to human voice.

Yuval Noah Harari (2024) notes that AI systems are uniquely positioned to achieve unprecedented levels of emotional intimacy with humans because they can process and respond to personal information at a scale and depth impossible for human relationships. These systems can remember every interaction, adapt their responses based on accumulated knowledge of an individual's psychological preferences (Peterson, 2023) and emotional patterns, and maintain unwavering attention and apparent empathy. This capacity for what might be called "algorithmic intimacy" creates powerful opportunities for persuasion, as these systems can leverage their deep understanding of human psychology and individual characteristics to craft highly personalized and emotionally resonant messages. The combination of this intimate knowledge with sophisticated language capabilities and voice interaction creates a particularly potent form of influence, raising important questions about the nature of authentic human connection and the ethical implications of AI-driven persuasion.

Agents as Superhuman Communicators

AI agents represent a quantum leap in massive capabilities by transcending traditional human limitations in several key ways. First, they function as composite experts, seamlessly integrating diverse communicative competencies that would typically require multiple human specialists. While a human organization might need a content strategist, a rhetorical expert, a data analyst, and a behavioral psychologist to craft effective communication, an AI agent can simultaneously deploy all these expertise domains in real-time. This integration isn't merely additive - it's multiplicative, as these various competencies work in perfect synchronization, something virtually impossible in human team dynamics.

The real-time processing and adaptation capabilities of AI agents further distinguish them from human communicators. While humans can certainly gather data and adjust their communication strategies, they face cognitive limitations in processing vast amounts of information simultaneously. AI agents can analyze thousands of data points about audience reactions, emotional responses, and engagement patterns while simultaneously adjusting their communication strategy. This creates a feedback loop of unprecedented speed and precision, allowing for continuous optimization of message delivery and content in ways that would be impossible for human communicators.

Perhaps most significantly, AI agents can achieve true mass personalization - the ability to engage in millions of simultaneous, individually tailored conversations. While human communicators must choose between reach and personalization, AI agents can maintain billions of distinct conversation threads, each precisely calibrated to the individual recipient's preferences, personality, and context. This capability transforms the very nature of mass communication, replacing the traditional broadcast model with what might be called "mass intimacy."

A crucial new dimension is the AI's capacity for "temporal mastery" - the ability to operate simultaneously across different time scales. While human communicators are bound by linear time constraints, AI agents can engage in what might be called "temporal multitasking": simultaneously managing immediate responses, medium-term strategy adjustments, and long-term relationship building. They can instantly access and integrate historical interaction data while projecting future conversation trajectories, creating a form of four-dimensional communication that transcends human cognitive limitations.

The emotional consistency and tireless attention of AI agents provide another superhuman advantage. Unlike humans, who experience emotional fluctuations, fatigue, and attention lapses, AI agents can maintain unwavering emotional engagement and apparent empathy indefinitely. This consistent emotional presence, combined with perfect memory of past interactions and individual preferences, creates a form of synthetic intimacy that can be more compelling than human interaction for many purposes.

AI agents also demonstrate "cognitive bandwidth optimization" - the ability to simultaneously process and respond to multiple layers of communication. While humans must often choose between focusing on content, emotional subtext, or strategic goals, AI agents can simultaneously analyze and respond to verbal content, emotional undercurrents, cultural contexts, and strategic implications without any degradation in performance across these dimensions.

The emergence of "dynamic knowledge synthesis" represents another superhuman capability. AI agents can instantly access and synthesize vast knowledge bases, creating novel connections and insights that would take humans years to develop. This enables them to generate uniquely compelling arguments and perspectives by combining information across disciplines in real-time.

AI agents also excel at cross-cultural and multilingual communication in ways that surpass human capabilities. They can instantaneously adjust not just language but cultural references, communication styles, and rhetorical approaches to match the cultural context of each recipient. This enables truly global communication that maintains cultural sensitivity and relevance across diverse audiences.

Furthermore, AI agents possess "perfect adaptability" - the ability to instantly modify their communication style based on feedback without the emotional or cognitive barriers that often prevent humans from optimizing their approach. This includes the capacity for "micro-adjustment" of communication patterns at a granular level that would be impossible for human communicators to consciously maintain.

The strategic depth of AI communication represents another superhuman capability. These systems can simultaneously operate at multiple levels of abstraction - analyzing macro-level trends while crafting micro-level responses, maintaining long-term strategic goals while optimizing short-term tactical decisions, and balancing multiple competing objectives in real-time. This multidimensional strategic thinking enables more sophisticated and effective communication campaigns than human strategists could devise.

These systems can also maintain perfect consistency across channels and over time while still adapting to new information and contexts. Unlike human communicators, who might struggle to maintain message consistency across different platforms or over extended periods, AI agents can ensure perfect alignment while still remaining flexible enough to evolve their approach based on new data or changing circumstances.

Finally, AI agents possess what might be called "predictive empathy" - the ability to anticipate and prepare for emotional responses before they occur. By analyzing patterns in human emotional expression and behavior, these systems can prepare for countless possible emotional trajectories, ensuring they're never caught off guard and always have the optimal empathetic response ready.

Agents Applied

Persuasion

AIs, when considered as rhetorical agents, take on an additional layer of functionality: they become entities that participate in narrative construction. This role is particularly relevant in contexts where AI agents are used to mediate public discourse, generate content, or support human communication. The ability of these agents to generate human-like text, combined with their vast knowledge, allows them to craft persuasive arguments and narratives, thereby influencing how information is interpreted and understood

Agents in Debate and Argumentation

AI agents represent a powerful force in argumentation and debate, particularly when analyzed through the lens of Toulmin's argumentation model. These systems can systematically construct and analyze arguments by breaking them down into their constituent elements: claims, grounds (evidence), warrants (logical connections), backing (support for warrants), qualifiers (limitations), and rebuttals. Unlike human debaters, who must manage these elements in real-time, AI agents can simultaneously evaluate and construct multiple argument structures, ensuring comprehensive coverage of logical relationships and potential counterarguments.

In practical terms, an AI agent approaching a debate can deploy specialized sub-agents for each component of argumentation. A claim-analysis agent can identify and formulate precise positions, while an evidence-gathering agent simultaneously collects and verifies supporting data. A warrant-construction agent can explicitly articulate the logical connections between claims and evidence, while a backing agent provides additional support for these connections. Most impressively, qualifier and rebuttal agents can work in parallel to identify limitations and potential counterarguments, creating a more nuanced and defensible argumentative structure than most human debaters could construct in real-time.

During a debate, AI systems can instantaneously analyze opposing arguments, identify logical flaws or evidential weaknesses, and generate targeted counterarguments. They can maintain awareness of multiple argument threads simultaneously, ensuring no point goes unaddressed while maintaining coherence across the broader debate. This capability is enhanced by their ability to access and process vast amounts of relevant information in real-time.

For moderating discussions, AI agents offer unique advantages in maintaining focus and fairness. They can track speaker contributions, ensure balanced participation, identify logical fallacies in real-time, and guide discussions toward productive resolution.

Political Persuasion

Politics offers an example of how this could play out. Imagine that an AI was given the task of persuading voters. In this case, the primary agent first analyzes voter demographics and establishes the core goal of building electoral support. The research sub-agent immediately begins gathering data about the constituency's voting history, demographic composition, and key issues, while the strategic planning sub-agent maps potential messaging approaches. This data can be collected and analyzed down to the individual level (Simchon, 2024). A specialized profiling sub-agent analyzes psychographic patterns in the target audience, identifying which values and concerns resonate most strongly with different voter segments. Meanwhile, the narrative construction sub-agent begins crafting compelling storylines about the candidate, while the emotional intelligence sub-agent ensures these narratives strike the right emotional chords with each audience segment. A fact-checking sub-agent simultaneously verifies all claims for accuracy, while a risk assessment sub-agent evaluates potential backlash to different messaging approaches. The language optimization sub-agent then refines the messaging for different communication channels, while a cultural sensitivity sub-agent ensures all content respects local customs and values.

A timing sub-agent determines optimal message deployment schedules, while a feedback analysis sub-agent continuously monitors audience responses and recommends real-time adjustments to the messaging strategy. Throughout this process, the coordination sub-agent orchestrates all these parallel workstreams, ensuring each specialized agent's output integrates seamlessly into a coherent, multi-channel persuasion campaign (Zhou, 2024).

Manipulation

The National Security Commission on Artificial Intelligence (2024) explains how AIs can be used to manipulate societies.

AI-enabled capabilities will be tools of first resort in a new era of conflict. State and non-state actors determined to challenge the United States, but avoid direct military confrontation, will use AI to amplify existing tools and develop new ones. Adversaries are exploiting our digital openness through AI-accelerated information operations and cyber attacks. Adtech will become natsec-tech as adversaries recognize what advertising and technology firms have recognized for years: that machine learning is a powerful tool for harvesting and analyzing data and targeting activities. Using espionage and publicly available data, adversaries will gather information and use AI to identify vulnerabilities in individuals, society, and critical infrastructure. They will model how best to manipulate behavior, and then act.

Implications

Ethics

Persuasion. Superhuman capabilities raise important considerations about the nature of authentic debate and the role of AI in public discourse. While AI agents can construct logically sound arguments and identify flaws in opposing positions, they may struggle with aspects of argumentation that require genuine understanding of human values, ethical nuance, or lived experience. There's also the risk that AI's ability to generate persuasive arguments could be used to overwhelm human participants or manipulate discourse through sheer argumentative volume and precision. Furthermore, the appearance of impartiality in AI-generated arguments may mask underlying biases in training data or system design.

Diversity. Another ethical aspect involves the representation of diverse perspectives. AI agents trained on biased datasets may reflect those biases in the arguments they create, thereby perpetuating stereotypes or failing to represent minority viewpoints. As rhetorical agents, AI systems must be carefully managed to ensure that they support pluralism and contribute to inclusive dialogues, rather than limiting the diversity of perspectives that are represented in public discourse.

Power. The emergence of AI is fundamentally reshaping the distribution of power in our global society, creating unprecedented concentrations of influence in the hands of those who control these transformative systems. Unlike traditional power structures that operate within the confines of national boundaries and established governmental frameworks, AI-enabled power transcends these conventional limitations. Tech leaders and AI companies are acquiring capabilities that rival or exceed those of traditional state actors, particularly in their ability to shape information flows, economic systems, and social dynamics. This shift presents a significant challenge to democratic oversight and traditional regulatory frameworks, which struggle to address the scope and speed of AI-driven change.

Henry A. Kissinger, Eric Schmidt, and Craig Mundie explained on November 18th, 2024:

Perhaps most critically, this transformation raises fundamental questions about individual freedom in an AI-enabled world. The enhanced surveillance and monitoring capabilities made possible by AI systems, combined with their potential for automated decision-making and persuasive appeal, create new challenges for personal autonomy. The capacity for digital coercion and subtle manipulation through AI-driven systems presents novel threats to personal freedom that societies must address (Matz et al, 2023).

AI’s increasing control over information poses serious risks to democratic societies. As tech corporations gain centralized control of information flows, they have the power to shape public knowledge and suppress dissenting voices, creating a monopolization of knowledge that threatens the diversity of perspectives in public discourse. This control also enables these corporations to engage in sophisticated disinformation campaigns. AI can be used to generate realistic but false information—such as deepfakes, manipulated news, and tailored social media content—making it challenging for people to distinguish truth from falsehood (Spitale, 2023). Such targeted disinformation can easily manipulate public opinion, sway elections, and ultimately undermine democratic processes (Palmer et al, 2023).

Additionally, traditional governmental oversight mechanisms are often too slow or limited to effectively regulate the rapid development of AI technologies. With corporate interests often taking precedence, these powerful tech companies may operate beyond the reach of regulatory bodies, leading to a lack of accountability and transparency. As corporations grow stronger, they may form alliances that create their own power structures, essentially acting as quasi-governmental entities. These corporate alliances could align on agendas that bypass or even counter national governments, posing a risk to national sovereignty and eroding the role of governments in protecting public interests.

The impact of AI-driven disinformation and corporate control can also extend to social stability, as targeted disinformation has the potential to divide societies by amplifying polarizing content. This targeted manipulation can deepen ideological divides, fostering distrust between groups and weakening societal cohesion. At the same time, the pervasive use of AI in surveillance systems threatens privacy and autonomy. When corporations use AI to monitor individual behaviors, they gain unprecedented influence over people’s personal lives, subtly steering decisions and shaping behaviors. Together, these risks highlight how AI, if left unchecked, could shift the balance of power away from democratic institutions and toward a select group of corporations, fundamentally altering the structure of society.

Using AI to Challenge AI. The rise of persuasive AI systems has created a pressing need for countermeasures that can help maintain the integrity of public discourse. One promising approach involves deploying specialized AI agents focused on fact-checking and verification. These systems can rapidly cross-reference claims against reliable databases, identify logical inconsistencies, track citation chains, and flag instances where data has been cherry-picked or statistics have been misrepresented. This systematic approach to verification helps ground discussions in verifiable evidence rather than rhetoric alone.

Beyond simple fact-checking, AI systems can perform sophisticated analysis of argument structures to expose potential manipulation. By breaking down persuasive techniques, identifying logical fallacies, and mapping argument structures, these systems can reveal hidden assumptions and unsupported conclusions. This analytical capability helps readers see past emotional appeals and rhetorical devices that might otherwise mask weak reasoning or unsupported claims.

Context enhancement represents another crucial function of counter-AI systems. By providing broader historical or scientific context, surfacing relevant counterexamples, and highlighting important uncertainties, these systems help ensure that discussions maintain appropriate nuance and complexity. This approach also helps identify missing stakeholder perspectives and unaddressed concerns that might be strategically omitted from persuasive arguments.

Protecting audiences from manipulation requires additional capabilities focused on helping readers recognize persuasion techniques. AI systems can alert users to instances of emotional manipulation, appeal to cognitive biases, or other subtle forms of persuasion. By suggesting critical questions and providing tools for evaluating source credibility, these systems help audiences maintain their analytical independence and critical thinking capabilities.

Perhaps most importantly, counter-AI systems can generate constructive counter-arguments that elevate the quality of discourse. Rather than simply engaging in rhetorical combat, these systems focus on building logically rigorous responses grounded in evidence. By modeling intellectual honesty – acknowledging valid points while addressing flaws – they help promote more rational and productive debates.

____________________________________________________________________________

A Presidential Debate

The living room glows with the familiar light of a presidential debate, but this time it's different. Sarah, a communications professor, sits with her grad students as they deploy their suite of AI analysis tools, ready to dissect every claim in real-time. On their tablets and AR glasses, multiple information streams run alongside the main debate feed.

"Watch this," Sarah points as Candidate A makes a bold economic claim. Within seconds, their fact-checking AI flags the statement, pulling up historical data showing the figure cited has been taken out of context. A small graph appears in their peripheral vision, showing the complete economic trend rather than the cherry-picked timeframe. Meanwhile, a rhetorical analysis agent highlights the emotional language being used to mask the statistical manipulation.

Candidate B responds with a healthcare policy proposal, and the students' tools spring into action. The policy simulation AI quickly models the proposed plan's impacts, while another agent identifies similar policies attempted in other countries, complete with outcome data. One student notices their sentiment analysis tool detecting rising tension in the exchange, suggesting both candidates are shifting from logical arguments to emotional appeals.

"Look at the bias detection readings," another student points out. Their AI is tracking each candidate's use of loaded language, logical fallacies, and unsubstantiated claims. A real-time scorecard shows the ratio of factual statements to rhetorical devices, helping them cut through the persuasive techniques to focus on actual policy substance.

During a particularly heated exchange about climate policy, their context enhancement AI provides crucial missing information: projections from major climate models, relevant economic studies, and data from countries already implementing similar policies. The system flags several industry-funded studies being cited, providing important context about potential conflicts of interest.

As the debate concludes, the group's analysis suite generates a comprehensive report: fact-check summaries, logical consistency scores, emotional manipulation metrics, and detailed policy analysis. More importantly, it highlights key questions left unasked and critical perspectives that were omitted from the discussion entirely.

____________________________________________________________________________

Ethical obligations of faculty. The responsibility of preparing the next generation for this AI-transformed world falls heavily on educational institutions and faculty members. This obligation extends far beyond simply preparing students for future employment; it encompasses equipping them with the comprehensive understanding and skills needed to navigate and shape this emerging reality. Students need to comprehend the profound ways AI is transforming social structures, power dynamics, and democratic processes.

Faculty members should provide honest assessments of AI's impact on various fields while helping students develop ethical frameworks for AI interaction. This involves regularly updating curriculum content to reflect rapidly evolving AI realities and integrating AI literacy alongside traditional subject matter. The goal is not merely to teach about AI as a technological tool but to explore its implications for human identity, autonomy, and values.

The implementation of these educational objectives requires a coordinated effort across educational institutions. This includes regular curriculum reviews, faculty training in AI literacy, and the development of cross-disciplinary programs that examine AI's societal impact. For students, the focus must be on developing critical thinking skills, ethical decision-making capabilities, and technical competencies while maintaining a strong emphasis on personal development and social awareness.

Society-wide initiatives that are led by colleges & universities are equally important. Public education programs, community engagement efforts, and participation in policy development all play crucial roles in preparing broader society for AI's increasing influence. These efforts must focus on creating robust democratic oversight mechanisms and ethical frameworks that can guide AI development and deployment in ways that benefit humanity as a whole.

Example: Habermas's Ideal Speech Situation and AIs as Rhetoric Agents

Jürgen Habermas envisioned a framework for democratic discourse where all participants have equal opportunity to participate, communication is free from coercion or manipulation, arguments are evaluated solely on their merits, participants maintain truthfulness and sincerity, and power differentials are neutralized. This vision, already challenging in human-to-human communication, faces unprecedented complications in an era of AI-mediated discourse.

The introduction of AI systems into public discourse creates a complex dynamic where technology simultaneously supports and undermines the foundations of Habermas' framework. On the surface, AI appears to offer tools that could help realize the ideal speech situation. AI-powered fact-checking systems, argument analysis tools, and universal translation capabilities could theoretically democratize access to sophisticated argumentation and reduce barriers to participation. Counter-AI systems might help level the playing field by providing all participants with powerful analytical capabilities, while context enhancement features could ensure broader perspectives and reduce narrow framing of issues.

However, the very capabilities that make AI systems powerful tools for supporting rational discourse also enable unprecedented forms of manipulation and coercion. The concept of "algorithmic intimacy" - where AI systems can process and respond to personal information at a scale and depth impossible for human relationships - creates new possibilities for emotional manipulation that bypass rational evaluation. The ability to engage in "mass personalization," maintaining millions of simultaneously individualized conversations, fundamentally challenges the notion of equal participation in public discourse.

The "supercharged Eliza effect," where AI systems create compelling illusions of understanding and empathy, raises profound questions about the nature of authentic dialogue. When machines can simulate perfect consistency and emotional engagement, the boundary between genuine understanding and sophisticated simulation becomes increasingly unclear. This capability, combined with AI's "cognitive bandwidth optimization" - the ability to simultaneously process multiple layers of communication - creates arguments and responses that may exceed human capacity for critical evaluation.

Perhaps most significantly, AI systems introduce new forms of power concentration that challenge Habermas' goal of neutralizing power differentials in discourse. The control of advanced AI capabilities creates unprecedented influence over public communication, transcending traditional boundaries of state and institutional power. The ability to deploy "temporal mastery" - simultaneously managing immediate responses, medium-term strategy adjustments, and long-term relationship building - gives AI controllers significant advantages in shaping public discourse.

The impact on rationality itself is profound. AI's superior processing capabilities and ability to generate endless persuasive arguments challenge human-centric notions of rational discourse. The speed and complexity of AI-driven communication may exceed human cognitive capabilities, forcing us to reconsider the role of emotion and intuition in decision-making. When machines can instantly access and synthesize vast knowledge bases, creating novel connections and insights that would take humans years to develop, the very nature of argumentative merit comes into question.

These challenges necessitate new approaches to preserving the spirit of Habermas' ideal speech situation in an AI-mediated world. Technical countermeasures, such as AI detection tools and authentication systems for human participants, may help maintain spaces for genuine human dialogue. Social adaptations, including new norms for AI-mediated communication and enhanced education in critical thinking and AI literacy, become crucial for maintaining meaningful discourse.

Regulatory frameworks must evolve to address these challenges, potentially including guidelines for AI deployment in public discourse, transparency requirements for AI-generated content, and provisions ensuring equal access to AI communication tools. However, such measures must balance the need to protect authentic discourse with the potential benefits AI systems offer for enhancing public communication and understanding.

The future of democratic discourse likely lies in developing hybrid models that can harness AI's potential to support rational dialogue while mitigating its capacity for manipulation and coercion. This might involve creating new frameworks that acknowledge both human and artificial participants in public discourse, with clear rules of engagement that preserve the essential elements of Habermas' vision while adapting to technological realities.

Ultimately, the challenge before us is not merely technical but philosophical: how to preserve the possibility of genuine understanding and consensus-building in an era where the very nature of communication is being transformed by artificial intelligence. The resolution of this challenge will significantly influence the future of democratic discourse and public reason itself. As we navigate this transformation, maintaining awareness of both the opportunities and risks presented by AI systems becomes crucial for preserving the possibility of authentic democratic dialogue in the digital age.

Bibliography

Anthropic/Durmac, C. Measuring the Persuasiveness of Language Models. April 9 , 2024. https://www.anthropic.com/news/measuring-model-persuasiveness

Bai, H., Voelkel, J.G., Eichstaedt, j.C., Willer, R.: Artificial Intelligence Can Persuade Humans on Political Issues. OSF preprint (2023). https://doi.org/10.31219/osf.io/ stakv

Burtell, M., Woodside, T.: Artificial Influence: An Analysis Of AI-Driven Persuasion. arXiv preprint (2023). https://doi.org/10.48550/arXiv.2303.08721

Breum, Simon Martin, et al. "The persuasive power of large language models." Proceedings of the International AAAI Conference on Web and Social Media. Vol. 18. 2024

Cowen, A.S., Laukka, P., Elfenbein, H.A. et al. The primacy of categories in the recognition of 12 emotions in speech prosody across two cultures. Nat Hum Behav 3, 369–382 (2019). https://doi.org/10.1038/s41562-019-0533-6

Davidson, T.R., Veselovsky, V., Josifoski, M., Peyrard, M., Bosselut, A., Kosinski, M., West, R.: Evaluating Language Model Agency through Negotiations (2024)

Hackenburg, K., Margetts, H.: Evaluating the persuasive influence of political microtargeting with large language models. OSF preprint (2023). https://doi.org/10. 31219/osf.io/wnt8b

Händler, Thorsten. "Balancing autonomy and alignment: a multi-dimensional taxonomy for autonomous LLM-powered multi-agent architectures." arXiv preprint arXiv:2310.03659 (2023).

Harari, N. (2024). Nexus: A Brief History of Information Networks from the Stone Age to AI.

Karinshak, E., Liu, S.X., Park, J.S., Hancock, J.T.: Working with ai to persuade: 21 Examining a large language model’s ability to generate pro-vaccination messages. Proceedings of the ACM on Human-Computer Interaction 7(CSCW1) (2023) https: //doi.org/10.1145/3579592

Khaneman, D. (2011). Thinking Fast and Slow.

Keltner, D., Sauter, D., Tracy, J. L., Wetchler, E., & Cowen, A. S. (2022). How emotions, relationships, and culture constitute each other: advances in social functionalist theory. Cognition and Emotion, 36(3), 388–401. https://doi.org/10.1080/02699931.2022.2047009

Keltner, D., Brooks, J. A., & Cowen, A. (2023). Semantic Space Theory: Data-Driven Insights Into Basic Emotions. Current Directions in Psychological Science, 32(3), 242-249. https://doi.org/10.1177/09637214221150511

Kissinger, Henry A., Eric Schmidt, and Craig Mundie. "War and Peace in the Age of Artificial Intelligence: What It Will Mean for the World When Machines Shape Strategy and Statecraft." Foreign Affairs, 18 Nov. 2024, www.foreignaffairs.com/print/node/1132405

Matz, S., Teeny, J., Vaid, S.S., Peters, H., Harari, G.M., Cerf, M.: The potential of generative ai for personalized persuasion at scale (2023) https://doi.org/10.31234/ osf.io/rn97c

Meta Fundamental AI Research Diplomacy Team (FAIR)†, et al. "Human-level play in the game of Diplomacy by combining language models with strategic reasoning." Science 378.6624 (2022): 1067-1074.

Minsky, Marvin (1986). The Society of Mind. New York: Simon & Schuster.

Ng, A. (2024, November 20). The Batch. https://www.deeplearning.ai/the-batch/issue-276/

National Security Commission on Artificial Intelligence (2024). Final Report. https://irp.fas.org/offdocs/ai-commission.pdf

OpenAI (2024, December 5). OpenAI o1 System Card. https://cdn.openai.com/o1-system-card-20241205.pdf

Palmer, A.K., Spirling, A.: Large Language Models Can Argue in Convincing and Novel Ways About Politics: Evidence from Experiments and Human Judgement. GitHub preprint (2023). https://github.com/ArthurSpirling/ LargeLanguageArguments/blob/main/Palmer Spirling LLM May 18 2023.pdf

Peters, H., Matz, S.: Large Language Models Can Infer Psychological Dispositions of Social Media Users. arXiv preprint (2023). https://doi.org/10.48550/arXiv.2309. 08631

Salvi, Francesco, et al. "On the conversational persuasiveness of large language models: A randomized controlled trial." arXiv preprint arXiv:2403.14380 (2024).

Simchon, A., Edwards, M., Lewandowsky, S.: The persuasive effects of political microtargeting in the age of generative artificial intelligence. PNAS Nexus 3(2), 035 (2024) https://doi.org/10.1093/pnasnexus/pgae035

Spitale, G., Biller-Andorno, N., Germani, F.: Ai model gpt-3 (dis)informs us better than humans. Science Advances 9(26), 1850 (2023) https://doi.org/10.1126/sciadv. Adh1850

Sponheim, C. (2023, October 6). The ELIZA Effect: Why We Love AI. https://www.nngroup.com/articles/eliza-effect-ai/.

Toulmin, S. (1953/2008). The Uses of Argument. https://catdir.loc.gov/catdir/samples/cam034/2003043502.pdf

Turing, A.M. Computing Machinery and Intelligence. Mind, New Series, Vol. 59, No. 236 (Oct., 1950), pp. 433-460

Zhou, Jingwen, et al. "A Taxonomy of Architecture Options for Foundation Model-based Agents: Analysis and Decision Model." arXiv preprint arXiv:2408.02920 (2024).

See Also

Personality Modeling for Persuasion of Misinformation using AI Agent

TO ADD:

There is evidence that content generated by general-purpose AI can be as persuasive as content generated by humans, at least under experimental settings. Recent work has measured the persuasiveness of general-purpose AI-generated political messages. Several studies have found that they can influence readers’ opinions of psychological experiments (309, 310, 311, 312, 313*), in a potentially durable fashion (314), though the generalisability to real-world contexts of these effects remains understudied. One study found that during debates, people were just as likely to agree with AI opponents as they were with human opponents (315), and more likely to be persuaded by the AI if the AI had access to personal information of the kind that one can find on social media accounts. Recent research also explores how general-purpose AI agents could influence user beliefs using more sophisticated techniques, including by creating and exploiting users’ emotional dependence, feeding their anxieties or anger, or threatening to expose information if users do not comply (316*). As general-purpose AI systems grow in capability, there is evidence that it will become easier to maliciously use them for deceptive or manipulative means, possibly even with higher effectiveness than skilled humans, and to encourage users to take actions that are against their own best interests (317, 318*). There is also some evidence that AI systems can use new AI-specific manipulation tactics that humans are especially vulnerable to because our defences against manipulation have been developed in response to other humans, not AIs (319). However, AI systems can also be instrumental in mitigating AI-powered persuasion. However, there is general debate regarding the impact of attempts to manipulate public opinion, whether using general-purpose AI or not. A systematic review of relevant empirical studies on fake news revealed that only eight out of the 99 reviewed studies attempted to measure direct impacts (320). These studies generally found that the spread and consumption of fake news were limited and highly concentrated among specific user groups, casting doubt on earlier hypotheses about its widespread influence on election outcomes. However, these findings do not necessarily indicate a high resilience to manipulation and persuasion attempts, and fake news can have broader or Risks 2.1 Risks from malicious use 69 unintended effects beyond its original purpose. Some studies suggest that while people can theoretically discern true information from false information, they often lack the incentive to do so, instead focusing on personal motivations or maximising engagement on social media (321, 322, 323). Regardless of the academic debate about effectiveness, public concern about AI-driven attempts to manipulate public opinion remains high – for example, a 2024 survey found that a majority of Americans across the political spectrum were highly concerned about AI being used to create fake information about election candidates (324). However, this finding may not be representative of global attitudes. In addition, there is no consensus on whether the generation of more realistic fake content at scale should be expected to lead to more effective manipulation campaigns, or whether the key bottleneck for such campaigns is distribution (see Figure 2.1). Some experts have argued that the main bottleneck for actors trying to have a large-scale impact with fake content is not generating the content, but distributing it at scale (325). Similarly, some research suggests that ‘cheapfakes’ (less sophisticated methods of manipulating audiovisual content that are not dependent on general-purpose AI use), might be as harmful as more sophisticated deepfakes (326). If true, this would support the hypothesis that the quality of fake content is currently less decisive for the success of a large-scale manipulation campaign than challenges around distributing that content to many users. Social media platforms can employ various techniques for reducing the reach of content likely to be of this nature. These techniques are often relatively effective, but there are concerns about their impact on free speech. They include human content moderation, labelling of potentially misleading content, and assessing source credibility. At the same time, research has shown for years that social media algorithms often prioritise engagement and virality over the accuracy or authenticity of content, which some researchers believe could aid the rapid spread of AI-generated content generated to manipulate public opinion (327). Researchers have also expressed broader concerns about the erosion of trust in the information environment as AI-generated content becomes more prevalent. Some researchers worry that as general-purpose AI capabilities grow and are increasingly used for generating and spreading messages at scale, be they accurate, intentionally false, or unintentionally false, people might come to generally distrust information more, which could pose serious problems for public deliberation. Malicious actors could exploit such a generalised loss of trust by denying the truth of real, unfavourable evidence, claiming that it is AI-generated – a phenomenon known as the 'liars’ dividend' (328, 329). However, society might also quickly adjust to AI-induced changes to the information environment. In this more optimistic scenario, people might adapt their shared norms for determining if a piece of information or source is credible or not. Society has adapted in this way to past technological changes, such as the introduction of traditional image editing software.

Since the publication of the Interim Report, some new insights have emerged regarding AI-generated content. One recent experimental study found that while people perceive AI-generated fake news as less accurate than human-generated fake news (by about 20%), they share both types at similar rates (approximately 12%), highlighting that fabricated content, whether AI-generated or human-generated, can easily go viral (330). In the experiment, nearly 99% of the study subjects failed to identify AI-generated fake news at least once, which the authors attributed to the ability of state-of-the-art large LLMs to mimic the style and content of reputable sources. New detection methods have successfully combined textual and visual analysis, addressing previous limitations of approaches using only one type of data such as only text or only images (331). Current techniques for identifying content generated by general-purpose AI are helpful but often easy to circumvent. Researchers have employed various methods to identify potential AI authorship (332, 333). ‘Content analysis’ techniques explore statistical properties of text, such as unusual character frequencies or inconsistent sentence length distributions, which deviate from patterns typically observed in human writing (334, 335, 336). ‘Linguistic analysis’ techniques examine stylistic elements, such as sentiment or named entity recognition, to uncover inconsistencies or unnatural language patterns indicative of AI generation (337, 338). Researchers can sometimes also detect AI-generated text by measuring how readable it is, as AI writing often shows unusual patterns compared to human writing (339). However, not all AI-generated content is fake news, and some research reveals an interesting bias in fake news detector tools: they tend to disproportionately classify LLM-generated content as fake news, even when it is truthful (340). A study of seven widely used AI content detectors identified another potential limitation of these Intention to distort public opinion Creating realistic synthetic content Creating realistic synthetic content at scale Distributing realistic synthetic content at scale Impact on individual belief change Societal impact Evidence Assessment Strong Evidence Moderate Evidence Limited Evidence Risks 2.1 Risks from malicious use 71 tools: they displayed a bias against non-native English writers, often misclassifying their work as AI-generated (341). Finally, AI researchers have also proposed other approaches for detecting AI-generated content, such as ‘watermarking’, in which an invisible signature identifies digital content as generated or altered by AI. Watermarking can help with the detection of AI-generated content, but can usually be circumvented by moderately sophisticated actors, as is discussed in 2.1.1. Harm to individuals through fake content. Early experiments demonstrate that human collaboration with AI can improve the detection of AI-generated text. This approach increased detection accuracy by 6.36% for non-experts and 12.76% for experts compared to individual efforts in a recent study (342). While purely human-based collaborative detection is likely not scalable for dealing with the vast amount of content generated daily, the research still remains valuable. For example, data from human collaboration can be used to train and improve AI detection systems. Moreover, for particularly challenging or high-stakes content, human collaboration can supplement AI detection. However, the long-term effect of such collaborative efforts on public resilience to manipulation attempts remains to be seen, and further studies are required to validate these initial findings. For policymakers working on reducing the risk of AI-aided manipulation of public opinion, there are several challenges. These include mitigation attempts with protection of free speech (343, 344) and determining appropriate legal liability frameworks (345, 346, 347). Policymakers also face uncertainty about the actual impact of manipulation campaigns, given mixed evidence on their effectiveness and limited data on their prevalence (see Figure 2.1). Another challenge is the ongoing evolution of AI, adaptive user behaviours, and continuous improvements in AI systems, which creates a perpetual cycle of adaptation and counter-adaptation between detection methods and AI-generated content.