North Carolina AI Education Guidance Release

"A" grade in my mind; it just needs to anticipate the near future a bit more

See also: West Virginia Guidance review

TLDR

The Guidance

* North Carolina has arguably issued the best AI guidance to date (IMHO), and I explain why below (my highlighted version is here). This is in no way a knock on the other guidance reports, as I think they offer a lot of very important and essential guidance. I just really like how NC packages it and the emphasis it puts on certain things. If a state asked me to write a guidance report, I would have written something similar, and I’ve said almost everything in here in different places over the last 9 months (blog, book, report). In our report with Dr. Sabba Quidwai, Humanity Amplified: The Fusion of Deep Learning and Human Insight to Shape the Future of Innovation, we unpack every idea in the NC guidance in detail. If you are looking for more information on what is in the NC report, I encourage you to check it out.

I also offer a couple of additional suggestions, which I expand on at the bottom:

* Emerging, and potentially non-generative, AIs will present a significant challenge to a couple of the recommendations.

* Guidance should include recommendations for what more advanced systems mean for schools. Start-ups, for example, are being asked what their value proposition is in a world of AGI. Schools need to think about this question too, not because they lack a value proposition in that world, but because they need to figure out how to realize it. Continuing to approach learning and assessment beyond K-6 the way schools did last year offers substantially less value.

Many AI scientists consider AGI to be at least five years away, but schools often plan five years out, and even if we never get to AGI, the next few years will bring some incredible AI capabilities that make AI competitive with human intelligence. We are already starting to see initial inklings of some of these advances, and I think the guidance documents being issued now should bring them to the attention of schools. If schools adapt only to generative AI capabilities, they will inevitably fall behind. I cover some of the broader trends related to AI in education in this podcast.

[P.S. If you think some of this is “hype,” remember that AI scientists were incredibly skeptical that AI could ever mimic human language, and the advances in AI over the last year have been incredible. As we explain in our paper, AI capabilities are currently growing exponentially, and many of the world’s leading AI scientists think both that AGI is possible within 5 years and that, even if it isn’t possible in that time frame, getting anywhere in the ballpark of it will change the world.]

North Carolina Generative AI Guidance

These are what I see as the key parts of the guidance:

First, NC advocates for integrating AI into education, something I’ve been arguing for for over a year. In some areas of education, this is controversial, so it’s nice to have a state take a strong approach toward integration.

Second, NC recognizes the importance of removing bans, as it is critical for schools, especially public schools, to provide opportunities for students to learn how to use this technology. This is what motivated me to get so involved in this area in the first place, and it becomes more apparent to me every day as I work both with students who are effectively blocked from the technology and with students who are using it. There is a big difference in the quality of their work.

This is something I’ve been repeatedly warning about since last spring.

Third, North Carolina understands the importance of developing the 5C deep learning skills as part of efforts to prepare students for the AI world. We cover this in depth in our popular report, and we have additional chapters on how AI can be used to augment these skills.

NC references the World Economic Forum’s Future of Jobs report, and you will find every future of work report (Microsoft, UK, McKinsey, Goldman Sachs, etc.) making essentially the same points.

The reality is that AIs can do a lot of knowledge work, and they are only getting better at it. Students who succeed in future jobs will be individuals who can work with technology and other people, using the technology to do most of the heavy lifting. And this isn’t just about the future. Industry constantly says that both high school and college graduates lack these essential skills and that they will be needed in the future. As Jim Nielsen points out, people want to interact with other humans: it’s a fundamental part of being human.

Most importantly to me :), it even suggests using AI as a student’s debate partner and using debates to introduce AI to the school and the community.

Fourth, North Carolina recognizes the importance of AI literacy.

The world of 2027 will be completely different than the world of 2024. The world of 2037 is almost unimaginable (see chapter 2 of our report).

Students need to understand this world; the presence of “AI Girlfriends” in the ChatGPT store makes this even more obvious. We spend a lot of time in school educating students about the past; we also need to educate them about the immediate future.

We cover the need for AI literacy and the specific things students need it for in great detail in Chapter 3 of our report.

We cover practical implementation considerations, including school-specific guidance, in Chapter 8.

Fifth, they understand that AI detectors have very limited utility. 🙂

Sixth, NC provides practical suggestions for integrating AI into your school, starting with building awareness.

Seventh, NC hits all the major highlights: cyber and privacy issues, relevant laws, how to prompt, and strong uses for both teachers (lesson plans, vocabulary lists, quizzes) and students. On quizzes, a new study claims large language models can generate quizzes as well as or better than teachers. The ideas they cover include most of the ones discussed in the literature.

Before I end, I do want to raise one issue, not because I disagree with them in principle but because I think it is going to be hard to sustain in practice.

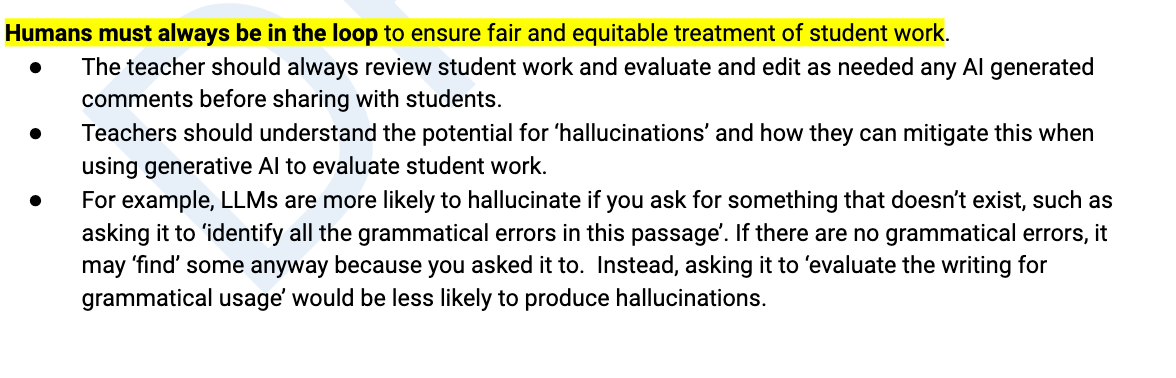

It’s basically the popular idea that humans remain in control, and we see it reflected throughout the report.

There are a couple of ways AIs will present a significant challenge to these ideas in the (near) future.

First, AIs are going to learn to reason (they arguably already can do this a bit) and plan, and when they do, they will become autonomous agents. There is a debate about how long that might take, and there is a very real debate about whether we will need new discoveries and even different models (objective-driven world models, for example) to get there, but it’s nearly indisputable that it will happen, and we are arguably already seeing demonstrations of reasoning ability (covered in chapter 2 of our report). To continue the analogy, we will soon have e-bikes that can make their own decisions and decide to just go for a ride.

We can try to keep that level of AI out of schools, but, at this point, we all understand that is impossible. Students will inevitably, probably in the very near future, work with AIs that can reason, plan, and act autonomously.

Second, AIs will start to present, for better or worse, challenges to assessment and to the role of humans remaining in control (which is often how “human in the loop” is defined). While humans currently outperform AIs at the fact-checking side of assessment (at least compared with common LLMs), AIs that are capable of analyzing larger sets of data will arguably be able to offer more consistent scoring of written work across buildings, districts, and states. They may even end up being less biased than human scorers. When human assessors are challenged by AI results that are more consistent across districts and potentially less biased, interesting conversations will emerge. The report does acknowledge this.

Of course, we, as humans, can decide to always be in control (debates about AI “taking over” aside), but once AI gets better than us at doing certain things, we may want to default to the AIs.

Both of these points reinforce the idea I put at the top in the TLDR: more advanced AI capabilities are coming or are nearly here, and schools need to think beyond generative AI, especially since schools move much, much more slowly than the technology, which is being advanced by companies literally trying to move as fast as they possibly can.

In conclusion, it is important to consider all of the guidance documents, and the others also offer great food for thought, but any state that started by replicating this guidance would be off to a great start!