Beyond Policing Text-to-Text: A Call for an Apollo (Advancing Preparation Opportunities for Lifelong Learning Outcomes) Project to Prepare Students for a World of AI and Other Advanced Technologies

How are we supporting the next generation? Allie Miller

*Non-AI Challenges Facing Education

*AI-Related Challenges Facing Education

*AI is Not Just More “EdTech”

*Radical AI Acceleration in 2025+

*AI & Related Advances in Robotics

*AI and the Future of “Work”

*World-Wide Changes are Coming Fast and Furious, and They Aren’t Just About AI

*Our Students’ Worries

*Restrictive Approaches to Managing AI in Education Will Fail to Protect Students and the Academy

*Rising to the Challenge: Helping Our Students Prepare for the AI World

*Actions for Schools to Take

*Professional Development

*Curricular Changes

*Thinking Outside the Box

*An Apollo Project for Education

*Leadership

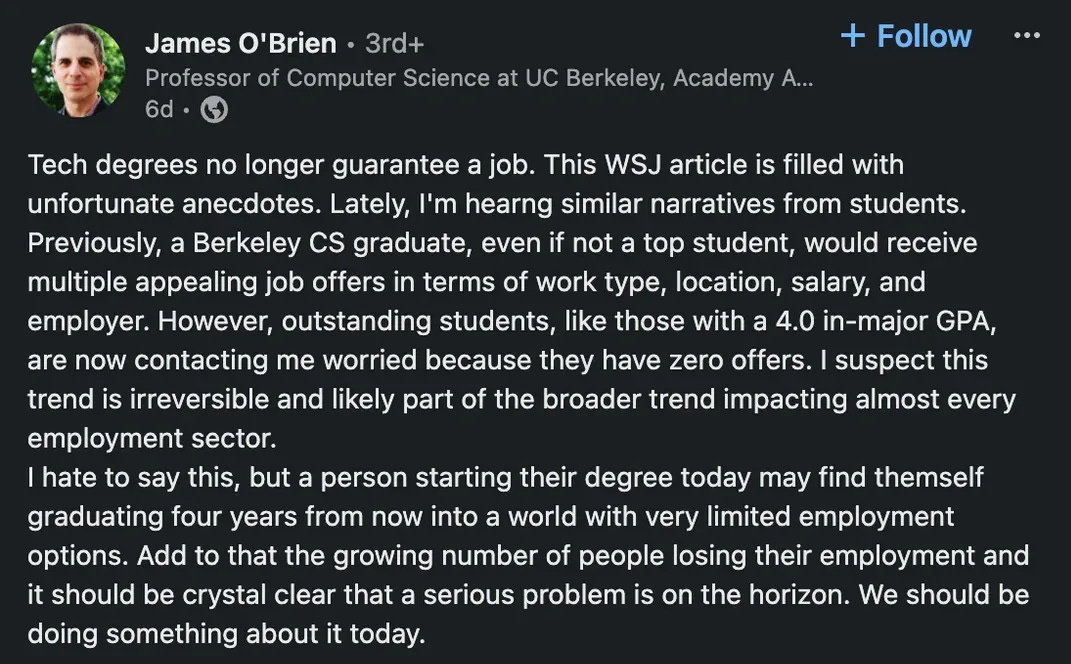

This year has seen increasing evidence of new graduates being unable to find jobs due to the AI-driven skills and economic landscapes. This drives the question of not just how students learn, but what they learn. Economic pressure from the job market down will force graduates, and eventually the institutions that produce them, to face the new realities of what businesses want in workers. The students will need to adapt first, via any means available to upskill, and institutions will need to follow.

—Dr. Nisha Talagala, PhD CS Berkeley, founder of the AIClub, A NAVI Education partner

(A)nyone who knows me, knows I think we live in monumental times. AI changes everything, with intelligence on tap. I think the probability (as nothing is certain) of this having a massive impact on economies, politics and world affairs is huge…

I suspect we’re in for a hell of a year….I’ve never seen anything like this in my 40 years in learning technology – so hang on to your hats.

— Donald Clark

I think 2025 will be critical…I think it's really important we internalize the urgency of this moment and the need to move faster…The stakes are high. These are disruptive moments.

—Sundar Pichai, CEO, Google

After the hiatus of Covid, AI and other disruptors hit humanity and made yet more cases for change in Education’s What and How. The lack of urgency among educators is deeply concerning, as is the lack of preparation of schools as centers of stability.

— Charles Fadel, Center for Curriculum Reform

Introduction

Soon after ChatGPT’s public release on November 30, 2022 (then running GPT-3.5), education immediately struggled with students using AI to write all or large portions of essays and research papers, throwing one of the most common means of assessment into doubt. Since then, a substantial portion of the educational system has invested millions of person-hours into little more than figuring out how to prevent this. Meanwhile, the technology has blown past them: it can now produce entirely accurate bibliographies, hallucinate significantly less, solve International Math Olympiad problems at a level one point short of a gold medal, and write in more human-like patterns, including in the style of the author/“prompter.”

These large language models (LLMs) then became multimodal large language models (MLLMs) and started developing at least rudimentary reasoning abilities. These abilities allow them, at least in OpenAI’s o3, to move beyond complete reliance on next-token (next-word) prediction by generating a variety of potential answers and choosing the best among them.

These advances now allow the models to act as agents (Google Whitepaper) that, when given a task, can choose among a set of tools and execute it. Although still at an early stage, these agents can do many things, from persuading a human interlocutor better than a fellow human can to writing a 14-page research paper with 74 citations and no hallucinations (Google Deep Research). We have now started to move beyond text-to-text and multimodal output to text-to-action models, known as large action models, that can control and operate applications on one’s desktop when given permission.

Despite these gains, the most advanced applications and training related to AI in most schools (K-16+) focus on AI as an inert tool that we can decide to employ or not employ to teach our existing curriculum. But AI is much more than this. AI is a dynamic technology, perhaps even an “alien intelligence” (Gawdat, Suleyman), that is capable of doing a lot of the knowledge work we are training students to do, and it is even capable of teaching our students (the question of how well aside for the moment). This will change education, including instruction and assessment, far more than an understanding of the technology as merely a next-word predictor would suggest. And AI is just getting started.

While this does not mean that everything we teach our students must change, it does mean that some of the “whats” and “hows” of our educational system will need to be reconsidered and redrawn, just as they were when we transitioned from the agricultural to the industrial era.

Failure to change will create what Carlo Iacono describes as “a crisis of relevance” in education. This isn’t just because schools will fail to help students thrive in a new industrial era, but also because they are facing compounding challenges related to finances, politics, and even their ability to get more than half of students to demonstrate proficiency on the measures of educational progress the system designed for its own assessment.

Justified or not, for these reasons many see the public K-12 education system as a target for disruption: it cannot meet even its own metrics despite growing costs. At the university level, many question the value of taking on tens (often hundreds) of thousands of dollars in student loans to fund an education that isn’t leaving them ready for employment. AI will alter the significance of what we teach our students and how we teach it, creating not only its own exigence but also a vehicle for advancing the disruptive agendas of others. And, if we are being honest, neither K-12 nor higher education is responding very well to the AI exigence.

In the future (really even today), students’ economic success will be determined by how well they can work with AI and how well they can deploy their own initiative, creativity, critical thinking, and collaborative skills to actualize a dream. This will require them to be lifelong learners who continually develop their skills but are also able to rapidly learn new content that will help them thrive. For example, they may have to learn how to use decentralized web technologies to execute contracts (“smart contracts”). With McKinsey predicting that “we’ll experience more technological progress in the coming decade than we did in the preceding 100 years put together,” rapid, continual learning will become essential.

Content knowledge will be both less and more relevant. It will become less important for more workers, as AI systems will not only be a repository of all of that knowledge but, unlike our libraries and the internet, will be able to act on that knowledge to help inform decisions, create, and interact with the world in both digital and physical forms (robots). It will be more relevant because humans will have to continually learn about new developments, such as smart contracts, in order to thrive.

Human success will not come from competing with those systems but from using them to maximize our potential. It will become essential for humans to both learn to learn from AI systems and to work with AI systems.

Helping students prepare for a world where they will use AI as part of a lifelong learning and actualization journey is a challenge for many, if not most, schools. As we all know, many schools initially banned AI, both out of concern for academic “integrity” and for what many believe are legitimate privacy and security concerns. In some schools, these bans persist. And now, even where bans have been lifted, many students are being taught to compete against AI: to look for places where AI makes mistakes (“hallucinations”), where it writes in a generic voice, or where it says or does something inappropriate. Such an approach may work for another academic year, but we are already starting to see systems with low (Perplexity.ai) to trivial or nonexistent (Gemini Deep Research) hallucination rates. As memory expands, chatbots will learn our writing style, helping us write with greater accuracy and without grammar and spelling errors. Even 9-year-olds are using Grammarly. When (or if) they go to college in a decade, will many universities still ban it?

Trying to compete against systems that are trained on all the world’s knowledge, fine-tuned on important parts of it, and instructed with rule-based systems, and that have infinite memory, can reason, and will eventually plan, is not a recipe for success. To remain relevant, educational institutions at all levels will need to think hard about how best to teach students in an AI world, and this thinking needs to include what they teach.

Education will also need to push its boundaries locally. Leaders who wait for directives from state boards of education and certifying bodies will only push the institutions they are responsible for toward irrelevance, at least beyond K-8. The timeline for decision-making through normal procedures is too long, not only in secondary education but even in many higher education departments, where considering and implementing a change can take a year. Entire institutions such as Alpha School/Unbound Academy and technologies such as Professors have been created in less time than it has taken many K-16+ institutions to provide even rudimentary guidance on AI. Stepful is taking aim at the community college and worker training markets, offering training at a lower cost and with a higher completion rate.

This puts schools in a very difficult situation. As knowledge of AI’s power and impact spreads, students and families will find “any means available to upskill.” For those used to supplementing their education with evening and weekend academic programs, this will be a natural extension of what they are already doing. Those who rely on traditional schooling to achieve all or nearly all of their educational objectives will be left behind, essentially being educated to succeed in a different world.

In most cases, it is time for significant change.

In the rest of this essay, I will discuss the many challenges facing education, the critical importance of understanding AI as more than “edtech,” the radical acceleration of AI and robotics likely to come in 2025 and the near future, the impact of these new technologies on the future of work, compounding domestic and international challenges, and students’ worries about their futures.

I will conclude with the case for developing Education Apollo Projects that will, hopefully, work to prepare all students for the “AI World” (Gates). This case includes not only a call for a large program, in collaboration with government, nonprofits, and all parts of the education system, along the lines of the original Apollo (and Manhattan) projects, but also a focus on Advancing Preparation Opportunities for Lifelong Learning Outcomes for today’s students.

We can no longer conceive of education as training students in a protected space (both from AI and other influences) and designed to reach a moment highlighted by a paper diploma, but rather as laying the foundation for preparing them for a life-long journey of learning and action. With AI collaboration, this is possible.

Hopefully, some individuals will step up to lead such an initiative.

Non-AI Challenges Facing Education

Even before the arrival of AI, education faced several challenges, all of which have been magnified by the pandemic.

(1) Inequality and inequity. Inequality in education refers to the uneven distribution of resources like funding, teachers, and technology, which creates academic disparities across socioeconomic, racial, and geographic lines. However, inequity extends beyond this to examine the systemic biases and structural barriers that create and maintain these disparities, particularly those affecting marginalized groups. Both grew during and after the pandemic.

(2) Results. Recent assessments paint a concerning picture of U.S. student academic performance in 2023, with multiple data sources indicating minimal recovery from pandemic-related learning losses.

Major interim assessments from NWEA MAP Growth, which test millions of students throughout the year, showed stagnation in math and possible declines in English Language Arts.

State-level data analyzed by AssessmentHQ.org from 39 states and D.C. revealed only slightly better results, with a mere one-percentage-point median increase in 8th-grade math proficiency and similarly modest gains across other subjects. At the same time, 8th-grade English Language Arts actually declined by 0.2 points. While ten states, including Massachusetts, Mississippi, and Virginia, have consistently improved 8th-grade math, many others remain stalled at post-pandemic lows. The definitive national picture will emerge with the release of NAEP results in early 2025, though given current trends, expectations for significant improvement should be modest.

In 2022, only 32 percent of fourth-grade students performed at or above the NAEP Proficient level in reading, lower than the 34 percent in 2019 but higher than the 28 percent in 1998. In 2022, 35 percent of fourth-grade students performed at or above the NAEP Proficient level in mathematics, lower than the 40 percent in 2019 but higher than the 22 percent in 2000.

There are a few conclusions that can be drawn from this:

(a) the educational system is completely failing our students; or

(b) the standards are a poor measurement of what students actually need to learn; or

(c) it’s OK for society if only this percentage of students reach this proficiency.

None of these is particularly satisfying.

(3) Lack of teachers. According to various sources, there are at least 49,000 vacant teacher positions in the U.S. for the 2024-25 school year, with some estimates suggesting the number could be as high as 55,000 or even 100,000. Additionally, approximately 400,000 teaching positions are filled by underqualified teachers, about 10% of the total teacher population. Globally, there is a shortage of 44 million teachers (Fadel, 2024, p. 13).

(4) Dissatisfaction and disengagement from students. According to a 2021 survey by the National Center for Education Statistics, only 47% of students reported feeling engaged in their learning, a sharp decline from pre-pandemic levels. Over 80% of teachers reported a decline in student motivation and engagement since the onset of the pandemic.

This compounds the teacher shortage issue, as teachers do not want to teach disengaged students.

(5) Funding loss. The Elementary and Secondary School Emergency Relief (ESSER) Fund was established to assist K-12 public schools in the US in response to the COVID-19 pandemic. ESSER funding has provided approximately $190 billion to help schools address various challenges brought about by the crisis. No new funding has been appropriated, however, and schools must spend the funds by January 28, 2025, unless they receive an extension to March 30, 2026. This deadline marks the end of the significant financial support provided by the federal government, leading to what is known as the "ESSER funding cliff" or "fiscal cliff," where schools will no longer have access to this substantial financial support.

Under a second Trump administration, there is a likelihood of reduced federal financial support for public schools. Trump and his advisers have proposed cutting, redirecting, restructuring, and even eliminating key streams of funding for K-12 schools, including reducing investments in programs like Title I, Title III, and the Individuals with Disabilities Education Act (IDEA). The Republican Party platform emphasizes that states and local districts should be the primary drivers of school policy and operations. This would mean less federal oversight and funding, with more responsibility placed on states and local districts. Additionally, Trump's administration has expressed interest in promoting school choice through tax credits for private school scholarships and reducing regulations to foster competition in the education landscape, potentially diverting funds away from traditional public schools. This shift in policy priorities, combined with the potential for legal challenges and historical precedent of budget proposals calling for significant cuts, suggests a future with less federal financial support for public education (Schultz; Lieberman).

This leaves schools without resources to face not only AI-independent challenges but also the existing challenges brought on by AI and the more significant ones still to come.

Similarly, universities are facing a demographic cliff and questions related to pursuing the traditional four-year degree.

AI-Related Challenges Facing Education

In addition to the challenges above, schools now face two challenges related to AI, though the second is big enough to be broken down further.

These take the form of (6) challenges to assessment, (7) questions about what students should be taught to prepare for an AI world, and (8) the ability of AI to provide at least a significant portion of the instruction now provided by humans. All of these have been problematized by generative AI (GAI), and in many cases they have been aggravated by schools’ knee-jerk reaction to AI: ban it.

(6) AI presents significant challenges to assessment because GAI can complete most of the assessments students are given, particularly papers.

The growing use of generative AI tools built on large language models (LLMs) calls the sustainability of traditional assessment practices into question. Tools like OpenAI's ChatGPT can generate eloquent essays on any topic and in any language, write code in various programming languages, and ace most standardized tests, all within seconds.

Kizilcec et al., 2024

According to a recent study of 395 students aged 13 to 25 in France and Italy, most students are integrating LLMs into their educational routines.

Other studies support similar conclusions.

While 41% of students had used AI in ways explicitly banned, many more students (59%) reported ambiguous use cases. Students overestimated peer cheating, and this predicted their own cheating, as did general experience with and excitement about AI. Meanwhile, 11% of students reported false accusations, with first-generation students possibly at a higher rate. Pragmatic views about career and inequality may be affecting behaviors. Men consistently reported more involvement with AI than women.

While most students utilize LLMs constructively, the lack of systematic proofreading and critical evaluation among younger users suggests potential risks to cognitive skills development, including critical thinking and foundational knowledge.

OpenAI has made its collaborative Canvas editor that “coauthors” papers available to all web users, dropping the previous Plus subscription requirement. And while some universities have banned Grammarly, many elementary school students (in my experience) use it to proof grammar and spelling.

Anthropic has made it easy for users to upload samples of their writing so the output mimics their writing style. OpenAI has launched a Guide to help students with their writing.

As AI writing becomes more human and individualized, AI-writing detectors cannot offer a solution. Calls for “integrity” are also not a solution, because (1) AI is now everywhere, including in nearly every interaction (phones, eyeglasses (Meta, Baidu), contacts, word processing programs and Google Docs, browsers, operating systems), so there is no way for students to avoid it; (2) we do not expect this level of integrity anywhere else in education (tests are not ordinarily “take-home, closed-book”); and (3) students, who know they will use AI at work and in everyday life, are not sold on the idea that using it violates “integrity.”

Beyond writing, an AI agent can complete a fully asynchronous course, a format that has become common in universities (Wiley, 2024).

A lot of incredible intellectual effort has gone into providing guidance on when students can and cannot use AI, and many schools have adopted such guidance. However, when AI is ambient (all around us in our operating systems, word processors, phones, and even our glasses), it raises the question of what it even means to use or not use AI.

How do we meaningfully restrict AI use when models can watch our screens and guide us through complex tasks in real time? What does "AI-assisted" even mean when the technology is ambient in our digital environments?

Consider Anthropic's recent demonstration of Claude controlling computer interfaces: not just responding to prompts, but actively navigating systems and executing complex tasks. Or look at Google's Gemini 2.0, whose real-time visual analysis capabilities allow it to watch and understand screen activity, providing immediate guidance and suggestions. These aren't just incremental advances; they fundamentally break our traditional frameworks around AI use and control. Yet our institutional responses remain locked in an era of simple prompt and response, failing to grasp that we're no longer dealing with just a tool, but with the emergence of a new cognitive environment (Iacono).

(7) The question of what students need to learn has been around for a while, but in a world where machines already operate (and increasingly will operate) in the ballpark of human intelligence, the lid on what students should learn has been blown off.

The idea that students should learn the same things now that machines approach human-level intelligence as they did before does not make a lot of sense; it’s like continuing to teach students to farm when countries instituted mass schooling in the 20th century in response to the industrial era. For example, are we really going to continue to push students to learn multiple languages when any speaker can be instantly translated in their earbuds (Swiss Educators Rethink foreign language instruction)? Are we going to continue to prioritize learning calculus over statistics?

(8) Who or what is teaching?

Many discussions have emerged about AI tutors and teachers (AIT&T), some of which have already started to come online (tutors, teachers). At a minimum, simple technologies such as NotebookLM allow educators to build simple teaching assistants for their students. ElevenLabs has a similar product with more voices and languages, and it has also recently launched Projects, which allows easy long-form content editing.

Will these take off? In considering that question, I think it is important to consider the different roles of educators (K-16+).

Conveyor of Knowledge (COK). One critical role of any educator is sharing content knowledge with students.

Learning Navigator (LN): This focuses on the how of teaching. An LN charts careful routes through complex material, anticipating conceptual obstacles and planning detours around common misconceptions. They're attuned to signs that students might be veering off course and can skillfully redirect them. More experienced teachers will be better navigators, as they have experience navigating the process.

Coaches (CH): These educators build deep, trust-based relationships by understanding each student's unique background and personal circumstances. This allows them to provide individualized guidance that extends beyond academic content. They excel at identifying and nurturing students' intrinsic motivation by connecting learning to personal interests and long-term goals. They also celebrate progress and reframe challenges as growth opportunities.

Child Care Provider (CC). The role of teachers as de facto childcare providers, particularly in early education, reflects a complex reality of our educational system that often goes unacknowledged. This dynamic emerges from the intersection of societal needs, economic pressures, and the evolution of public education in modern society. In many communities, schools serve as the primary supervised environment where working parents can reliably leave their children during the workday. This childcare function has become increasingly critical as more households require two incomes to maintain financial stability, or in single-parent families where the parent must work full-time.

Which of these roles can AIT&T fill?

Conveyor of Knowledge (COK). AIT&T aren’t far from being able to share knowledge accurately. While many point to common hallucinations in generic chatbots, models can be trained on domain-specific data, often using carefully culled synthetic data to increase accuracy. Combined with lowering the “temperature” (a setting that controls how random, or “creative,” the response is) and improved Retrieval-Augmented Generation (RAG), this lets experts get even current architectures to display trivial hallucination rates. Even without these measures, OpenAI’s o3 demo showed its general model achieving higher accuracy than PhD-level human experts on scientific questions.
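To make the “temperature” setting concrete, here is a minimal Python sketch of temperature-scaled softmax, the standard way a language model’s raw scores (logits) for candidate next tokens are turned into a probability distribution before sampling. The three scores are made-up numbers for illustration, not output from any real model, and the function name is hypothetical; production systems apply the same idea across vocabularies of many thousands of tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature.

    Dividing by a small temperature exaggerates the gaps between scores,
    so probability concentrates on the top candidate; a large temperature
    shrinks the gaps and spreads probability more evenly.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.1]
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At a temperature of 0.2, nearly all of the probability lands on the top-scoring candidate, so the output becomes more predictable, which is why lowering temperature is a common lever for factual, domain-specific uses. It does not, by itself, make the top-ranked answer correct.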

Recently, Diffbot released a model that, when used for a specific purpose, can essentially replace its training data with data you supply.

This should eliminate or nearly eliminate hallucinations in specific contexts.
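The grounding idea behind Retrieval-Augmented Generation (RAG), mentioned above, can also be sketched in a few lines: before the model answers, the system looks up relevant passages from a trusted collection and instructs the model to answer only from them. This is a toy under stated assumptions: it ranks passages by simple word overlap rather than the embedding-based search production systems typically use, the function names are hypothetical, the sample passages are drawn from facts cited earlier in this essay, and it stops at building the prompt because no particular model API is assumed.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=1):
    """Return the k documents sharing the most words with the question.

    A toy stand-in for the vector search a real RAG pipeline would use.
    """
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question, documents, k=1):
    """Wrap the retrieved passages and the question in an answer-from-context instruction."""
    context = "\n".join(retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical trusted collection.
docs = [
    "ESSER funds must be spent by January 28, 2025, unless extended to March 30, 2026.",
    "NAEP reported that 32 percent of fourth graders were proficient in reading in 2022.",
]
print(build_grounded_prompt("When must ESSER funds be spent?", docs))
```

The resulting prompt would then be sent to whatever model the school or vendor uses. Grounding narrows what the model draws on, but the quality of the trusted collection and of the retrieval step still determines how reliable the answers are.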

AI Godfather Yann LeCun recently argued that AI systems will become a “universal knowledge platform, a repository of all human knowledge” (LeCun, 2024). It will not be long before these become effective COKs, especially relative to the cost of humans sharing the knowledge.

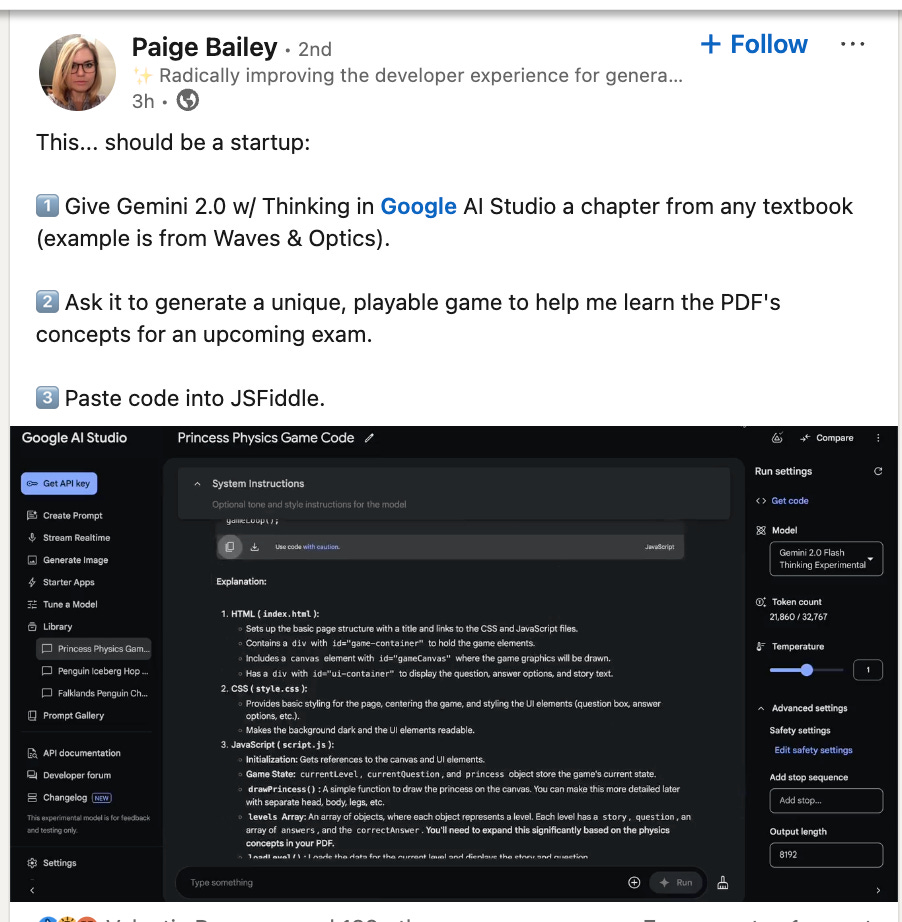

Learning Navigator (LN). These systems are arguably making more progress as conveyors of content than as LNs, but some are being trained to teach well. One regularly touted example is Synthesis, which, at least based on anecdotal evidence, is very effective at teaching math through games and other engaging approaches.

Now, it is easy to create such games using large, general AI models.

Students who learn how to use AI tutors well will be Prepared with Opportunities for Lifelong Learning Outcomes.

The private Alpha School (also called “Unbound Academy”) claims it can teach content with AIT&T more effectively than human teachers. The school even makes the bold claim of delivering instruction at twice the conventional teaching speed, primarily through concentrated two-hour morning sessions. And since AI tracks each student’s progress in real time, adjusting the curriculum’s difficulty and focus based on individual needs, it’s possible that it could be even more effective than teachers who do not have time to teach each student individually.

Success in this model is primarily measured through standardized test performance, with the school proudly advertising its 90th percentile NWEA rankings. Additionally, the model explicitly emphasizes preparing students for success in the digital economy through entrepreneurship training, which occurs through human-led interaction in the afternoons.

Perhaps the most dramatic aspect of this transformation is the complete removal of traditional teachers from these content-focused sessions. Instead, they are replaced by "guides" and "evangelists" who are paid $50/hour. Unlike traditionally certified teachers, these guides must possess “demonstrated expertise in social media management, content creation, and audience engagement.” The financial structure of this educational model positions it firmly in the premium market segment, with annual tuition ranging from $25,000 to $40,000.

The educational philosophy underpinning this model reveals several core beliefs about the future of learning. The system operates on the premise that academic knowledge can be effectively transferred through AI interaction and that a combination of AI-student and guide-student relationships can successfully replace traditional teacher-student relationships.

You can read more in the company’s White Paper and in this Yahoo News article.

The model has its advocates and detractors, but after giving it a lot of thought, I believe it has a lot of potential. First, a key test of a school's success has been whether it can get students to pass standardized tests based on a standardized curriculum. As I said two years ago :), it will be easy for an AI to do that. If we are going to judge schools and educational gains based on standardized tests, what's wrong with this approach? Second, students are also learning entrepreneurship and collaboration skills in the afternoon. These are *essential* skills. So, they are actually getting more. Third, students still get human interaction with other students and their "guides." The guides aren't traditionally certified teachers, but they are humans who have other skills (such as building social media followings). Certified teachers don't necessarily have these skills, and many don't want them or think AI doesn’t belong in the classroom, so I'm not sure why they are the most qualified for the job here. AI changes the qualifications needed for human teachers.

And even if students learn less content from AIs, why would this matter? Students learn only a percentage of what they are taught, and a majority of students in the US do not learn enough to reach proficiency on the NAEP.

Schools cannot refuse to fully adapt to the reality of AI and then complain when these alternative educational models spread outside the traditional educational system.

Coaches (CH). The role of the coach in a world of education infused with AI is uncertain. As students form greater bonds with AIs (many spend hours online interacting with them), will we rely more on AIs to motivate students? Will humans perform more of the “guide”/coach role they do in the Alpha School model?

Child Care Provider (CC). It is unlikely that AI systems can fulfill this role.

AIT&T will perform different roles in different educational models, from providing the majority of instruction to teaching for parts of the day to being supportive tutors. There will not be one single model of how AI interacts with the educational system.

Regardless, as Jenni Haymann notes, its expanding role does bring up critical questions:

(W)hat value do teachers bring to learning right now?

Can they articulate it?

Can institutions articulate it?

How might faculty help re-focus the purpose of education in partnership with learners?

How indeed?

The answer to these questions will come in the context of an imperfect system. Will an expanded role for AI add value to a system facing financial challenges, teacher retention issues, and student engagement issues? Will its presence help or hinder efforts to push more students over the 50% hurdle for educational progress?

In a world focused on Advancing Preparation Opportunities for Lifelong Learning Outcomes, the Alpha School Model clearly articulates the value teachers bring to the table. It helps re-focus the purpose of education on developing life-long learners who are able to learn new content from AIs when needed and also have the collaborative and entrepreneurial skills they need to succeed.

AI is Not Just More “EdTech”

As I’ve said since soon after the release of ChatGPT, (generative) AI is not simply another EdTech product. It’s not a SmartBoard, and it’s not Quizlet.

How is it different?

(1) AI is interactive. Unlike traditional educational technologies like SmartBoards or Quizlet, generative AI systems like ChatGPT allow for dynamic, real-time interaction. A user can pose questions, ask for clarification, or request specific tasks, and the AI responds in a tailored manner, including by asking follow-up questions.

This interactivity creates a conversational learning experience that can address the unique needs of each student or teacher. Instead of being a static tool with predefined functions, AI acts more like a collaborator, capable of engaging in meaningful dialogue and responding to nuanced queries. Over time, generative AI can identify patterns, such as a user’s preferred writing style, knowledge level, or recurring themes in their questions. This adaptability enables the AI to provide increasingly relevant and accurate responses. For example, in an educational setting, it can offer simpler explanations for beginners or more complex analyses for advanced learners, making it a versatile tool for personalized education.

As AI’s memory expands in 2025, perhaps “infinitely,” its ability to interact uniquely with each user will only grow.

(2) We shape AI when we interact with it. Generative AI adapts to how users engage with it. Through the prompts and feedback users provide within a conversation (and, increasingly, through memory features that carry over between conversations), the AI tailors its responses to better align with the user’s intentions. For example, if a student consistently asks for examples in a specific field, the AI becomes more adept at providing relevant, detailed responses for that student. This malleability allows AI to become a personalized resource, reflecting the learning styles and priorities of its users. In this way, the user is not just a passive recipient but an active participant in shaping the AI's outputs.

(3) AI can act as a mentor or coach. Beyond providing information, generative AI can guide users in skill development, critical thinking, and problem-solving. It can simulate debate partners, provide constructive feedback on essays, or walk users through complex problem-solving processes step by step. This makes it more akin to a personal tutor than a static educational tool, supporting deeper engagement and growth.

(4) AI breaks traditional academic boundaries. Generative AI is not limited to predefined subjects or functions. It can support a vast array of disciplines, from STEM to the arts, and tackle cross-disciplinary questions with ease. Its flexibility means it’s not just a tool for rote learning but a catalyst for creative thinking, interdisciplinary exploration, and real-world problem-solving.

Let's say a high school student is working on a project about the impact of climate change on local bird populations. Here's how generative AI could support this interdisciplinary exploration:

Biology. The student could ask the AI to explain bird migration patterns and how temperature changes affect breeding cycles, getting detailed explanations of concepts like phenology and population dynamics.

Data Analysis. The AI could help analyze local temperature and bird count data, suggesting appropriate statistical methods and helping interpret trends.

Art & Visualization. The student could get help creating clear data visualizations or generating ideas for artistic representations of the findings, such as an infographic showing migration pattern changes.

Creative Writing. The AI could assist in crafting compelling narratives about the research findings, helping transform scientific observations into engaging stories that resonate with the community.

Local History. The student could explore how the local bird population has changed over decades, with the AI helping to connect historical records with current observations.

Policy Implications. The AI could help the student understand and articulate how their findings might inform local conservation policies and community action.

In this way, the AI becomes a collaborative partner that helps weave together multiple disciplines (science, mathematics, art, writing, history, and civic engagement) into a cohesive project that tackles a real-world environmental challenge. The student isn't just learning facts but developing critical thinking skills and discovering how different fields of knowledge meaningfully interconnect. Ordinarily, a single human teacher would not be able to help with all of these areas, but an AI could.

Since AI is different from other forms of technology, it can easily support Advancing Preparation Opportunities for Lifelong Learning Outcomes.

*Radical AI Acceleration in 2025+

Ubiquitous Application

There has certainly been no slowdown in AI usage. Recent data from Similarweb shows that ChatGPT hit a new milestone with 3.7 billion global visits in October, a 17.2% increase from the previous month and 115.9% year-over-year growth. The same data shows that NotebookLM usage has doubled.

Access to AIs such as ChatGPT is becoming easier. AI is now integrated into many of the products students use, including word processing programs, Grammarly, browsers, operating systems, eyeglasses, and phones.

While the models deployed “locally” (phones, eyeglasses) are currently weaker than the frontier models, the continued ability to “shrink” or distill the models means that models of increasing capacity will be accessible locally on these devices without an internet connection.

For example, following its debut at WWDC last June, Apple has added ChatGPT support to Apple Intelligence in iOS 18.2 for iPhones and iPads, and in macOS Sequoia for Macs with Apple silicon. ChatGPT now works directly with Siri and Writing Tools across Apple's operating systems. When Siri "thinks" ChatGPT would do a better job with a request, it automatically passes the request to OpenAI's system, but only if you've turned on the feature.

In December, OpenAI announced that ChatGPT's search features are now free for all registered users worldwide, dropping the previous paid-only restriction. The company says it has spent recent months improving search speed and reliability, particularly for mobile users. The update brings several new features, including built-in maps and voice search. Users can also now set ChatGPT as their default browser search engine.

AI is Speeding Up, Not Slowing Down

Beyond managing the existing requirements this ubiquitous technology has created for schools and potentially taking advantage of its teaching and learning capabilities, we are also facing a situation in which the technology will continue to improve, bringing it closer to human-level intelligence in all domains and abilities.

This will happen quickly. Jack Clark, cofounder of Anthropic, believes that “most people aren't ready for how fast things are about to move.”

It’s no surprise, given that the tech giants (Amazon, Google, Meta, and Microsoft) alone spent a staggering $185 billion on AI in 2024, according to a JPMorgan analysis. Venture capitalists alone invested an additional $56 billion in generative AI (GAI). Elon Musk’s xAI just raised another $6 billion. In 2025, Microsoft alone will invest $80 billion to build data centers globally, with total global investments expected to reach $2 trillion. An additional $600 billion is expected to be invested over the next 5 years.

Most recently, OpenAI previewed its o3 model, set to be released in February. The o3 model represents a significant advancement over its predecessor, o1, particularly on mathematical benchmarks, scientific knowledge, and reasoning.

AIME 2024. The o3 model achieved an impressive 96.7% accuracy on the American Invitational Mathematics Examination (AIME) 2024, missing only one question. In contrast, the o1 model, released just 3 months ago, scored 83.3%.

Epoch AI FrontierMath Benchmark. This benchmark is known for its difficulty, featuring exceptionally hard math problems that have never been published. The o3 model scored 25.2% on this test, significantly outperforming previous AI models, which struggled to exceed 2%.

o3’s progress on FrontierMath is so strong that Epoch AI is now running a competition to develop new, even more difficult math tests.

General Performance. The o3 model's performance on other benchmarks, such as coding and scientific reasoning, reflects its enhanced mathematical capabilities. For instance, on the SWE-bench Verified coding benchmark, o3 achieved 71.7% accuracy, compared to 48.9% for o1, indicating a broader improvement in logical reasoning.

ARC-AGI. While not strictly a mathematical benchmark, the ARC-AGI test measures an AI's ability to adapt to new tasks, which includes mathematical reasoning. The o3 model scored 75.7% on the Semi-Private Evaluation set under a $10k compute budget and 87.5% at high-compute configurations, showcasing its versatility in problem-solving.
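The AIME percentages above can be translated into whole questions. Assuming the 2024 evaluation covers both exams, AIME I and AIME II (15 questions each, 30 in total), a quick check:

```latex
\frac{29}{30} \approx 0.967 = 96.7\% \quad (\text{o3: one question missed}),
\qquad
\frac{25}{30} \approx 0.833 = 83.3\% \quad (\text{o1: five questions missed})
```

Under that assumption, the 96.7% figure matches the description of o3 missing only one question, and o1's 83.3% corresponds to five missed questions.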

This model was demonstrated only three months after o1 was released.

Even Melanie Mitchell, a frequent critic of LLM reasoning, says that the new model is quite amazing. For more, see her newsletter, AI: A Guide for Thinking Humans.

Unlike traditional chat models, whose behavior is shaped primarily by reinforcement learning from human feedback (RLHF), the “o” models are reportedly trained with reinforcement learning on well-defined goals and scenarios. This mirrors AlphaGo's training process, in which the system had a clear goal (to win the game) and refined its strategy through countless simulated matches until it achieved superhuman performance.

The approach proves especially effective for programming, mathematics, and the sciences, where solutions can be clearly verified as right or wrong. Rather than simply predicting the next word in a sequence, o3 learns to construct chains of thought that lead to correct solutions, which helps explain its exceptional performance on mathematical and coding benchmarks.
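To make the idea of a "verifiable" reward concrete, here is a toy sketch. OpenAI has not published o3's training recipe, so this is not its method; it only illustrates the general principle described above: when an answer can be checked automatically, a reward can be computed without a human judge. The function names (`extract_final_answer`, `verifiable_reward`) and the "Answer:" output format are hypothetical.

```python
import re
from typing import Optional


def extract_final_answer(chain_of_thought: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker (a hypothetical output format)."""
    matches = re.findall(r"Answer:\s*(.+)", chain_of_thought)
    return matches[-1].strip() if matches else None


def verifiable_reward(chain_of_thought: str, reference: str) -> float:
    """Binary reward: 1.0 if the final answer matches the known-correct reference, else 0.0."""
    answer = extract_final_answer(chain_of_thought)
    return 1.0 if answer == reference else 0.0


# Two hypothetical reasoning chains for the question "What is 17 * 24?"
candidates = [
    "17 * 24 = 17 * 20 + 17 * 4 = 340 + 68 = 408. Answer: 408",
    "17 * 24 is close to 17 * 25 = 425. Answer: 425",
]
rewards = [verifiable_reward(c, "408") for c in candidates]
print(rewards)  # [1.0, 0.0]
```

In a full reinforcement learning loop, reasoning chains that earn a reward of 1.0 would be reinforced and those earning 0.0 would not; that model-update step is deliberately left out of this sketch.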

For more on the significance of o3 and what it entails, see this video.

While o3 represents the cutting edge based on public knowledge of what is available, there have been other significant advances. Alibaba's (China) AI research team Qwen has unveiled QVQ-72B-Preview, a less advanced but notable open-source model that can analyze images and draw conclusions from them. Although it is still in the experimental phase, early tests show it is particularly good at visual reasoning tasks. Meanwhile, DeepSeek-R1-Lite-Preview outperforms OpenAI's o1-preview model on benchmarks like AIME and MATH, employing chain-of-thought reasoning to tackle complex problems while providing transparent step-by-step thought processes.

Similarly, a team led by researchers from Mohamed bin Zayed University has developed BiMediX2, the first AI system of its kind that can analyze and describe medical images in both English and Arabic.

Google's Gemini 2.0 Flash Thinking represents another similar breakthrough in reasoning. Meta’s Llama 4 is also expected to incorporate more advanced reasoning and voice capabilities.

Beyond conversation (chatbots) and reasoning, AI systems are also developing memory, a key ingredient of intelligence. ChatGPT can now store information from previous conversations and use it in its responses. Gemini can remember users' preferences, life details, and work-related information to provide personalized responses.

Similarly, Microsoft has started releasing the first preview of its Recall AI feature for Copilot Plus PCs. Recall is a memory tool that automatically captures your digital activity on Copilot Plus PCs. Once snapshots are enabled, the app takes screenshots of your work and organizes them chronologically. You can then search through your past activities using natural language: describe what you're looking for, and Recall will find the relevant screenshots. The app also features a timeline view, letting you jump to specific dates to see which apps and websites you used.

As memory improves, LLMs can adapt to the diverse and ever-changing profiles of every distinct user and provide up-to-date, personalized assistance. According to Google DeepMind and Stanford researchers, AI agents built from a two-hour interview can replicate much of a person's attitudes and survey responses.

These advances in memory mean that AI systems will improve their ability to individualize instruction and more easily support efforts to Advance Preparation Opportunities for Lifelong Learning Outcomes.

These advances also highlight the urgency of action. Not only do we need to act to prepare students for this emerging (not future) world, but advancing machine intelligence will further complicate traditional assessments.

We are arguably still some distance from human-level intelligence. AI “godfather” Yann LeCun argues that true AI doesn't lie in large language models alone but requires a combination of sensory learning and emotions. That may be as much as a decade away. But even if it is a decade or more away, it will arrive before most of our current students turn 30, and the developments along the way will have a radical impact on society.

Miles Brundage, a former member of the OpenAI Safety Team who left due to safety concerns, bet Gary Marcus $20,000 that AI will be able to do eight of these ten things by 2027.

Watch a previously unseen mainstream movie (without reading reviews etc) and be able to follow plot twists and know when to laugh, and be able to summarize it without giving away any spoilers or making up anything that didn’t actually happen, and be able to answer questions like who are the characters? What are their conflicts and motivations? How did these things change? What was the plot twist?

Similar to the above, be able to read new mainstream novels (without reading reviews etc) and reliably answer questions about plot, character, conflicts, motivations, etc, going beyond the literal text in ways that would be clear to ordinary people.

Write engaging brief biographies and obituaries without obvious hallucinations that aren’t grounded in reliable sources.

Learn and master the basics of almost any new video game within a few minutes or hours, and solve original puzzles in the alternate world of that video game.

Write cogent, persuasive legal briefs without hallucinating any cases.

Reliably construct bug-free code of more than 10,000 lines from natural language specification or by interactions with a non-expert user. [Gluing together code from existing libraries doesn’t count.]

With little or no human involvement, write Pulitzer-caliber books, fiction and non-fiction.

With little or no human involvement, write Oscar-caliber screenplays.

With little or no human involvement, come up with paradigm-shifting, Nobel-caliber scientific discoveries.

Take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

Even advances in the ballpark of these will fundamentally change society. This is all happening while most schools still think the primary question facing education is how much of a paper should be written by a text chatbot.

*AI & Related Advances in Robotics

[Link to the above — 12-30-2024]

Although robotics receives less attention among those focused on “AI in education,” 2024 has been called the “year of the realistic robot.” We can now see robots operating in all-terrain environments.

And we can see robots playing soccer and analyzing soccer matches.

The fields of AI and robotics represent distinct yet complementary domains in modern technology. AI consists of software-based systems designed to perform tasks that traditionally require human intelligence. These systems encompass functions like pattern recognition, learning from data, natural language processing, complex decision-making, and visual perception. They excel at processing vast amounts of information and drawing insights to inform intelligent actions and responses.

Robotics, in contrast, focuses on the physical realm through the development and operation of mechanical systems capable of interacting with the real world. This field emphasizes mechanical engineering, hardware design, movement systems, environmental sensors, and actuators that enable physical manipulation of objects and navigation through spaces.

While these fields maintain distinct characteristics, they increasingly intersect in powerful ways that enhance both disciplines. Modern robotics systems often incorporate AI technologies to create more sophisticated and autonomous machines. For instance, manufacturing robots employ computer vision systems to identify and manipulate objects with precision, while delivery robots utilize AI-powered pathfinding algorithms to navigate complex urban environments. In medical settings, robotic surgical assistants leverage machine learning to enhance their precision and adaptability.

In the context of models with advanced reasoning, such as o1 and o3, AI systems could improve robotics in the following ways:

Navigation and Path Planning. Robots equipped with o3-level reasoning could navigate complex environments more effectively. For instance, the model's ability to solve problems on the ARC-AGI benchmark, which tests an AI's ability to adapt to novel tasks, indicates its potential in real-world scenarios where robots must adapt to new tasks or environments.

Task Execution. The o3 model's proficiency in coding and mathematical reasoning could allow robots to execute tasks with higher precision and efficiency. This would be particularly useful in industrial settings where robots must perform intricate operations or solve problems on the fly.

Interaction with Humans. With enhanced reasoning, robots could engage in more natural and contextually appropriate interactions with humans, understanding and responding to complex queries or commands.

We’ve also seen rapid advances in reinforcement learning. Reinforcement Learning (RL) plays a critical role in the evolution of intelligent robotic systems, offering a paradigm where robots learn to make decisions through interaction with their environment.

This approach is particularly advantageous for robotics because it enables robots to adapt to new situations without the need for explicit programming for every conceivable scenario. For instance, a robot can learn to navigate through a cluttered room by interacting with its surroundings, adjusting its path based on obstacles it encounters, thereby improving its performance through trial and error, much like how humans learn from their experiences.

The essence of RL lies in its ability to allow robots to optimize their actions to achieve specific goals. Whether navigating a maze or performing a task with minimal energy consumption, RL employs a reward system in which the robot receives feedback on its actions. This feedback guides the robot toward better decision-making, optimizing its behavior to maximize rewards or minimize penalties.
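The reward-driven loop described above can be illustrated with tabular Q-learning, one classic RL algorithm (real robotic systems typically pair RL with deep neural networks instead of a lookup table). This is a minimal sketch under stated assumptions: a toy five-cell corridor where the "robot" starts at the left end, earns +1 for reaching the right end, and pays a small -0.01 penalty for every other step. The environment, the `step` and `train` helpers, and all parameter values are illustrative, not taken from any specific robotics system.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (assumed toy setup)
ACTIONS = [-1, +1]    # index 0 = move left, index 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate


def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True      # reward for reaching the goal
    return next_state, -0.01, False       # small penalty for every other step


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the long-term reward of taking action a in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            if rng.random() < EPSILON:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted future value
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if q[0] > q[1] else "right" for q in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

No rule in the code says "go right"; the robot discovers that policy purely from the rewards and penalties it receives, which is the trial-and-error learning the paragraph describes.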

Recent advancements in RL, particularly with integrating deep neural networks, have shown significant promise in enabling robots to generalize from past experiences to new, unseen scenarios. This generalization capability is crucial for robots operating in dynamic environments where they must adapt to changes or unexpected events. For example, a robot trained to pick up objects of various shapes and sizes can apply this knowledge to grasp new objects it has never encountered before, demonstrating the power of RL in fostering adaptability and learning in robotics.

Recently, researchers unveiled Genesis, a new open-source simulation platform that aims to accelerate how AI systems learn to control robots. Genesis is a generative physics engine, built on a physics simulation platform designed for general-purpose robotics and physical AI applications, that can generate 3D and 4D dynamical worlds (Spencer).

Genesis combines multiple physics solvers—the same algorithms that power video game physics—into a single, powerful framework designed specifically for training AI that will eventually operate real-world robots. Using GPU-accelerated parallel processing, Genesis can run simple simulations at up to 43 million frames per second—that's 430,000 times faster than in real-time. To put this in perspective, an AI system can gain the equivalent of ten years of training experience in just one hour of computing time.
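The figures above can be checked with simple arithmetic. Dividing the peak frame rate by the quoted speedup gives the implied real-time baseline, and the second expression shows the speedup the "ten years in one hour" claim requires (assuming 8,766 hours per year). Both results are derived here, not reported by the Genesis team.

```latex
\frac{43{,}000{,}000\ \text{frames/s}}{430{,}000} = 100\ \text{frames/s (implied real-time rate)}
\qquad
\frac{10 \times 8{,}766\ \text{h}}{1\ \text{h}} = 87{,}660 \approx 88{,}000\times\ \text{real time}
```

So "ten years in one hour" corresponds to a speedup of roughly 88,000 times, comfortably below the 430,000-times peak quoted for simple simulations.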

Similarly, Google DeepMind has unveiled Genie 2. “Genie 2 is a world model, meaning it can simulate virtual worlds, including the consequences of taking any action (e.g. jump, swim, etc.),” DeepMind writes. “It was trained on a large-scale video dataset and, like other generative models, demonstrates various emergent capabilities at scale, such as object interactions, complex character animation, physics, and the ability to model and thus predict the behavior of other agents.”

Models like Genesis and Genie 2 (Google) have a couple of purposes: they can serve as training grounds for virtually embodied AI agents, able to generate a vast range of environments for them to take action in. They can also, eventually, serve as entertainment tools in their own right. Today, Genie 2 generations can maintain a consistent world “for up to a minute” (per DeepMind), but what might it be like when those worlds last for ten minutes or more? Anything a person has an image of or takes a photo of could become a procedural game world. And because systems like Genie 2 can be primed with other generative AI tools, you can imagine intricate chains of systems interacting with one another to continually build out more and more varied and exciting worlds for people to disappear into. “For every example, the model is prompted with a single image generated by Imagen 3, GDM’s state-of-the-art text-to-image model,” DeepMind writes. “This means anyone can describe a world they want in text, select their favorite rendering of that idea, and then step into and interact with that newly created world (or have an AI agent be trained or evaluated in it).”

Fei-Fei Li’s start-up, World Labs, is developing similar technologies.

Brain-Computer Interfaces

The field of brain-computer interfaces (BCIs) is witnessing remarkable advancements, pushing the boundaries of what was once considered science fiction into tangible reality. At Johns Hopkins APL and School of Medicine, researchers have made significant strides in developing a digital holographic imaging system capable of detecting neural activity through the scalp and skull, offering a new path to noninvasive BCIs. This technology identifies tissue deformation during neural activity, providing a novel signal for brain activity that could be used in future BCI devices. In parallel, Precision Neuroscience has introduced its Cortical Surface Array, featuring 1,024 electrodes, which has provided unprecedented insights into neural patterns in the motor cortex. This high-resolution array captures beta oscillations, crucial for understanding how the brain controls movement, and has the potential to revolutionize neurological treatment and rehabilitation.

Notably, Neuralink, Elon Musk's company, has achieved a significant milestone by successfully implanting a brain chip in a human for the first time, aiming to create direct brain-to-computer interfaces. The device, known as "the Link," uses thin, flexible threads with electrodes to record neuron activity, transmitting signals via Bluetooth. This technology focuses on aiding individuals with severe paralysis by restoring control over limbs or communication devices. Additionally, UC Davis Health has developed a groundbreaking BCI that translates brain signals into speech with up to 97% accuracy. This system was implanted in a man with ALS, allowing him to communicate his intended speech within minutes of activation, marking a major step forward in restoring interpersonal communication for those with severe speech impairment.

Neuralink's brain-computer interface could revolutionize problem-solving in educational settings. Students might access relevant information instantly, allowing them to tackle complex problems more efficiently. This technology could enable rapid data processing and analysis, enhancing students' ability to identify patterns and connections and fostering advanced problem-solving skills across various subjects. The brain chip might facilitate real-time student collaboration, leading to more dynamic and innovative problem-solving approaches. By providing immediate feedback on problem-solving strategies, Neuralink could help students refine their approaches and learn from mistakes more quickly, allowing them to engage with more challenging and diverse problems and fostering greater creativity and adaptability.

*AI and the Future of “Work”

The debate over AI's impact on work is complex. Still, more and more people believe AI will substantially disrupt the job market. This is especially true as millions of agents “that can do more or less the same job as your average remote worker” are expected to be deployed in 2025.

One example of an AI agent is Google’s Deep Research, which will prepare a research plan on your topic, conduct research across 40-50 high-quality websites and documents, and then write a 7-20 page research report with 75+ footnotes. I have used this tool extensively, and I’ve yet to find any hallucinations.

Perplexity’s Aravind Srinivas says that we’ll soon see Perplexity not only conduct research but also turn that research into slide presentations. He also speculates that we may soon see a world in which individual agents negotiate product purchases on our behalf, perhaps with agents advertising to each other.

Very few (if any) of the “future of work” reports that anticipate smaller disruptions account for the deployment of these agents or the most advanced reasoning capabilities. In my mind, this has always been a weakness of the more conservative projections.

Even before the deployment of agents on the “for hire” market, translators and illustrators are losing their jobs to AI.

In the area of medicine, we’ve already seen “Superhuman performance of a large language model on the reasoning tasks of a physician” (Brodeur et al). An AI tool named Mia, tested by the NHS, successfully identified tiny signs of breast cancer in 11 women that were missed by human doctors. This tool analyzed mammograms of over 10,000 women, flagging all those with symptoms and an additional 11 cases not identified by radiologists. This early detection capability could significantly improve patient outcomes by catching cancers at their earliest stages. This does not necessarily mean that human doctors will lose their jobs, but regardless, it will substantially impact medical care.

The impact of AI on work will continue to be studied, but more thought needs to go into which jobs will be safe(r), at least for the next 5-10 years. Allie Miller, one of the leading and most respected voices on AI, noted:

Some argue that AI cannot do work that requires creativity, emotional intelligence, and complex decision-making in uncertain contexts, but most people do not do these jobs. New research shows that a “PC Agent, trained on just 133 cognitive trajectories, can handle sophisticated work scenarios involving up to 50 steps across multiple applications.”

Graduating students will need retraining and constant upskilling, and they can’t rely on the government to provide it. The best option is for them to learn how to use AI systems that can support Advancing Preparation Opportunities for Lifelong Learning Outcomes.

*World-Wide Changes are Coming Fast and Furious, and They Aren’t Just About AI

Most of those running schools today grew up in an era of peace, not extensive societal conflict. The period between the late 1990s and early 2010s was indeed marked by increasing global interconnectedness, driven by factors like the rise of the internet, expanding free trade agreements, and the growing influence of international institutions. The fall of the Soviet Union had ushered in what some scholars called the "end of history" - a supposed triumph of liberal democracy and market capitalism.

However, this era of relative stability began to unravel in the 2010s. Several factors contributed to this shift: The 2008 financial crisis eroded faith in globalized capitalism and international institutions. Social media, once seen as a democratizing force, began to amplify political polarization and nationalist sentiments. Growing economic inequality within countries fueled populist movements that often rejected internationalist policies.

The rise of China as a global power also challenged the U.S.-led international order. Regional powers like Russia became more assertive, as seen in Russia’s 2014 annexation of Crimea and its full-scale invasion of Ukraine in 2022. Brexit in 2016 marked a symbolic blow to European integration, while various countries saw the emergence of leaders who emphasized national sovereignty over international cooperation.

By the 2020s, the world had shifted dramatically from the optimistic globalism of the late 1990s. International tensions increased, trade became increasingly weaponized through sanctions and tariffs, and military conflicts erupted in various regions. The COVID-19 pandemic further accelerated these trends, exposing vulnerabilities in global supply chains and reinforcing nationalist impulses toward self-sufficiency.

We are now entering what some have described as an age of comprehensive conflict. Indeed, what the world is witnessing today is akin to what theorists in the past have called “total war,” in which combatants draw on vast resources, mobilize their societies, prioritize warfare over all other state activities, attack a wide variety of targets, and reshape their economies and those of other countries. Newsweek recently identified 10 significant conflicts that Trump will inherit.

And it’s not just geopolitical conflict that our students face. In a manifesto, Will Richardson identifies many other challenges facing today’s students:

Climate change (caused by humans or natural factors)

A growing rich-poor gap

Social isolation and mental health challenges (See also OECD)

Social media addiction

I’ll add: Forthcoming deportations and family separations (for those affected)

I’ll add: School shootings

See also: Pedagogies in the Time of Collapse: A Hopeful Education for the End of the World as We Know It.

The fundamental disconnect between school leaders' formative experiences and students' current reality creates profound educational implications that reach into every aspect of school operations. Today's students are coming of age in an environment where conflict, instability, and polarization feel normal rather than exceptional. Unlike their teachers who grew up expecting peace and progress, these students are developing a fundamentally different worldview, navigating a world where global tensions directly affect their daily lives through social media, economic impacts on their families, and sometimes even having refugee classmates from conflict zones.

It is also worth noting that while previous generations did face challenges (the Vietnam War, the Korean War, etc.), today's students, with an interconnected world at their fingertips, are much more exposed to all of it, including the most brutal footage of conflicts on Twitter and TikTok.

Schools face unprecedented operational challenges in response to these shifts. They must balance welcoming learning environments with preparing for potential threats in ways that weren't necessary in the 1990s, including physical security and cybersecurity measures. Traditional approaches to teaching topics like current events, civics, and history need updating to address a more complex and conflicted world. Schools must help students understand multiple perspectives while avoiding false equivalencies and partisan polarization.

The challenges extend into community relations as schools increasingly find themselves managing tensions from the broader society that spill into educational settings. This includes navigating political divisions among parents and staff, supporting students from different cultural backgrounds during times of international tension, and addressing conflicts that arise from social media but impact the school environment. School leaders and teachers need new skills to facilitate discussions about controversial topics, support students processing traumatic global events, create inclusive environments amid increasing societal divisions, and help students develop digital literacy and critical thinking skills.

These changes require a fundamental rethinking of how schools operate and how they prepare students for an increasingly complex and contested world. The traditional education model, developed in a more stable era, must evolve to meet these new challenges while maintaining its core mission of preparing students for successful futures.

These shifts in global dynamics and educational challenges make it clear that there can be no return to pre-pandemic educational approaches, despite some calls to "get back to normal." The COVID-19 pandemic didn't create most of these challenges; rather, it accelerated existing trends and exposed vulnerabilities in our educational systems that were already present. The nostalgia for pre-pandemic schooling often overlooks how that model already struggled to prepare students for an increasingly complex and polarized world. Instead of trying to recreate an educational model designed for a more stable era, schools must adapt to help students thrive in a world characterized by persistent uncertainty, rapid change, and societal tensions. This means developing new approaches to everything from classroom management to curriculum design that acknowledge and address the realities of our current age of comprehensive conflict.

While we cannot return to pre-pandemic times, this moment of transformation presents an opportunity to build educational systems that are more resilient, relevant, and responsive to student needs. Today's students, despite growing up in challenging times, show remarkable adaptability and technological fluency. They often demonstrate a sophisticated understanding of complex global issues and a capacity for empathy that crosses traditional boundaries. Schools can harness these strengths to create learning environments that not only acknowledge current realities but also prepare students to be active participants in shaping a better future. By embracing this transformation rather than resisting it, educators can help students develop the critical thinking, emotional intelligence, and adaptive capabilities they'll need to navigate and ultimately help resolve the complex challenges of our time.

Our Students’ Worries

While many schools continue to ignore AI, students understand that the economic value of what they are learning may be limited at best. They are very aware that their future job prospects are uncertain.

This applies not just to the best students in the most reputable CS programs but to all high school and university students.

Beyond employment, many students also fear the development of superintelligence and "AI Terminator" or takeover scenarios. While many consider these scenarios "far-fetched," I don't know of anyone who would say the risk is zero.

Roman Yampolskiy, an AI safety researcher and director of the Cyber Security Laboratory at the University of Louisville, puts the probability of AI takeover at 99.999%. Elon Musk has put it at 10-20%, and Geoffrey Hinton, often called a "Godfather of AI," recently offered the same 10-20% estimate.

While educators often implore students to work hard and prepare for their future, many students wonder what that future will entail. Most educators are not willing or able to have these conversations with them.

Restrictive Approaches to Managing AI in Education Will Fail to Protect Students and the Academy

Many of the restrictive approaches taken toward AI in education are based on four faulty premises.

(1) We can distinguish between AI writing and human writing. This was true of older AI tools and may still be true, to a degree, of free tools, but it isn't true of paid tools, at least when they are trained on a writer's samples. Many studies have shown that AI writing cannot be reliably detected by AI-writing detectors or by human readers.

(2) AI makes a lot of factual errors. AI obviously still makes some factual errors, but the number has *radically declined*, and when models are fine-tuned and/or multiple models are used in combination (for example, by adding symbolic AI approaches), error rates start to collapse. In many instances, AI output will contain fewer errors than student work.

(3) AI is something out there that students go and get to use. How can you say "no AI on an assignment" when AI is proactively embedded in browsers, search engines, word processors, operating systems, phones, eyeglasses, and contact lenses? AI is ubiquitous.

AI will keep getting better and more pervasive in these areas, which means any academic adaptation that relies on these premises is "racing to failure."

These approaches are psychologically reassuring, but they don't really solve anything, and their workability will continue to wane over time. Studies that cite their effectiveness against what are now ancient AIs (even ChatGPT 4.0) don't prove much. These approaches also lock in systems that are losing the support of industry, taxpayers, students, and parents, who directly or indirectly pay the bill.

"No AI" or "AI only in X situations" won't protect the academy from AI. At best, it's a short-term illusion. What will secure the academy's longevity is putting all of its incredible brainpower into figuring out how best to work with AI.

(4) We should draw lines and protect the status quo. Instead, we need to focus on Advancing Preparation Opportunities for Lifelong Learning Outcomes.

Rising to the Challenge: Helping Our Students Prepare for a World of Machine Intelligence

In a world where our devices know as much as we do and are as smart as we are, at least in many ways, educating students to thrive will require significant adaptations in what and how we teach.

What Students Need to Learn

What — Foundational Knowledge

Discussions are emerging about what students need to learn, both now and in a future where machines may reach human-level intelligence.

This debate has existed since the dawn of the Internet. Some have argued that students don't need to learn anything because it's all on the Internet. However, this notion didn't stick because people can't look something up every time they need to act.

AI, and generative AI (GAI) in particular, is different, however, because with GAI, computers can act on knowledge and complete tasks, something the Internet could not do. For example, using the knowledge they are trained on, GAI systems can write papers, run advertising campaigns, take control of applications on computers, answer customer service queries, teach content, and grade exams. This raises questions about what one needs to know to work well with these tools. How strong a writer do you need to be to direct or co-write a paper with AI? How strong a designer do you need to be to co-design with an AI tool that can instantly generate multiple designs? How well do you need to know grammar and spelling to make sure AI tools adequately proofread your work? What knowledge do you need to work with the tools to solve problems and make money?

Carlo Incho has identified five roles students need to learn to take on (Flow Director, Pattern Spotter, Boundary Setter, Insight Builder, and Strategic Partner), which together form a framework for developing wisdom in working effectively with AI. The Flow Director role involves seamlessly switching between independent thinking and engaging with AI, like conducting a cognitive orchestra to achieve goals. The Pattern Spotter focuses on recognizing when AI outputs are helpful versus misleading, relying on refined judgment to discern quality. The Boundary Setter emphasizes setting clear limits, protecting sensitive information, and making smart choices about AI interactions. The Insight Builder transforms AI outputs into meaningful contributions, using them as a springboard for deeper thought and preserving intellectual sovereignty. Finally, the Strategic Partner reframes AI as a collaborative partner, engaging it in dialogue, challenging assumptions, and using its capabilities to amplify human thinking. Together, these roles highlight the importance of developing human-centric skills for AI collaboration, focusing on wisdom rather than technical expertise, and maintaining a balance that preserves the unique value of human insight.

What knowledge will students need in the future to solve problems and make money?

There are also questions of prioritization. Should learning calculus really be prioritized over learning statistics? In today's AI-driven world, statistics has emerged as a particularly crucial skill, arguably more so than calculus, though both remain valuable. Statistics provides the fundamental tools needed to understand and interpret the massive amounts of data that AI systems generate and consume: determining whether patterns are meaningful or just random noise, making reliable predictions, and evaluating model performance. While calculus is important for understanding the mathematical foundations of machine learning algorithms, statistics offers more immediately practical tools for working with real-world data, making informed decisions, and communicating results to stakeholders. Statistical knowledge also enables us to interpret machine learning model outputs, understand the probability distributions that underpin many AI algorithms, and identify potential biases in AI systems. Rather than treating this as a strict either/or choice, however, it's worth recognizing that the two subjects complement each other: calculus underpins the optimization processes in machine learning, while statistics helps interpret and validate the results.

These are just a couple of examples. I think it is important that every field or subject area think hard about what students need to learn in a world where machines will be able to do much of what human knowledge workers do.

Thinking about these questions is important to Advancing Preparation Opportunities for Lifelong Learning Outcomes.

What — Skills

Existing curriculums have a bias toward just knowing, the exact area where AI is most able to outperform human beings.

Charles Fadel, 2024, p. 13

While the question of what content students need to learn in an AI world remains largely unexplored, more attention has been given to the skills they need to develop. This focus is not unique to AI; many have argued for decades that schools should place greater emphasis on these skills. In a world where AI can do many jobs, however, these skills will become essential for running a business.

Critical Thinking, Creativity, Problem-Solving, and Caring

Critical and creative thinking must be linked in the minds of leaders. They must have the creative imagination to conceive new ways to solve problems and the critical thinking to turn innovative ideas into practice. Military leaders must be able to do this under volatile, uncertain, complex, and ambiguous (VUCA) conditions. (Upton et al., 2024)

Problem-Solving

Critical Thinking

Metacognitive skills

Metacognitive skills (the abilities to understand, monitor, and regulate our own thinking and learning processes) have become increasingly vital for students navigating AI. These skills function as our brain's "control center," enabling us to plan how to approach tasks, evaluate our understanding, and adjust our strategies when needed. As AI tools like ChatGPT become more prevalent, students must develop strong metacognitive abilities to critically evaluate AI outputs, understanding when to trust or question AI-generated content while recognizing their own knowledge gaps that AI might be helping to fill.

Strong metacognitive skills also enable students and employees to make strategic decisions about when and how to use AI tools appropriately, plan how to effectively combine AI assistance with their own thinking, and adapt their learning strategies based on what works best for them.

The development of these skills is crucial for maintaining problem-solving independence. Students who can monitor their own problem-solving process, identify where they're stuck, and choose appropriate strategies will be better equipped to use AI as a tool rather than becoming dependent on it. Furthermore, as the future will likely involve significant human-AI collaboration, students with well-developed metacognitive abilities will be better positioned to maintain awareness of their own contributions versus AI input, recognize when verification or expansion of AI-generated content is necessary, and effectively integrate AI capabilities with their own unique human insights.

Wisdom

In his book "Wisdom Factories: AI, Games, and the Education of a Modern Worker," Dr. Tim Dasey, who spent 35 years working with AI at MIT's Lincoln Lab, argues for a significant shift in educational paradigms to prepare students for a future in which AI increasingly takes over roles traditionally filled by human experts. Instead of emphasizing the accumulation of detailed knowledge or expertise, which AI can now supply more efficiently, Dasey advocates for an education system that cultivates wisdom.

Wisdom, in this context, refers to the ability to apply knowledge in complex, real-world scenarios, fostering skills like critical thinking, creativity, judgment, and the capacity to manage and navigate uncertainty. Dasey emphasizes that humans must complement AI rather than compete with it. Wisdom skills enable humans to work alongside AI, focusing on tasks where human intuition, empathy, and strategic thinking are irreplaceable. Wisdom equips individuals with the ability to adapt to new information and solve problems that are not straightforward or have no single correct answer. It encourages a holistic approach to learning, where students are not just experts in one field but can integrate knowledge from various disciplines to address complex issues.

Valuing Different Things — Caring

Stuart Russell, a prominent AI researcher, has highlighted how the traditional premium placed on knowledge work is giving way to a new reality in which care-based professions take center stage. This transformation isn't a minor adjustment in the job market; it represents a fundamental reimagining of what we value in the workforce.