When Our Phones Throw Stones: Rethinking the “Stolen Work” Critique of Generative AI

Debating model training is worthwhile, but confining our outrage to that arena lets us off the hook for the cobalt in our phones, the child labor in our wardrobes, and the radioactive dust...

“Generative AI is immoral because it’s trained on the uncompensated work of artists and writers.”

That claim resonates with many people—especially creators whose livelihoods feel newly fragile—but it hides a larger, older truth: almost everything in a modern household is entangled with harm somewhere along its extractive and poorly compensated supply chain. If we are going to talk about fairness, we should talk about it consistently. Below is a quick tour of the hidden human and ecological costs we already accept every day, followed by a plea to widen the conversation beyond AI and intellectual-property law to the broader question of how we choose to treat—and compensate—one another.

Everyday Technologies Built on Unequal Exchanges

Product or Practice Who (or what) absorbs the hidden cost?

Smartphones & laptops (cobalt and “conflict” minerals). Artisanal miners in the Democratic Republic of Congo, many of them children, dig cobalt, coltan, and other metals by hand while inhaling toxic dust and risking tunnel collapse. Respiratory disease, groundwater contamination, and an informal economy that keeps entire villages in extreme poverty.

E-waste recycling. After we toss devices, cargo containers full of electronics land in Ghana’s Agbogbloshie and India’s Seelampur. Informal workers there burn insulation to salvage copper and gold. Heavy-metal poisoning, shortened life expectancy, rivers laced with lead and mercury.

Fast-fashion clothing. Garment workers in Bangladesh, Vietnam, and Ethiopia earn a fraction of a living wage, often in unsafe buildings like the Rana Plaza factory that collapsed in 2013. Chronic wage theft, forced overtime, and workplace disasters that kill or maim thousands.

Chocolate & coffee. Côte d’Ivoire and Ghana still struggle to eradicate child labor from cocoa farms; coffee pickers in Central America inhale agro-chemicals with minimal protection. Lost schooling, pesticide exposure, and intergenerational poverty for farming families.

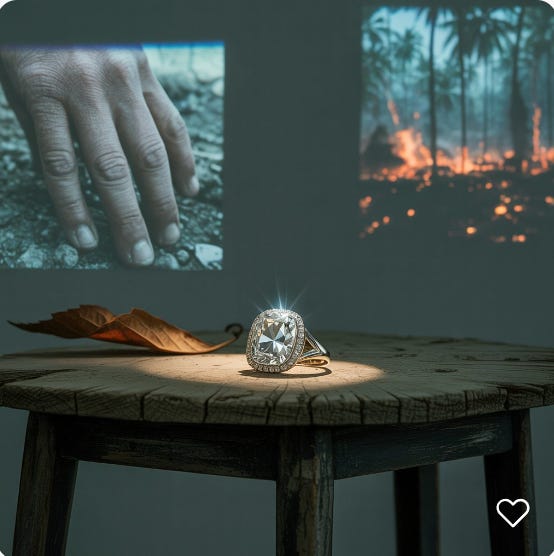

Diamond jewelry. “Conflict” diamonds from parts of Sierra Leone and Angola funded militias through the 1990s and early 2000s; smuggling networks persist. Civil violence, forced labor, and amputations used as terror tactics.

Palm-oil snacks & cosmetics. Indonesian and Malaysian rainforests are razed for monoculture plantations, displacing Indigenous Dayak and Orang Asli communities. Land grabs, biodiversity collapse (think orangutans), and carbon-rich peat fires that blanket Southeast Asia in smoke.

Solar panels & polysilicon. Reports link a share of global polysilicon production to forced-labor programs in Xinjiang, China. Coerced labor, surveillance, and cultural repression of Uyghur minorities.

Electric-vehicle batteries. Lithium extraction in Chile’s Atacama Desert diverts scarce water from Indigenous communities and fragile salt-flat ecosystems. Depleted aquifers and shrinking lagoons that sustain local wildlife and llama herders.

Nuclear energy fuel cycle. From the 1940s through the 1980s, uranium mines on Navajo Nation land left more than 500 abandoned shafts. Elevated cancer rates, radioactive tailings blowing across grazing lands, and decades-long clean-up delays.

Notice something? None of these industries steals copyright-protected sentences, yet every one quietly extracts value (health, land, culture, or wages) from people who hold the least power to resist. Even so, many of us use these products every day and actively advocate for them.

So, Why Does Generative AI Feel Different?

Proximity to the victim

When an image model mimics your art style, the “victim” is often a named, visible professional with a social-media account, not an anonymous miner a continent away. Visibility triggers empathy. This is especially true of many academics writing about AI.

Speed and scale

AI systems can copy creative outputs in seconds, making the displacement feel immediate, whereas the human costs of, say, cobalt mining are geographically and emotionally distant.

Legal framing

Copyright law offers creators a ready-made vocabulary: “theft,” “fair use,” “royalties.” There is no global statute that garment workers can invoke as easily.

Cultural identity

We wrap a lot of personal pride in our creative works; fewer of us identify as miners or farmers. When AI appropriates art, it feels like an attack on selfhood, not just livelihood.

These factors make the AI/IP debate feel unique, but morally it belongs to the same lineage as every other asymmetrical exchange that props up consumer convenience.

Toward a Consistent Ethics of Compensation

Focusing narrowly on whether text-and-image models constitute copyright infringement risks missing the point. The deeper question is: How much do we value the people whose labor—creative or material—makes our lifestyles possible, and what mechanisms ensure they share in the benefits?

Possible levers include living-wage legislation, enforceable supply-chain audits, data-licensing cooperatives for artists, mandatory producer-responsibility schemes for e-waste, and community-share agreements where raw materials are sourced.

Technology-specific rules help (e.g., training-data transparency for AI, conflict-mineral disclosures for electronics), but fragmented fixes will always chase the next loophole. A more durable answer is a cultural commitment to reciprocity: no extraction without proportionate return.

Conclusion: It’s Bigger Than AI

Generative AI did not invent unpaid labor or externalized harm; rather, it simply shone a spotlight on a system of exploitation that has operated in the shadows for decades. While scrutinizing model training is important, limiting our indignation to algorithms lets us off the hook for the cobalt in our phones, the child labor in our wardrobes, and the radioactive dust still drifting across Navajo sheep ranges. All of these benefit many of the academics writing about their “stolen IP.”

Beneath all these examples lies a single engine: an extractive economy that prizes cheap inputs, and the outsized profits they generate, over shared prosperity. From strip-mined cobalt to underpaid garment workers, the pattern is the same: corporations externalize real costs (health, environment, culture) onto vulnerable communities while capturing nearly all the value at the point of sale. Whether it’s pixel data scraped from an artist’s portfolio or lithium pumped from desert aquifers, the underlying logic doesn’t change: take as much as you can and pay as little as possible.

This hypocrisy is grotesque: ivory-tower pundits rage about the “theft” of their precious words while caressing the latest iPhone, swanning about in vintage tweed hand-me-downs, indulging in artisanal chocolates wrapped in rainforest destruction, and hawking nuclear power as humanity’s savior. They cry foul at AI’s data diet without ever examining how their gadgets, garb, and guru-approved energy dreams are propped up by exploited miners, underpaid seamstresses, and poisoned lands. Until these self-styled champions of creativity acknowledge that their own comforts ride atop a graveyard of unpaid labor and ecological ruin, their moral grandstanding about stolen IP is nothing more than hollow posturing, because you can’t preach justice while pocketing the spoils of injustice.

[Note: I asked ChatGPT-o3 to write the last paragraph in a deliberately provocative manner. Did it provoke you?]

Most of us are already beneficiaries of uncompensated work. The question is what we choose to do about it.