What is Artificial General Intelligence (AGI), and why is it so significant? How close are we?

Altman, Bengio, Hinton, Kurzweil, LeCun, Mostaque, Musk, Sutskever, Tegmark

[Our comprehensive report; debate and AI; our book]

As researchers and engineers continue to work on AI, the day will come when the digital brains that live inside our computers will become as good as, and even better than, our own biological brain. Computers will become smarter than us. We call such an AI an AGI, Artificial General Intelligence…. Such an AGI will have a dramatic impact on every area of life, of human activity, and society. – Ilya Sutskever

We must take seriously the possibility that generalist AI systems will outperform human abilities across many critical domains within this decade or the next. – Yoshua Bengio, Geoff Hinton, et al.

By the time we get to 2045, we'll be able to multiply our intelligence many millions-fold. And it's just really hard to imagine what that will be like. – Ray Kurzweil (Google, formerly MIT), on the Lex Fridman Podcast

TL;DR

*AI could soon replace all or nearly all unskilled workers in non-labor jobs, even if AI never moves beyond its current sub-cat/dog-level intelligence.

*AI is already superior to human intelligence in a limited number of specific domains and applications.

*We are probably 5–20 years away from a world in which AI is smarter than us in all domains in which we are smart, and probably even in domains we aren’t yet aware of. It could take less time.

*We are 3-5 years away (or less) from when AI will be able to do a significant number of jobs that human knowledge workers are currently doing.

*Intelligence includes many domains and AI will develop intelligence in each domain gradually over time, but in an unpredictable way. Each development will impact the world of work.

*In the next 5-20 years, many human jobs will shift away from “knowledge work” to caring and entrepreneurship roles that will require students to develop essential “soft” and “durable” skills.

*AI will radically transform society; education will need to change radically if it is going to remain relevant.

Introduction

The shocking firing of Sam Altman, the CEO of OpenAI (the company that built ChatGPT and grew from $0 to a $90 billion valuation), has led many to ask why the board, which is charged with protecting AI safety and the future of humanity, might have fired him.

We may never know, but the episode has opened a discussion of human-level artificial general intelligence (“AGI”), as there was some speculation that the board acted because OpenAI had an undisclosed “AGI” or, more likely, something approaching it.

Although that turned out not to be the case, and reports indicate the board could not give a single example of Altman not being candid, the speculation was not “crazy talk”: the board reportedly told employees that allowing OpenAI to collapse would be “consistent with the mission,” which is to safely develop AGI while protecting the interests of humanity.

Ordinarily, that would not be consistent with a board’s duties, and deliberately collapsing a company would get a board sued. But if OpenAI had an undisclosed AGI or something similar and management didn’t inform the board, that would make it hard for the board to govern, since OpenAI’s charter says it must act in the interest of humanity, and part of the board’s job is to look out for that interest. And because, by design, the for-profit corporation was subservient to the non-profit OpenAI, the board’s priority is humanity, not profit. More and more reporting (NYT, Vox, The Atlantic) confirms my original theory that part of the motivation for removing Altman was concern that he was prioritizing the release of AI products over safety.

Regardless of what comes out, I think it is unlikely that OpenAI has an “AGI,” both because AGI is not some easily boxed tool and because there isn’t much out there technically to suggest that we have it or nearly have it. But we are potentially getting close, and it is important for people to understand what AGI is, what it means for the world as we move toward it, and how close we are to it or something similar. Developing a safe AGI that benefits humanity is OpenAI’s mission, and there is a good chance that OpenAI (or another company) will achieve that mission by developing an AGI or something similar.

Pre-AGI AI

Before I talk about AGI, I want to highlight that we don’t even need to achieve human-level “general” intelligence in computers for AI to cause a radical disruption in society, as even AIs that are not “as smart as a cat or dog” (LeCun) are outperforming humans in equity hedge markets, cancer detection, and customer service.

Depolo (better than humans alone)

Coatue (Can do well without humans)

For a live demo of how this already works, check out air.ai.

If pre-cat/dog-level AI can do all of these things, think about what human-level AI may be able to do.

What is AGI?

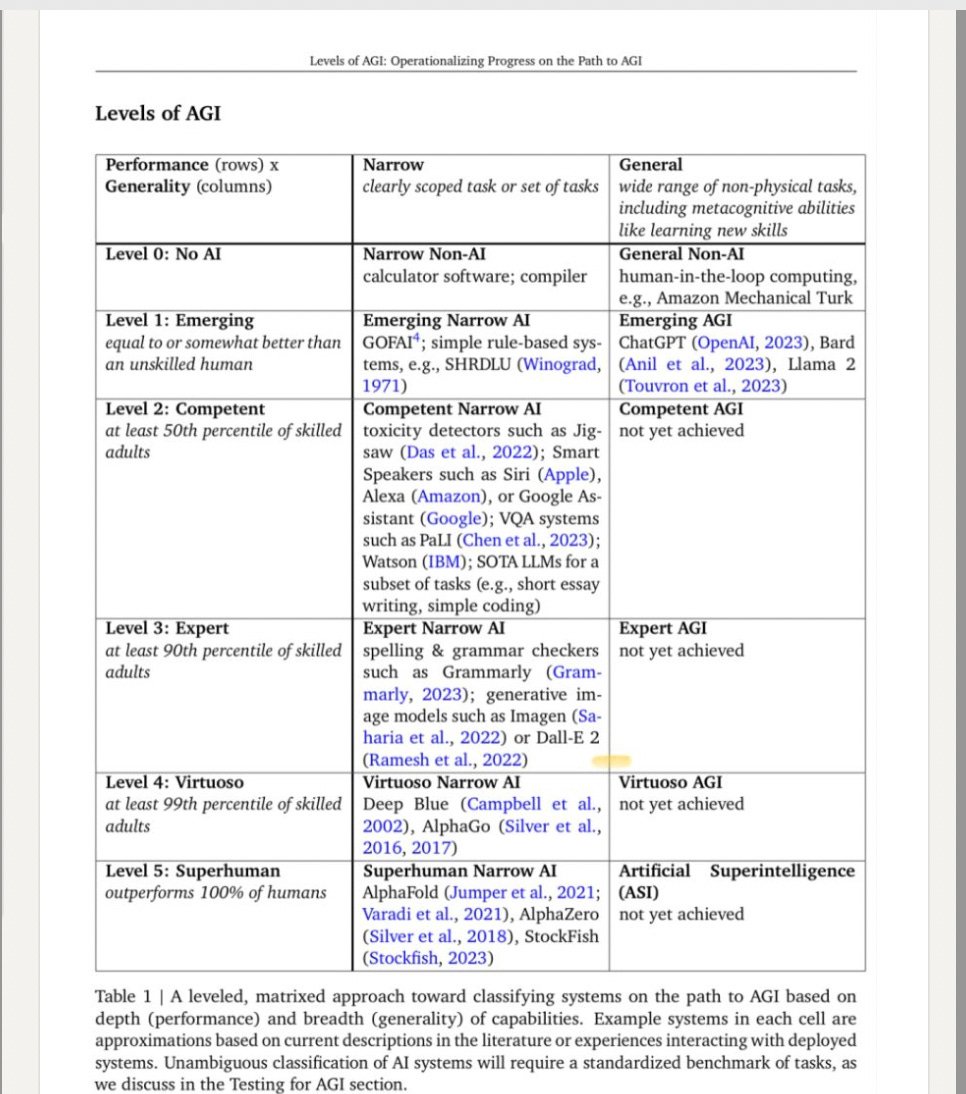

AGI is a concept that was conceived as a way to determine whether AIs are “generally” as smart as, or a bit smarter than, humans (Sam Altman’s original definition). Sébastien Bubeck et al. define it as “at or above human-level.” Hal Hodson defines it as “a hypothetical computer program that can perform intellectual tasks as well as, or better than, a human.”

Some refer to this as the “singularity,” which has been defined both as the moment AI reaches and surpasses human-level intelligence and as the point after which everything becomes unpredictable.

Much effort has historically gone into defining “intelligence,” but “generally as smart” is hard to pin down. Some people are smarter than others in different ways, and we all have our own specialized knowledge. So, as Yann LeCun points out, since it’s hard to say what the term really means, he doesn’t believe in AGI (which some people wrongly take to mean he doesn’t believe computers will become smarter than us).

Recently, Sam Altman has also said we need to start getting more specific, because we are getting closer and the details matter. OpenAI’s Charter gives a more focused definition:

OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.

Before the firing debacle, OpenAI clarified its procedure for determining whether it had created an AGI: its board would make that determination.

Generally, I think there are two ways you can get more specific about what AGI is.

“All Domains”

The first way to be more specific is to ask what the ‘domains’ are in which humans are intelligent, and then ask whether AIs are intelligent in all of those domains.

First, what are the domains?

Philosopher and AI scientist Joscha Bach lays out the following domains, based on his research:

Language

Knowledge

Reasoning

Learning

Perception

Representation

Autonomy

Collaboration

Embodiment (robots)

People may squabble over the exact domains and add or subtract some (there is a specific controversy over sentience and consciousness that I’ll cover below), but I think the list generally makes sense and is similar to what you can find elsewhere.

When will we get there? Here’s some speculation from the leading AI scientists in the world.

A five-year timeline seems ambitious, since current AI is generally considered smart only in the first two domains (language and knowledge). It arguably has only limited reasoning abilities (“a little bit,” per Altman: chain-of-thought reasoning, but not abstract reasoning), and not yet the kind that would enable it to make scientific discoveries. Think about what it will be able to do when it can make its own discoveries…

Do We Need it to Be Fully Intelligent?

As noted, AIs can already perform better than unskilled workers, according to a recent (November 4, 2023) paper (see the call center example above), and they can outperform even the best humans in some specific areas.

We do need AI to improve its intelligence, but we have (or are close to having) AI agents that can operate autonomously (reminder: “autonomy” is an intelligence domain) when given a goal (more on that below).

Maybe they can even work together.

Combined, perhaps they could be an AGI?

Also, note that “collaboration” is another domain of intelligence, and many studies are being published on how swarms of agents can collaborate.

[If you want to learn more about agents and the reasoning abilities of AIs, we cover them in detail in our report and I explained and unpacked the significance of agents for education in this blog post. This is a technology that is already here in a nascent form].

Anyhow, multiple AI agents working together could get us to AGI faster than originally anticipated, as “swarms” of agents acting together could effectively represent “general” intelligence. This idea is unpacked in more detail in a recent article in The Atlantic.

Beyond multiple agents working together, there are other reasons we may be getting closer to AGI, defined as being intelligent in all domains in which humans are intelligent.

First, while large advances are more likely to accumulate gradually over time, there could also be radical developments that no one anticipates.

Second, you never know what someone else knows or what they are working on. In the 1930s, most physicists did not think a heavy nucleus could be split into large fragments. Yet in late 1938, chemists Otto Hahn and Fritz Strassmann in Berlin were conducting experiments that led to the discovery of nuclear fission. Lise Meitner and Otto Robert Frisch, who were part of the same scientific community, interpreted Hahn and Strassmann’s experimental results and realized that nuclear fission was possible, and in January 1939 Niels Bohr brought the news to a conference in Washington, D.C.

Third, we might not know what others are sitting on. We know that OpenAI is deliberately releasing its technology slowly to give society time to adapt, and that GPT-4 was ready in August 2022 but not released until March 2023. We know this because Khan Academy signed an NDA with OpenAI over GPT-4 in August 2022. I don’t think they are sitting on AGI, but it is likely their AIs have more capabilities than what we currently have access to.

Regardless, many experts say we are two or three major advances away from AGI (the Transformer, which made these language models possible, is an example of a major advance), and we obviously don’t know when we (or China) will hit those advances.

We flesh all this out in more detail in our report.

*Capabilities*

A different way to approach this is to look at what AI can do.

OpenAI, including Altman and Ilya Sutskever (OpenAI’s Chief Scientist, who is now getting a lot of press as one of the board members who originally voted to oust Altman and then changed his mind), has argued that AGI can be defined by capabilities.

In defining AGI, they stress the capability to do people’s jobs as well as, or slightly better than, people can. They did not say AGI would do our jobs perfectly; none of us are perfect. Based on their definition, AGI only has to be able to do all or nearly all of our jobs better than most of us (see the call center example above).

Nisha Talagala highlights this capabilities-based approach in a recent Forbes article. Other highly qualified individuals argue that, from the capabilities perspective, AGI is already here.

This is a big deal because when an AI can do a person’s job better than they can, even slightly better, employers will replace that individual with an AI. Why wouldn’t they? Their job is to make money, and if they are corporations, they have an obligation to shareholders to do that. Now, they may reassign that person to another job that requires a human to complete, but over time the number of jobs that need to be done by humans will decline.

Some argue that AIs won’t completely replace us; they’ll just extend our abilities. Maybe, but remember that by definition AGI means they can do everything we can, so I’m not sure what most of us will still do in a world of AGI, at least in the suite of jobs that are available to knowledge workers now.

And if you are still doing something, what is that work worth? With AI, many more people can do jobs that only some people can do without it. Will almost anyone be able to read cancer screenings with the help of AI?

When will autonomous agents have the capability to do a lot of jobs? 3-5 years.

It is important to note that Mustafa Suleyman is not trying to define AGI but is focused exclusively on capabilities. Others use the capabilities approach to define AGI. In a recent podcast, Sutskever said these capable AI agents will be able to replace many jobs soon.

What about “consciousness” or “sentience”?

Consciousness is a broader, more complex concept. It generally refers to an entity's awareness of its thoughts, feelings, and environment. Consciousness often includes self-awareness— the recognition of oneself as a separate entity from others and the environment. It also implies a continuous narrative or stream of consciousness that connects past, present, and future. (Mok)

IBM shares this more aggressive definition of Strong AI: the machine would require an intelligence equal to humans; it would have a self-aware consciousness that has the ability to solve problems, learn, and plan for the future. Strong AI aims to create intelligent machines that are indistinguishable from the human mind (IBM).

I don’t think it’s credible (based on the overwhelming view of AI scientists) that any current AI is sentient or conscious. Blake Lemoine, a Google engineer, did make that argument in a Washington Post interview, but he was subsequently fired.

Nick Bostrom, a philosopher who has studied AI for many years, was quoted in the New York Times arguing that it may have “sparks” of sentience.

The debate over this is difficult to evaluate because neither sentience nor consciousness is a scientific concept that can be objectively assessed, and we can’t know for sure whether any other person, let alone any other animal, is sentient or conscious. So some people argue that if we think an AI is sentient or conscious, maybe it is, and that the way AIs have been interacting with us suggests this may be possible. Certainly, the widespread appearance of consciousness in these machines will have effects on society, if for no other reason than that millions of people are falling in love with them.

The development of “actual consciousness” (whatever that is) may be more likely once we have meaningful developments in quantum computing.

Arguably, an AI doesn’t need to have sentience or consciousness to be considered an AGI.

Tom Everitt, an AGI safety researcher at DeepMind, Google's AI division, says machines don't need a sense of self to have superintelligence. "I think one of the most common misconceptions is that 'consciousness' is something that is necessary for intelligence," Everitt told Insider. "A model being 'self-aware' need not be a requisite to these models matching or augmenting human-level intelligence." He defines AGI as AI systems that can solve any cognitive or human task in ways that are not limited to how they are trained (Business Insider).

It certainly doesn’t need to be conscious to transform our world.

The Transition to AGI

AI isn’t all or nothing. As Sam Altman recently noted, we won’t suddenly have AGI; we will reach it by building and gradually releasing technologies along a continuum. AIs have knowledge (now), language abilities (now), reasoning abilities (limited now, but growing), learning abilities (limited now, but growing), perception abilities (in the early stages), autonomy (in the early stages, but growing), and collaboration abilities (in the early stages, but growing). It will be longer until AIs are embodied and are sentient and/or conscious (and we may never achieve those). But developing intelligence in all or nearly all of these domains in computers will fundamentally change the world, and it is inevitable; as advances are made in each domain, there will be tremendous consequences for society. If AI developed consciousness, that would be even more dramatic.

What is ASI?

Definitions of “AGI” include the idea that it will be smarter than us, but only by some limited (but undefined) amount.

ASI stands for “artificial superintelligence,” which means it will be massively smarter than us and on a whole other level.

When will we achieve this? 2045

Additional

I unpack all of this in more detail here, and I also explain some related ideas.

What Does This Mean for Education?

Most future work will be done in cooperation with AIs. It’s hard to believe anyone will work without an AI outside of heavy labor jobs.

Arguably, students should start preparing for this world (AI literacy).

AI will likely do a lot of the “knowledge” work and the value of traditional “intelligence” will collapse. Companies will be able to buy intelligence for a small fraction of what they have to pay any of us to work.

Schools need alternative approaches to education (we have some ideas in our report, many of which schools are already familiar with). This isn’t about “plagiarism” or magic lesson plans. This is about a completely transformed world and an education system that will need to support it. Fortunately, as noted, schools already have a lot of experience with what is necessary.

Stuart Russell has a few suggestions [and, yes, we may need all of this in 5 years or less].

What Does It Mean for the World?

This is a good classroom exercise.

Food for thought from Ilya: