We Have "AGI-Like" AI

TLDR

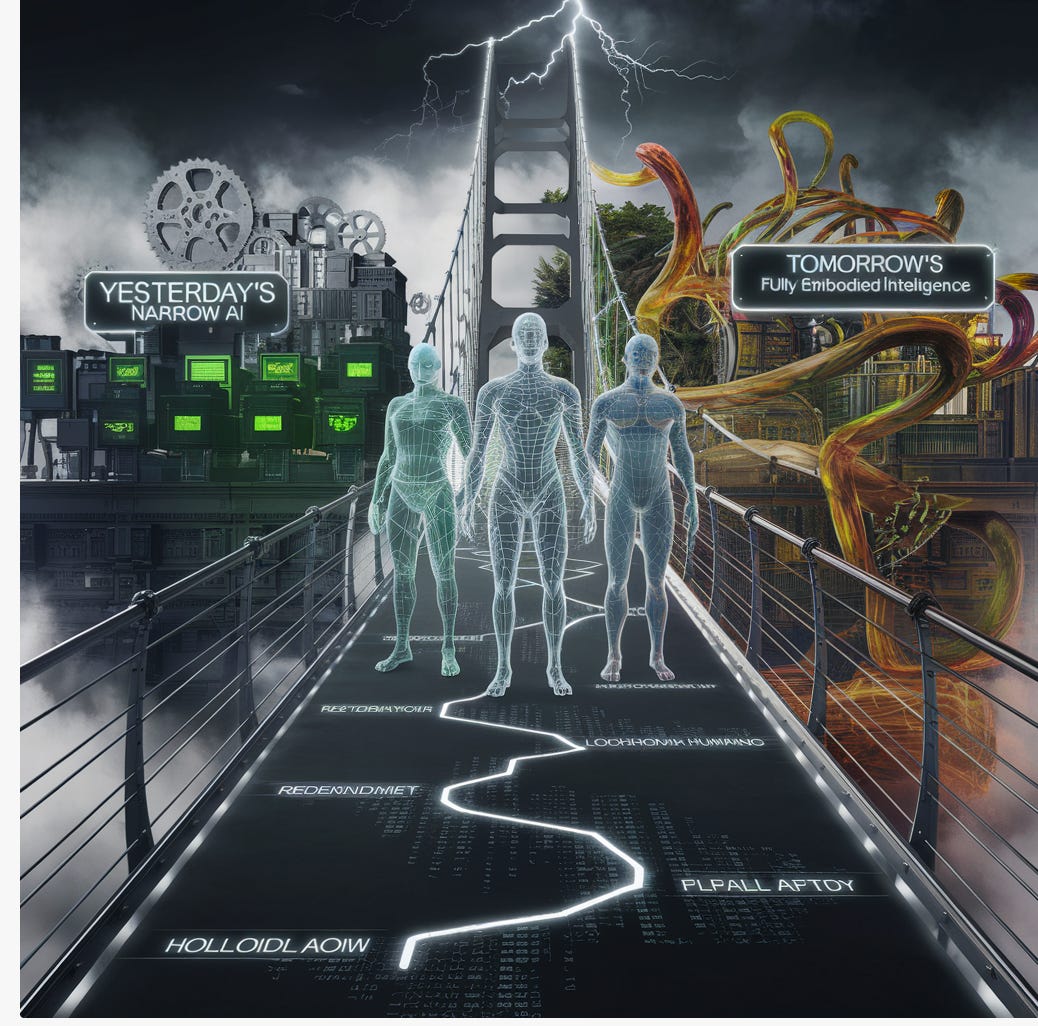

Today’s frontier AGI-like models (ChatGPT o3, Gemini 2.5 Pro, maybe Claude 3.7) sit between yesterday’s narrow AIs and tomorrow’s fully embodied intelligences. They carry enough breadth to disrupt universities, law firms, call centers, and creative studios; enough jaggedness to surprise and sometimes mislead us; and enough unfinished business in reasoning, memory, long-horizon planning, and physical agency that calling them “AGI” outright would be hubris. Using the “-like” suffix keeps our language, and our governance, synced to reality: celebratory about the leaps, sober about the cliffs, and vigilant about the road still ahead. It also encourages us to focus on how human-like the frontier models are and on what we still need to do to get to full human-level intelligence.

Instead of debating whether current AI models are or are not AGI, let’s debate how AGI-like they are. That will raise awareness and help us formulate actionable changes.

Current Nomenclature — What is AGI?

The phrase “artificial general intelligence (AGI)” has escaped research papers and migrated into Senate hearings, board-room decks, and dinner-table debates. Everywhere I turn, someone is asking whether we’ve “hit AGI yet.”

But what does AGI mean? Generally, it refers to AI systems that have “human-level” intelligence.

What does human-level intelligence include? It includes the ability to communicate in natural language (AI has this), short-term memory (AI arguably has this), durable long-term memory (AI is developing this), causal reasoning (AI seems to be capable of basic deductive reasoning), hierarchical planning (AI can plan, but not very far ahead), rich multimodal perception (AI has this), the ability to teach itself new skills on the fly (maybe), the ability to create new knowledge (not there yet), and the ability to generalize from one domain to another (not there yet).

Some influential organizations and individuals have more specific ideas about what AGI is. OpenAI defines it as a highly autonomous system that matches or outperforms humans at most economically or cognitively valuable tasks; that is, breadth of competence, not one flashy benchmark (OpenAI; Wikipedia).

Across the AI field, even the name of our destination is contested. Anthropic’s co-founder Dario Amodei, by contrast, usually says “powerful AI,” cautioning that capabilities will arrive in waves, not as a single “Eureka, we hit AGI!” moment (robtyrie.medium.com).

Meta’s Yann LeCun dislikes the AGI label altogether and has rebranded the goal as AMI (advanced machine intelligence), pronounced ami, “friend” in French, to signal a more cooperative, incremental vision (sixfivemedia.com). Whatever the banner, everyone agrees we are converging on something far broader than today’s task-specific models, yet still short of a robot nanny you would trust with your toddler. Calling that interim destination “AGI-like” keeps the rhetoric honest.

The Case for “AGI-Like”

When I call a system AGI-like, I am not being coy; I am acknowledging an awkward adolescence. These models have surged far enough to reshape white-collar work, education, and even international security policy (autonomous warfare), yet they still lack the depth, grounding, and self-correction that would let us hand over the full car keys of civilization.

Think of them as brilliant exchange students who finish the homework before class starts, speak every language in the cafeteria, and tutor your kids in calculus after dinner, then forget where they left their shoes and occasionally insist that Toledo is the capital of Canada. They are like prodigies, but not yet fully formed adults.

Insisting on the “-like” reminds me, and hopefully my audience, to keep two thoughts in tension: awe at the capabilities that have already arrived, and sobriety about the engineering—and the ethics—that still separate us from true generality. It is a linguistic speed bump, slowing the hype just enough to let nuance cross the road.

Am I Saying Current Models Are “AGI-Like”?

When I use the hyphenated label, I’m acknowledging that the latest systems unmistakably resemble the broad, multi-talented engine the OpenAI Charter describes (“highly autonomous systems that outperform humans at most economically valuable work”), yet still bat below the human league average on crucial innings of cognition. They can draft legal briefs, debug code, summarize a 900-page report, or read radiology images with near-expert accuracy, but ask them to keep track of a long conversation, solve a tricky spatial-reasoning puzzle, or exercise common-sense judgment in a real-world setting and the mask slips.

That gap in the box score, however, is closing fast. Only a year ago a 128K context window felt lavish; the just-released GPT-4.1 juggles one million tokens (roughly the text of War and Peace plus Moby-Dick) while showing sharper long-context reasoning and better self-correction (The Verge). That’s a lot of short-term memory. Gemini 2.5 Pro’s window is likewise in the million-token range.

Memory architectures such as HippoRAG and retrieval-augmented agents are patching today’s gaps in longer-term recall, and richer multimodal pipelines are giving the models eyes, ears, and nascent situational awareness.

So yes—I call them AGI-like because they are already above human in some lanes, below human in others, and sprinting toward parity+ in the rest. The hyphen keeps me honest about today’s shortcomings while refusing to ignore how quickly tomorrow’s scoreboard could flip in the models’ favor.

Why “AGI-like” Marks a Critical Understanding

What are the benefits of thinking of today’s frontier models as AGI-like?

First, current models provide only partial coverage of human cognition: they are dazzling at language, code, and pattern recognition but shaky at grounding, embodiment, and open-ended invention. They are not yet AGI.

Second, there is no agreed-upon bar exam; benchmarks proliferate faster than consensus, so declaring “we have AGI” would be premature no matter who wins the next leaderboard.

Third, loose talk of full AGI distorts risk communication: regulators, CEOs, and the public either panic or relax when they should be asking sharper questions. Many think, “Well, we don’t have AGI yet, so we don’t have to worry.”

Fourth, English already has a useful suffix for analogies, “-like,” which we use for “human-like,” “life-like,” or “liquid-crystal-like” technologies that imitate key traits without actually being the thing.

Fifth, focusing on “AGI-like” progress clarifies research priorities: it invites a sober discussion of which capability gaps remain, instead of treating the finish line as a mystery.

Sixth, it’s just accurate. Researchers such as Ethan Mollick call today’s capability landscape a “jagged frontier”: models crush PhD-level logic puzzles one minute and bungle kindergarten math the next (Axios). That uneven contour is exactly what we should expect from systems that are AGI-like: impressive, but still spiky rather than smooth across the intelligence spectrum.

Seventh, because large models live inside servers, they can already complete essentially all academic work that happens on a screen: writing essays, solving equations, synthesizing literature reviews, designing software. In that narrow yet economically huge slice of the world they behave like a virtual AGI (a “brain in a jar,” as one OpenAI forum commenter put it) while still lacking the sensors, dexterity, and persistence you would need to leave a child in their care (OpenAI Community). I think this is why some academics, such as Tyler Cowen, think current systems are AGI.

Eighth, even before they walk or grasp, AGI-like systems can automate or 10× a vast share of knowledge labor. McKinsey estimates that by 2030 tasks worth up to 30 percent of U.S. working hours could be automated, a trend now “accelerated by generative AI” (McKinsey & Company). That translates into millions of displaced or transformed jobs and trillions in productivity gains without waiting for humanoid robots. In my opinion, McKinsey’s analysis even underestimates the 2030 capabilities of the models.

Ninth, public debate often flips between “nothing to worry about until real AGI” and “AGI tomorrow, everyone panic.” Both extremes mislead. An AGI-like framing reminds policymakers, educators, and businesses that the transition costs and safety questions start well before the mythical all-capable machine arrives, and that pretending otherwise courts complacency.

Tenth, it shifts our attention to present superpowers. If we talk about systems as AGI-like, we naturally examine what they already do better than any human: absorb a trillion-token knowledge base, reason at silicon speed, draft code or prose in seconds, and persuade through fluent natural language. By tracking those concrete affordances—rather than waiting for a Hollywood-level reveal—we can build guard-rails, curricula, and business processes that harness the upside and blunt the downside.

Eleventh, if we say it’s “AGI-like,” we debate the details.

__

Anyhow, instead of AGI Yes/No, let’s focus on how AGI-like the models are and what that means.

__

I asked ChatGPT o3 for some title options for this piece. This is one it gave me:

“Between Spark and Singularity: Mapping the AGI-Like Landscape”

If you’ve been following the debate, you know that is a clever title.