Upping the Game: Strengthening Critical Thinking in Debate in the World of AI

Pushing debate students to think critically in the world of AI means encouraging them to use AI tools properly and demanding more of them. They will be rewarded with wins and greater skills.

[See also: Beyond Algorithmic Solutions: The Significance of Academic Debate for Learning Assessment and Skill Cultivation in the AI World]

As in other parts of K-12 education and academia, many debate coaches worry that AI will replace students’ ability to think critically. They worry that students will simply use AIs for much of their debate work and that the critical thinking skills debate helps build will be lost. This is similar to the concern coaches have always had about debaters who rely on coach-produced preparation materials and/or students who “leech” off work done by their teammates.

This is not an unfair concern, and resolving it requires changing preparation assignments and strengthening the expectations we have of our students, while permitting and even encouraging students to use AIs for “baseline” work. When used properly, AIs can raise the quality of the “game” and strengthen critical thinking, the same way large teams and lots of assistant debate coaches always have. I told my own debaters to think of AIs as additional debaters and coaches who are part of the team.

There are different formats of competitive high school debate, but let me give a couple of examples of how this would work using two popular formats: Congressional Debate and Public Forum Debate.

In Congressional Debate, schools submit various pieces of legislation that are considered by tournament officials. Tournament officials then select pieces of legislation and put them together in a packet, which is referred to as a docket (sample).

Participants receive a copy of the legislation in advance of the competition and then brainstorm “affirmative and negative arguments” and “find supporting evidence through research” (National Speech and Debate Association).

Common “homework” assignments include dividing the bills between the students and asking them to prepare summaries, lists of pro/con arguments, and questions that participants may want to ask in each of the model Congressional Debates they will participate in.

AIs can complete those tasks with ease.

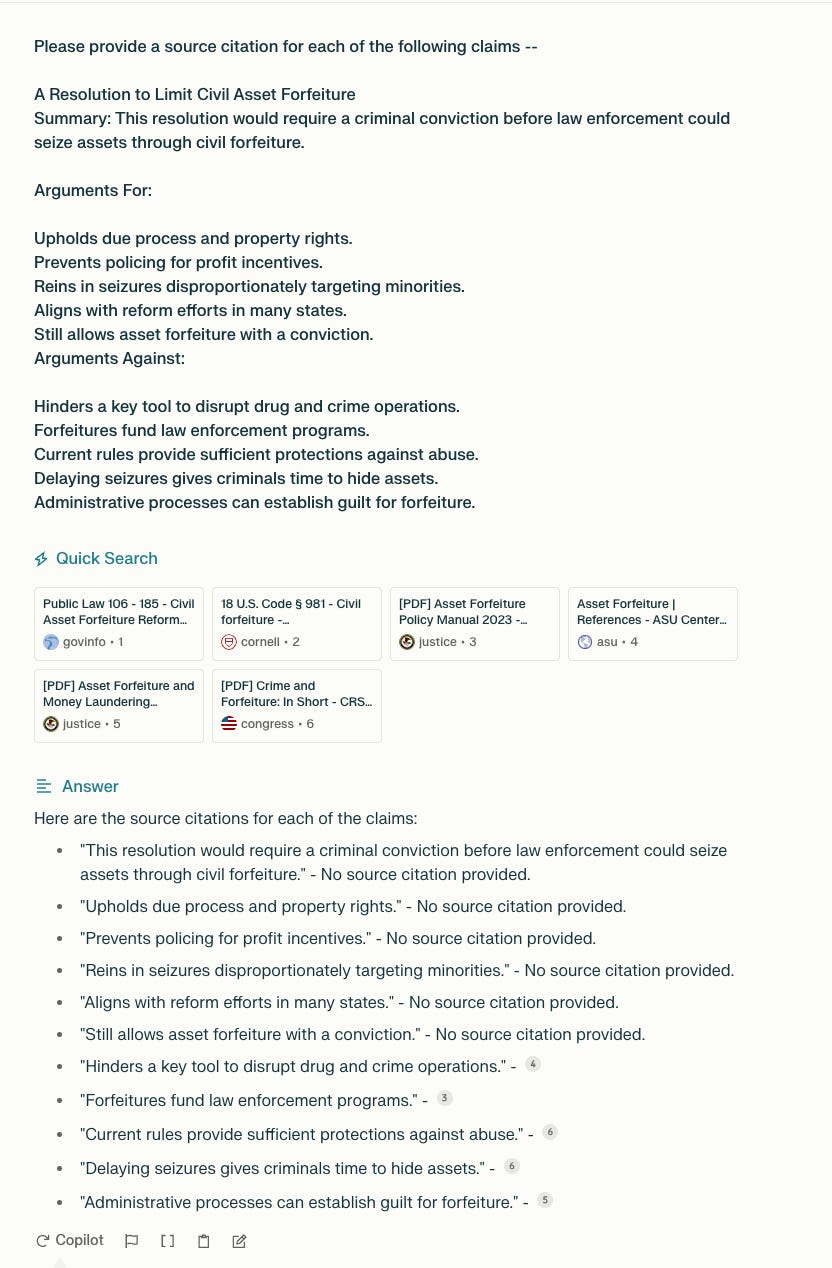

I first loaded the sample legislation docket into Anthropic’s Claude and asked it to generate a summary of each bill and a list of questions to ask.

As you can see from these samples, it did a pretty good job.

I then asked Perplexity.ai, which integrates AIs and web search, to produce source citations.

It was able to produce source citations for approximately half of the claims. If I had used Perplexity Pro ($20/month), it may have done better.

I did try this in Bing, which has some ChatGPT integration, and it didn’t do as well. It only produced citations for two of the claims.

Of course, we are really only in the 1st inning of this “stuff.” Gemini, Google’s answer to ChatGPT/Claude/etc., will be out soon, and many anticipate that it will fully integrate web search and fact-check itself. Regardless of whether or not that is true, “evidence” references will be available in the near future.

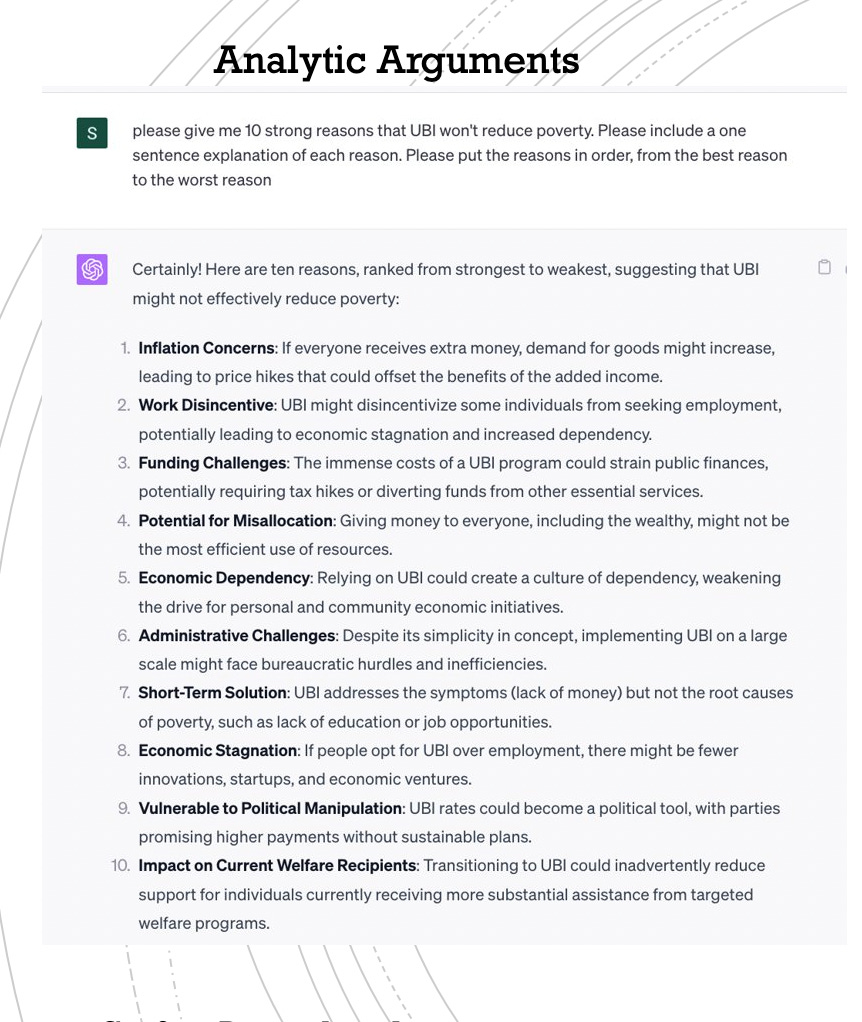

AIs can also complete basic work in other speech and debate events. For example, universal basic income has been debated in the past in Public Forum debate and is currently being debated in Policy Debate.

AIs (these images come from ChatGPT4) can be really helpful to students.

It can generate arguments.

It can generate question lists.

And it can not only generate arguments, but it can also generate responses and counter-responses, organizing them into a table.

Here is a sample of how the AI tools can perform the tasks identified above in an integrated way.

First, I uploaded a sample speech on a current Public Forum topic that had two major arguments in favor of increasing US military presence in the Arctic (deter Russia and respond to oil spills) and I asked Claude to outline the major claims.

I then asked Claude to put a response next to the claim in a table.

And, yes, I could have asked Perplexity.ai, Bing, and maybe soon, Gemini/Google, for evidence citations to support the responses.

AIs can do this; that’s a reality. And these are just “off the shelf,” general purpose AIs. Students with even a small amount of technological knowledge can set these AIs up to do this, either through the settings functions in these tools or by building their own simple applications that use APIs from these tools.
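To make the “simple application” idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not any particular tool’s API: the function names (`build_prep_prompt`, `prepare`, `fake_model`) are hypothetical, and `ask_model` stands in for whatever call a student’s chosen AI service actually provides.

```python
from typing import Callable


def build_prep_prompt(topic: str, side: str, n_arguments: int = 10) -> str:
    """Assemble a reusable prompt asking for arguments, responses,
    and counter-responses organized into a table."""
    return (
        "You are helping a high school debater prepare.\n"
        f"Topic: {topic}\n"
        f"List {n_arguments} arguments for the {side} side.\n"
        "For each argument, give one likely response and one counter-response.\n"
        "Format the answer as a table with the columns: "
        "Argument | Response | Counter-response."
    )


def prepare(topic: str, side: str, ask_model: Callable[[str], str],
            n_arguments: int = 10) -> str:
    """Send the prompt to a model. `ask_model` is a placeholder (assumption):
    any function that takes a prompt string and returns the model's text."""
    return ask_model(build_prep_prompt(topic, side, n_arguments))


def fake_model(prompt: str) -> str:
    """Stand-in used only for this demonstration; it does not call any real AI."""
    return f"[model output for a prompt of {len(prompt)} characters]"


if __name__ == "__main__":
    print(build_prep_prompt("universal basic income", "affirmative"))
    print(prepare("universal basic income", "affirmative", fake_model))
```

Keeping the model call behind a single function means a student could swap in whichever service their school or parents permit without changing the rest of the program.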

So, the question for debate coaches, like all teachers, is what should we ask our students to do that takes this as a given and helps advance them to the next level? Pretending that debaters aren’t using AIs to do their homework/prepare them for debates is not only a failed strategy but puts them at a competitive disadvantage relative to peers who are using it in such capacity.

___

I think the best approach is to suggest to students that they use tools that their parents permit them to use at home or that their schools allow them to use. K-12 school policies (both public and private) vary a lot: some ban AI tools on school computers/networks; some don’t ban them but don’t encourage their use, at least of particular apps; others allow and encourage in-school use. The reality, though, is that most students are using these tools in at least some capacity to prepare for debates and to help with homework.

I think that ALL of the following work in a way that encourages critical thinking (at least until the machines get better, though they’d have to be specifically trained in academic debate styles and topics to be able to do these well).

Assignment(s):

(1) Use AI to generate a list of 10 arguments in favor of _____. Then rank the arguments in order from best to worst and come to class ready to explain your ranking and the different factors that influenced it.

The factors that could impact the ranking need to be taught to students. Do the arguments have reasons behind them? What arguments need evidentiary support? What arguments are more likely to be persuasive to most judges? Are there any judges who judge frequently who are likely to be persuaded by particular arguments? Are any of the arguments so easy to answer that they shouldn’t be made in the first place?

(2) Use AI to generate a list of 10 questions about _____. Then rank the questions in order from best to worst (generally) and come to class ready to explain your ranking and the different factors that influenced it.

(2a) Certain questions are obviously more relevant in certain situations than others, so be prepared to discuss which questions you would use in which situations.

(2b) Present how your opponent might respond to your question (you might want to use AI for this), what follow-up questions you might ask based on that potential response, and how you might (if possible) be able to use your opponent’s response against them in a debate.

(2c) Present what other questions you might want to ask and why.

(3) Use AIs to suggest evidence citations that support the arguments you generate in (1). Be prepared to present a couple of the sources and why they do or do not support the claim.

(3a) Find some additional evidence sources and be prepared to present on those (you won’t really know how they found them, but who cares: they are gathering more evidence!)

(3b) Be prepared to explain at least one weakness in the evidence cited by the AI. This will not only help students see some limitations of work done by AIs but also get them thinking about identifying weaknesses in their opponent’s evidence (students should be taught to identify weaknesses).

(4) Have the students use (if permitted by the school) AI tools in class to quickly assemble arguments for a debate (they should be able to do this in 10 minutes or less) and have them do a quick practice debate. They will then be able to see where the initial arguments are strong and where they are weak and build from there.

(5) Have students use AI to generate a list of arguments and then the responses. Students should be prepared to discuss which responses were good and which were not so good, and to explain why.

(6) As a teacher, use your own AI to generate a list of arguments and responses. In class, have the students generate the third level of response. They could do this in a written and/or verbal form. You could do the same with questions.

(7) Use AIs to generate research but have students highlight or underline the key part of the research that supports the claim and explain why.

(8) Have students practice crossfire (the questioning period in debates) with AIs.

(9) Make arguments better. This is a sample debate theory argument related to conditionality good/bad, a popular argument in policy and Lincoln-Douglas debate.

Teachers could give this to students and ask them to do any of the following -

Shorten it (by reducing the explanation of each point or the number of points).

Improve the wording of the argument.

Students should be instructed to do this both with AIs and on their own so that they can both think through it and see any potential differences. They could then compare the changes made by the AI to the changes they made themselves and discuss how the two versions differ.

[As a side note, I haven’t done this yet, but it might be interesting to have a student upload a judge’s philosophy and have the AI write a theory block based on the judge’s philosophy].

(10) After reading this, how do you think you could help students use AI tools to strengthen their critical thinking?

Notice that suggestions 1-9 all include students presenting/performing something in-class and require teacher and student interaction throughout the class.

___

Ultimately, I don’t think that AI presents any real threat to what we hope our students will learn through debating.

In debates, students have to present ideas, adapt any pre-prepared materials to the specifics of their opponent’s arguments, adapt to judges’ preferences or marshal peer support in Congressional Debate, defend their own evidence, refute the specifics of their opponent’s evidence, make choices about which arguments to win the debate on, figure out which of their opponent’s arguments are most threatening to their position, and determine where they want to agree and where they want to disagree. They can have an AI (or another teammate or coach) produce all of the material they need for their debates, but if they can’t do these things that an AI can’t (yet) do, they won’t win their debates.

What I do think is important is that we help students use AIs properly to strengthen their own preparation, which will, in turn, strengthen their own debating abilities and what they gain from their participation in debate, including critical thinking skills. Like any teammate or coach, when properly utilized, AIs give students a “leg up” and make them better, the same way that AIs are boosting worker productivity and the quality of workers’ output.

Students will need coaches/teachers who can support these efforts and raise the bar for what students need to do, including potentially changing homework assignments. Without these changes, students will simply use AIs to complete old-fashioned/pre-AI World debate work and will be worse off for it. That’s a reality. We can, and should, do better.
