Last spring, I tried to provide a comprehensive analysis of AI developments each week, but those posts took more time than I had, and they were too long for people to read.

Nonetheless, I think there are important developments for educators to understand, and I hope they start to see the significance of the cumulative developments. To support that, I’m going to do a “Tuesday Ten,” where I briefly cover approximately 10 AI developments from the last week.

Individual bots. At last Monday’s Developers’ Conference, OpenAI made it very simple for anyone with a paid ChatGPT4 account to develop their own bot that they can share with a limited number of friends or all other GPT4 subscribers (wider sharing is anticipated at the beginning of December). These bots open up a number of learning opportunities for students and can also be set up with instructions to help automate simple daily tasks such as lesson plans. In the latter way, they can be the foundation for having your own personal assistant and for building out your own autonomous agents that will transform education and industry. As Yann LeCun has been emphasizing lately, we will soon all work with and interact with AIs as part of our daily lives, and these are the foundations for that. Other popular opportunities to develop these types of bots include Poe and Playlab. No technology or coding experience is necessary. I wrote more about this here.

This is not to say this is ready to go now (they have holes that are being plugged; see Furze), but these are the foundations, and the bot I started building for debaters works reasonably well, even though it was delayed by a human variable: a bad head cold :).

So, yes, it obviously pulled from my training data to help debaters develop arguments and organize ideas. More is coming as I have time over Thanksgiving.

A data scientist GPT without any additional training scored in the top 50% of all data scientists, so I’m hopeful.

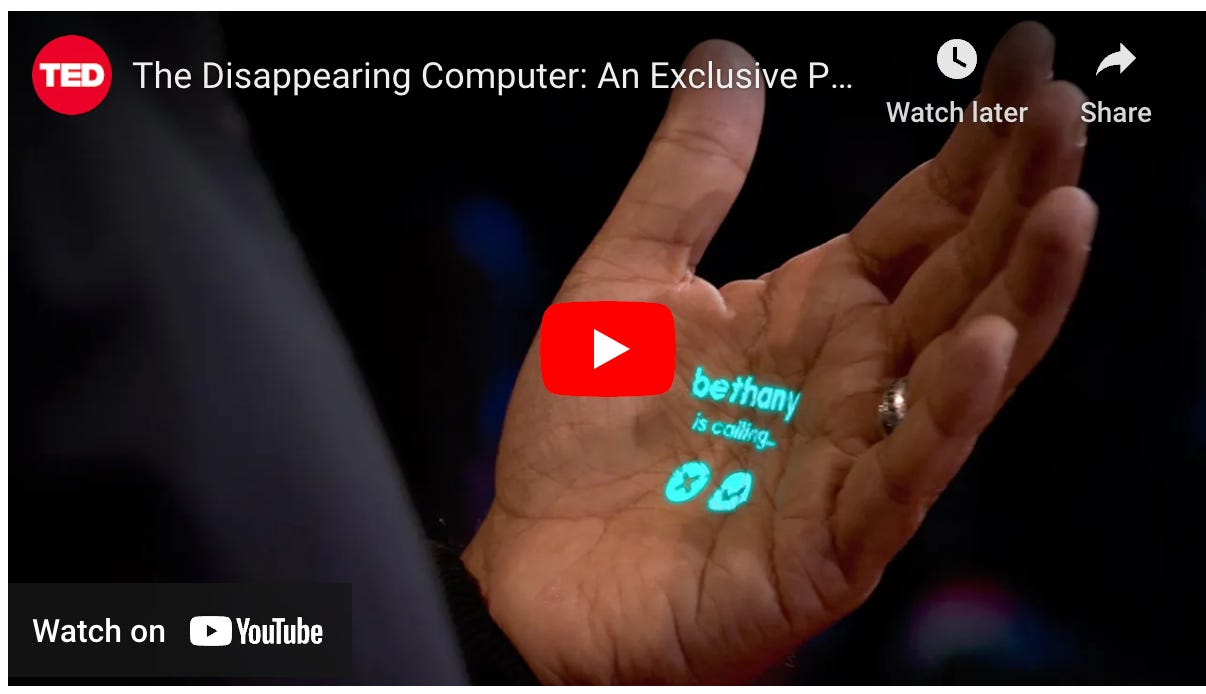

The Pin. Last week, Humane released its AI Pin.

The AI pin is a small, wearable device that can be activated to make and receive calls; write, receive, and search texts; take photos; instantly translate speech between individuals; keep a record of one’s day; and function as another way to interact with your emerging AI assistant or agent (see above). Venture Beat article on the pin.

OpenAI’s CTO recently said they want to get society to move away from keyboards, and this is the first step. We’ll see if OpenAI eventually develops a similar device.

Bots/assistants/agents/pins — Yes, the interaction of these is all a bit ambiguous at the moment, but these are the foundations of the AIs that will support us in our personal lives, learning, and work all day every day.

Image to 3D. As part of the emerging trend of text-to-text, text-to-image, text-to-video, etc., we now have image-to-3D images. Adobe now has this, and so does Stable Diffusion.

[Photo only; to play the video, click on the “Stable Diffusion” link above].

Grok (and Olympus?). Elon Musk’s xAI released its own LLM, Grok, last week (it’s still in beta but will be available to Twitter subscribers soon). The model was developed in 2 months and, on some metrics, is outperformed only by PaLM 2 (Google), ChatGPT4, and Claude 2 (Anthropic). So, yes, it’s arguably already better than the free model many people are using (ChatGPT3.5). xAI has access to a lot of data, including all Twitter (now “X”) “first person” data and all of the visual data collected by Tesla for years. Developing and/or integrating models that have “vision” and can fully understand the world the way a human does is arguably critical to achieving human-level AI. Amazon is reportedly developing its own model, Olympus.

I bring this up not only to share another model but also to point out how quickly this technology develops and why it is therefore difficult to regulate or police. Many anticipate that in 2 years we’ll be able to run models with capabilities such as Grok and GPT4 off of our own cell phones (or our AI “pins”). There are already hundreds, if not thousands, of smaller models that can run off laptops and cell phones.

Interact with students’ AIs. As individuals develop what are essentially their own AIs and as educational institutions eventually move to their own AI platforms, one big question that will open up is how these different AIs will interact with one another.

Labs.perplexity.ai. There has been a lot of pressure on the developers of the large “frontier” models to develop them in a way that is representative of all individuals and limits harmful output. Well, perplexity.ai just blew the lid off that with their labs.perplexity.ai release where students can prompt it about anything and get what we might think of as unimaginable responses.

Inappropriate images. Basically, there are commercial image generators (not the mainstream, popular ones) that allow you to upload an image of any person and then use AI to produce a fake explicit image of that person. In instances where this has happened (Spain; Westfield, NJ), many found the images to look very real.

I’ve been trying to warn schools about this since last spring, but people are busy. Hopefully, this will motivate people to think ahead about how to handle these situations, as they are going to become more common. Thinking ahead about how you will respond when it happens is an important first step; your community will expect you to respond in some way. See the TapIntoWestfield coverage.

It will also happen on college campuses. 3D imaging and VR will only aggravate the situation.

Deep fakes. On a related note, this problem is getting worse. According to a recent study:

In Experiment 1 (N = 124 adults), alongside our reanalysis of previously published data, we showed that White AI faces are judged as human more often than actual human faces—a phenomenon we term AI hyperrealism.

As the visual datasets of other races expand, this will become a greater problem across the board. I think AI literacy is a (borderline) emergency. People need to understand that we are going to live in a world where we just don’t know what is real and what is fake. [See also: AI-generated fake audio of Germany's top news program "Tagesschau" spreads disinformation; stories like these are a “dime a dozen” nowadays].

The societal consequences are going to be enormous, and they are going to be greater if people do not understand what is going on.

Start embracing AI. AI is in your school. It’s everywhere. Your students and faculty are using it, even if you think they aren’t. Getting a low score on an AI-writing detector test doesn’t mean a paper wasn’t written with AI. No one has any magic answers for you that they are hiding. State education associations and university accreditation councils don’t have any magic manuals that they are going to distribute about how to manage and use this technology. Dive in, and you’ll soon know as much as they do, or more, as a lot of the stuff I’ve seen come out lately is either dated (e.g., the claim that “all the training is on English text,” despite Japanese image and text models, Chinese models, and Meta’s translation work), horribly misinformed, or adopts a definitive view on a perspective that is not widely shared by AI scientists (e.g., many leading AI scientists do not believe that it is only a stochastic parrot, and even most (maybe all) of those who do believe it currently is such a parrot also believe it will eventually develop human-level intelligence).

Two boxes. In my May Cottesmore presentation, I put up two boxes:

(a) Box 1 — How educators can use AI to do what they do now (lesson plans, quizzes, tests, vocabulary lists, etc.)

(b) Box 2 — How the education system needs to change because, in the near future (sort of already), everyone is going to have multiple AIs working with them all day, and the premium on intelligence, especially “knowledge-based” intelligence, is going to decline rapidly. It’s hard to think that significant changes in the education system won’t be needed to accommodate that change.

There is a lot of focus on preparing educators to work in Box 1, which is important, if for no other reason than that they can see the power of even the current but limited technologies, but the hard questions are starting to be about Box 2. I encourage you to start those conversations, as the “ed tech” companies already are, and they’ll be happy to provide the answers and the services if you don’t want to.

Practical suggestion: set up two AI teams in your institution. Team 1 works on Box 1 and Team 2 works on Box 2.