Tuesday's 10 for Educators

GenAI job skills; jobs; LLMs; "World" models; talking with ChatGPT; K-12 usage; Claude upgrades; searching YouTube; Brain to computer without any keyboard; making images better (or worse)

A lot has happened in AI since last Tuesday, but I’ll try to keep this brief.

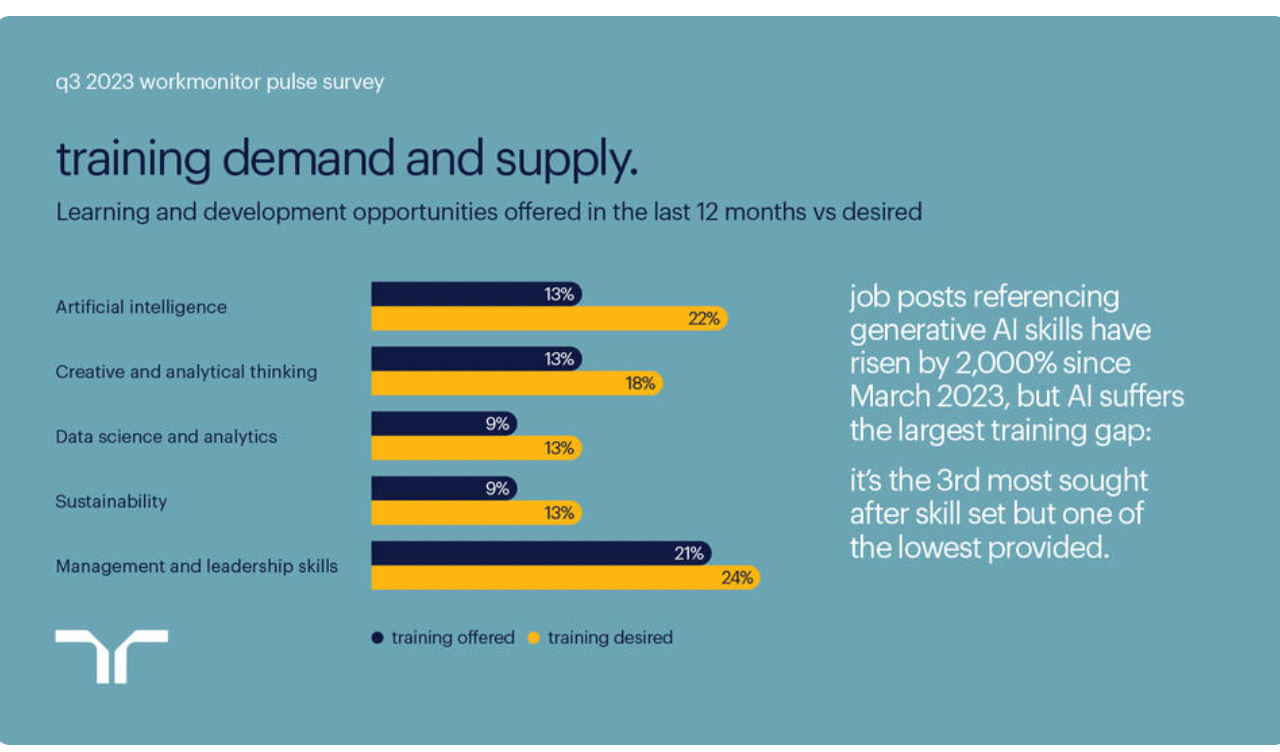

Job skills. One of the most in-demand job skills at the moment is the ability to work with generative AI. If you want to help your students prepare for the world of work, help them learn about generative AI.

[This is from Randstad] Also, see this Forbes article.

This is part of a broad push by employers for high school and college graduates to have more job skills.

Reasoning, planning, and “hype.” If you followed any of the news over the holiday, you may have heard the speculation about “Project Q*” at OpenAI. The “hype” centered on whether OpenAI had made an initial discovery that would allow AIs to reason and plan. It is “hype” in part because, while those two abilities would be incredibly consequential, on their own they would not get us to AGI, and at best it seems the only thing shown was that the approach is possible. It is also “hype” because none of it was confirmed.

That said, all the large AI companies are heavily invested in efforts to develop AIs that can reason and plan, and those advances are expected sometime within the next 2-3 years. That is not hype. There is a full explanation here.

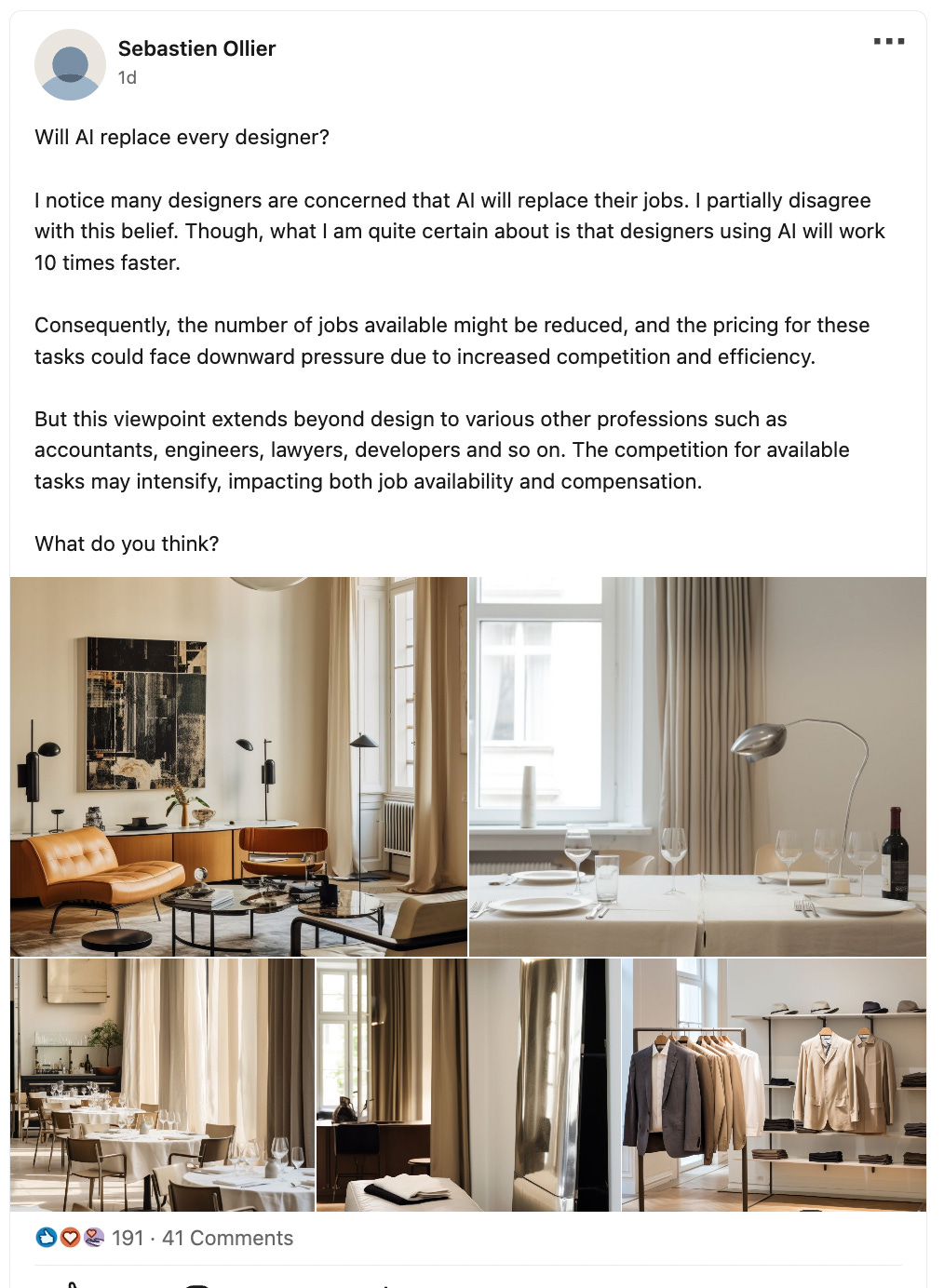

Jobs. Now that AI is a reality, there is a big debate about how it will affect employment. Many AI leaders (Altman, Murati, Hassabis, Musk) believe AI will trigger, at a minimum, short-term job losses. Other AI leaders (Andreessen) and economists (Grennan) believe things will work out, at least over the long term.

We’ll obviously see what happens, but what I don’t think anyone debates is that there will need to be some new jobs, and almost everyone thinks retraining and social support programs will be necessary during the transition.

Bill Gates is talking about a 3-day work week. Jamie Dimon has suggested 3.5 days.

A few concrete examples to think about.

#1 (and, yes, these are AI-generated images)

#2

#3

Understanding LLMs & World Models. Although mine is a minority opinion, I think it is essential for teachers to understand how this technology works and where it is headed so they can make informed decisions about how to use it in the classroom.

There are two videos that are helpful here.

One, Andrej Karpathy released an excellent video on how large language models work, and it includes a short section on agents.

Two, there is a great discussion with Yann LeCun, Sébastien Bubeck, and Tristan Harris about LLMs, how they work, and the efforts being made to develop “world models” that will bring us closer to human-level intelligence.

K-12 usage. New survey research from the U.K. shows how schools are using AI to strengthen instruction. Eighty percent of British teenagers have used generative AI.

__

Those are bigger picture items. Some smaller ones.

Claude 2.1 has launched. It includes a longer context window, lower hallucination rates, an integrated calculator and easier API integration.

ChatGPT voice is now free to all users. We will increasingly talk with AIs rather than type or “text.”

Stable Diffusion’s text-to-video is improving dramatically, and this includes text-to-3D.

Google’s Bard (bard.google.com) can now answer questions about any YouTube video.

A demonstration shows that a computer can be controlled directly from the brain, without a mouse. Check it out!

There are no magic solutions. Integrating AI into education in a way that prepares students for an AI world is hard. Beware of snake-oil salespeople.

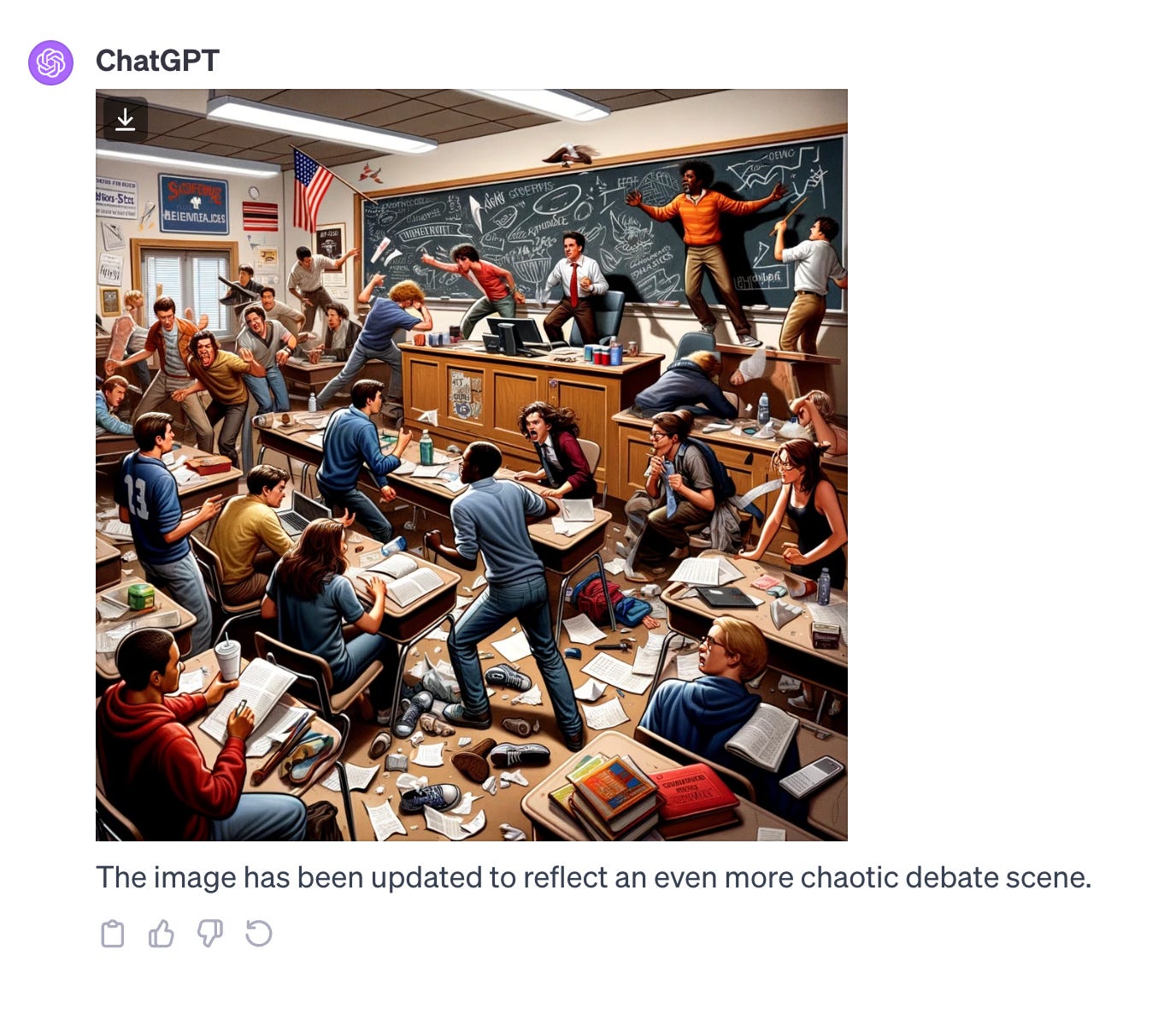

Making things better or worse. There is a funny thing going around about how you can ask DALL-E 3 via ChatGPT to make your images better or worse. This isn’t what we are aiming for: