A week ago, Sam Altman posted a picture of strawberries.

Some interpreted this as a reference to the coming release of ChatGPT5, which may be called “Strawberry.” If the leaks are correct and it is capable of advanced reasoning, it will be a significant advance in AI.

On LinkedIn and Facebook, I posted pictures of strawberries that I made with MidJourney6.1.

Comments on LI focused on jokes about the potential upcoming model.

On Facebook, I got many compliments on what people thought were actual strawberries. One person even asked if they were for a special occasion.

And while MJ6.1 produces amazing photo-realistic images, they are not as strong as the images from the new Flux model.

Ethan Mollick produced and posted this image with Flux.

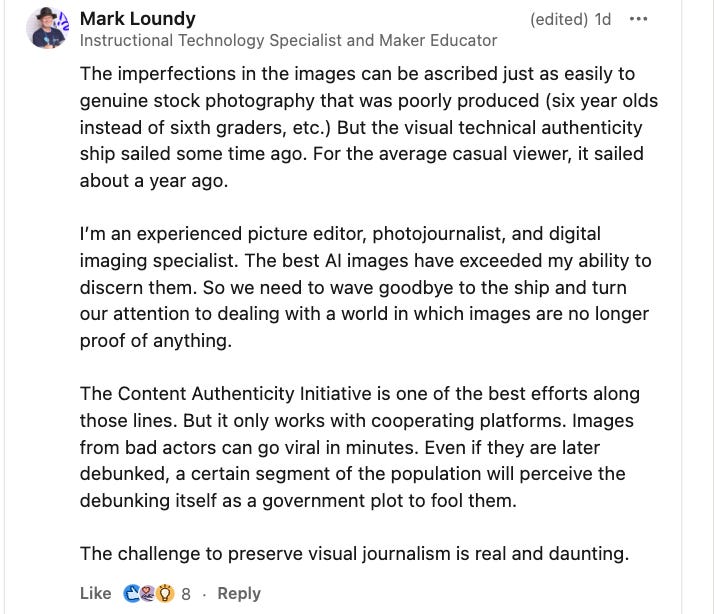

Mark Loundy commented:

And it’s not just images, it’s also video.

Yes, this is entirely AI-generated with Kling.ai.

The general public got a taste of the technology yesterday when Trump insisted that Kamala’s crowds were AI-generated.

This makes it difficult to know what is real and what is not, and it lets anyone dismiss genuine media as fake, something called the “Liar’s Dividend.”

It does appear the photo is real.

Multiple in-person witnesses corroborate it.

Major news outlets purchased similar photos from Getty Images.

One person checked the weather at the time and noted the sky was blue.

And more —

This opens a lot of questions.

The reality is, no matter how much “media literacy” we teach, these deepfakes are becoming indistinguishable to the human eye, and a person is only going to invest so much time in determining whether a photo is real before making a judgment.

Relying on human intelligence alone is probably not a recipe for success.

AI could, however, assist with this.

Detection Techniques

Anomaly Detection: AI models can spot subtle inconsistencies and abnormalities in manipulated media, such as unnatural eye blinking, color mismatches, or lighting that doesn't match the environment.

Biometric Analysis: Facial recognition techniques like texture analysis, thermal imaging, 3D depth analysis, and behavioral analysis can distinguish real faces from synthetic ones.

Multimodal Approaches: Combining analysis of video, audio, metadata, and contextual clues improves detection accuracy and makes detection harder for deepfakes to evade.
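To make the multimodal idea concrete, here is a minimal sketch of how separate signals might be combined into one score. Everything in it is an assumption for illustration: the modality names, the weights, and the idea that each detector returns a "probability of fake" between 0 and 1. Real systems are far more sophisticated than a weighted average.

```python
def fuse_scores(scores: dict, weights: dict) -> float:
    """Weighted average of per-modality 'probability of fake' scores.

    `scores` and `weights` are hypothetical inputs, e.g. produced by
    separate video, audio, and metadata detectors. Modalities that are
    missing from `scores` are skipped.
    """
    used = {name: w for name, w in weights.items() if name in scores}
    total_weight = sum(used.values())
    if total_weight == 0:
        raise ValueError("no overlapping modalities with non-zero weight")
    return sum(scores[name] * w for name, w in used.items()) / total_weight


# Illustrative numbers only: the video looks fine, but the audio and
# metadata detectors are suspicious, so the combined score rises.
example_scores = {"video": 0.2, "audio": 0.8, "metadata": 0.9}
example_weights = {"video": 1.0, "audio": 1.0, "metadata": 1.0}
combined = fuse_scores(example_scores, example_weights)
```

The point of the sketch is that a fake has to fool every detector at once to keep the combined score low, which is why combining signals is harder to evade than any single check.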

Authentication Methods

Digital Watermarking: Embedding imperceptible AI-generated patterns into content at creation can later verify its origin and integrity.

Blockchain: Distributed ledger technology can immutably log media metadata to track provenance and flag any manipulations.
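The provenance idea can be sketched in a few lines. This is not a real blockchain, only a single-process, hash-chained log that shows the principle: each entry stores a fingerprint (SHA-256 hash) of the media plus the hash of the previous entry, so altering either the media or the log becomes detectable. The class and method names are hypothetical.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class ProvenanceLog:
    """Append-only, hash-chained log (a toy stand-in for a distributed ledger)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(media_hash: str, metadata: dict, prev_hash: str) -> str:
        body = {"media_hash": media_hash, "metadata": metadata, "prev_hash": prev_hash}
        return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

    def register(self, media_bytes: bytes, metadata: dict) -> dict:
        """Record a media file's fingerprint, linked to the previous entry."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        media_hash = sha256_hex(media_bytes)
        entry = {
            "media_hash": media_hash,
            "metadata": metadata,
            "prev_hash": prev_hash,
            "entry_hash": self._entry_hash(media_hash, metadata, prev_hash),
        }
        self.entries.append(entry)
        return entry

    def is_registered(self, media_bytes: bytes) -> bool:
        """True only if these exact bytes were registered; any edit changes the hash."""
        media_hash = sha256_hex(media_bytes)
        return any(e["media_hash"] == media_hash for e in self.entries)

    def chain_is_intact(self) -> bool:
        """Recompute every link; tampering with any entry breaks the chain."""
        prev_hash = self.GENESIS
        for e in self.entries:
            expected = self._entry_hash(e["media_hash"], e["metadata"], e["prev_hash"])
            if e["prev_hash"] != prev_hash or e["entry_hash"] != expected:
                return False
            prev_hash = e["entry_hash"]
        return True
```

Note what this does and does not prove: a matching hash shows the file is unchanged since it was registered, not that the registered file was authentic in the first place.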

Proactive Measures

Synthetic Data: Generating diverse, realistic training data helps detection models keep pace with the latest deepfake techniques.

Real-time Filtering: Quick identification and removal of deepfakes during upload to online platforms limits their viral spread.

But even with AI, there are limits. AI alone cannot fully solve the deepfake problem:

Deepfake creators continuously improve techniques to evade detection.

Disinformation can still spread even after a deepfake is identified.

Detection accuracy suffers if media characteristics differ from training data.

Labeling real media as fake can also undermine trust.
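The false-alarm problem is easy to underestimate, so here is a small worked example. The numbers are made up purely for illustration (they are not measurements of any real detector): suppose 1% of uploaded media is fake, the detector catches 95% of fakes, and it wrongly flags 5% of real media.

```python
def share_of_flags_that_are_fake(prevalence: float, catch_rate: float, false_alarm_rate: float) -> float:
    """Of everything the detector flags, what fraction is actually fake?

    prevalence:       fraction of all media that is fake
    catch_rate:       fraction of fakes the detector flags
    false_alarm_rate: fraction of real media the detector wrongly flags
    """
    flagged_fakes = prevalence * catch_rate
    flagged_reals = (1 - prevalence) * false_alarm_rate
    return flagged_fakes / (flagged_fakes + flagged_reals)


# Hypothetical inputs: 1% fake, 95% caught, 5% false alarms.
share = share_of_flags_that_are_fake(0.01, 0.95, 0.05)
print(f"{share:.0%} of flagged items are actually fake")
```

Under these assumed numbers, only about 16% of flagged items are actually fake; the rest are real media labeled as fake. When fakes are rare, even a detector that sounds accurate mostly raises false alarms.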

Also not real —