The Debate Over Artificial General Intelligence, Artificial Super Intelligence and What it Means for Education

The developments along the way will disrupt education more and more every day and there is no alternative but to help our students prepare to live and work in the AI world.

Updated June 10th

Introduction

Artificial Narrow Intelligence (ANI)

Artificial General Intelligence (AGI)

Artificial Super Intelligence (ASI)

You’ve heard these terms and acronyms tossed around a lot, but what do they mean? How will we know when each has been achieved? What is the relevance of the debate? And what does it mean for education?

Artificial General Intelligence (AGI)

What is AGI, and when will we get there?

Even though it's in the middle, it’s easiest to start here.

AGI is difficult to define, but it basically refers to the idea that computers will be able to demonstrate they have achieved the intelligence of the average human being.

This is obviously a bit vague and difficult to conceptualize, so let me offer a couple of examples.

Sam Altman, the CEO of OpenAI (the developer of ChatGPT), defines AGI this way:

AGI is basically the equivalent of a median human that you could hire as a coworker, and then they could do anything that you'd be happy with a remote coworker doing just behind a computer, which includes, you know, learning how to go be a doctor or learning how to go be a very competent coder. There's a lot of stuff that the median human is capable of getting good at, and I think one of the skills of AGI is not any particular milestone but a meta skill of learning to figure things out and that it can go decide to get good at whatever you need (Altman).

Altman hasn’t said exactly when he thinks we’ll get to AGI, but he favors a “slow take-off” on a shorter timeframe (five years) (Fridman interview). You can watch the interview, but he seems to be saying that we should get there through slow iterations over 1–5 years, and that it could potentially take 20 years. Remember that OpenAI had ChatGPT4 by at least last August but didn’t release it until March, because Altman believes in the slow, iterative release of capabilities to give society time to adapt.

On May 3, Sam Altman defined the mission of OpenAI as “trying to develop and deploy beneficial and safe AGI for all of Humanity” (Altman).

Ray Kurzweil, a futurist and AI investor and developer, argues that AI has to be able to pass the Turing test: can a machine, when questioned by a skilled human, perhaps over a period of six hours, demonstrate that it is intelligent? He thinks this will happen by 2029 and notes that the timeframes most people predict keep getting shorter (Kurzweil).

Geoff Hinton, the “Godfather of Neural Networks,” says he “cannot rule out 5 years [until we achieve AGI]… Until quite recently, I thought it was going to be 20–50 years” (Hinton).

Yann LeCun, the Chief AI Scientist at Meta, a professor at NYU, a winner of the Turing Award for his work in AI, and a former advisee of Hinton’s, doesn’t even believe in the concept of AGI, but that doesn’t mean he thinks computers won’t become intelligent.

LeCun defines intelligence as “being able to perceive a situation, then plan a response, and then act in ways to achieve a goal, so being able to pursue a situation and plan a sequence of actions” (LeCun). He says that once machines can do that, they will have emotions. He’s not sure when that will happen, but he thinks it will be achieved, perhaps in 20 years (LeCun).

LeCun does not believe that LLMs can achieve AGI, arguing that AI needs to be able to internalize the entire human experience before it can do that (LeCun), and he even describes plug-ins as “duct tape.” But, again, he does argue that we will reach AGI and that we might do it using the “world models” he’s developing: “There's no question in my mind that we'll have machines at least as intelligent as humans” (LeCun). [See his most recent lecture (5/24/23) and slides.]

Similarly, Yejin Choi, a computer scientist who recently gave a popular TED Talk, argues that ChatGPT4 and similar LLMs lack common sense and an ability to engage in “chain reasoning.” This does not mean, however, that she believes AGI is unachievable, only that it cannot be achieved using LLMs.

Other thoughts –

*Ben Goertzel, a leading AI guru, mathematician, cognitive scientist, and famed robot creator: “We were years rather than decades from getting there” (Yahoo News).

*In a challenge to LeCun’s claim that auto-regressive LLMs can never achieve AGI:

To surmount these challenges, we introduce a new framework for language model inference, Tree of Thoughts (ToT), which generalizes over the popular Chain of Thought approach to prompting language models, and enables exploration over coherent units of text (thoughts) that serve as intermediate steps toward problem solving. ToT allows LMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action, as well as looking ahead or backtracking when necessary to make global choices. Our experiments show that ToT significantly enhances language models' problem-solving abilities on three novel tasks requiring non-trivial planning or search: Game of 24, Creative Writing, and Mini Crosswords. For instance, in Game of 24, while GPT-4 with chain-of-thought prompting only solved 4% of tasks, our method achieved a success rate of 74% (Yao et al., May 17).
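For readers unfamiliar with Game of 24, the goal is to combine four numbers with +, -, * and / to reach 24. The sketch below is a plain exhaustive search written for this article, not the Tree of Thoughts method itself (which has a language model propose and evaluate each step). It is only meant to show what "considering multiple paths" and "backtracking" look like on this task. The function names and the sample numbers are assumptions chosen for illustration.

```python
from fractions import Fraction


def solve_24(numbers, target=24):
    """Depth-first search with backtracking; returns an expression string or None."""
    # Each item pairs an exact value with the expression that produced it.
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    if len(items) == 1:
        value, expression = items[0]
        return expression if value == target else None
    # Try every ordered pair, combine it, and recurse on the shorter list.
    for i in range(len(items)):
        for j in range(len(items)):
            if i == j:
                continue
            (a, expr_a), (b, expr_b) = items[i], items[j]
            rest = [items[k] for k in range(len(items)) if k not in (i, j)]
            candidates = [
                (a + b, f"({expr_a} + {expr_b})"),
                (a - b, f"({expr_a} - {expr_b})"),
                (a * b, f"({expr_a} * {expr_b})"),
            ]
            if b != 0:
                candidates.append((a / b, f"({expr_a} / {expr_b})"))
            for candidate in candidates:
                result = _search(rest + [candidate], target)
                if result is not None:
                    return result
            # Reaching this point means the pair led nowhere, so we backtrack.
    return None


# Hypothetical sample puzzle, chosen only for illustration.
solution = solve_24([4, 9, 10, 13])
print(solution)
```

A search like this checks every path mechanically. The claim in the paper is that a language model can be guided to do something similar by generating and judging its own intermediate "thoughts."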

Former Google X executive Mo Gawdat says we will achieve it by 2025 or 2026 (Gawdat).

Of course, between now and the time AGI is achieved, there will be enormous advances. As Sam Altman recently noted, in a couple of years, people will basically think of ChatGPT4 as a toy.

Basic reasoning skills?

As you hopefully know, large language models (LLMs) such as ChatGPT are all built on one basic idea: “predicting the next word.” Recently, however, many experts have observed what they consider to be basic reasoning abilities.
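To make “predicting the next word” concrete, here is a toy sketch that counts which word most often follows another in a tiny text. It is only an illustration: real LLMs use large neural networks trained on vast amounts of text rather than simple word counts, and the corpus and function names below are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))
print(predict_next_word(model, "sat"))
```

A model like this can only repeat patterns it has counted, which is why the reasoning-like behavior that experts report in much larger models has drawn so much attention.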

Sebastian Bubeck, a Microsoft researcher who worked on the ChatGPT4 integration with Bing, said, “I think it is intelligent.” Bubeck argues in a lecture and an article, “Sparks of AGI,” that ChatGPT4 can –

Now, Bubeck isn’t arguing that ChatGPT4 has reached artificial GENERAL intelligence, but that it has sparks of INTELLIGENCE, including some simple reasoning abilities. Others share similar perspectives.

Geoffrey Hinton uses the following example:

"Well, if you look at GPT-4, it can already do simple reasoning. I mean, reasoning is the area where we’re still better. But I was impressed the other day with GPT-4 doing a piece of common-sense reasoning I didn’t think it would be able to do. I asked it, ‘I want all the rooms in my house to be white. But at present, there are some white rooms, some blue rooms, and some yellow rooms. And yellow paint fades to white within a year. What can I do if I want them all to be white in two years?’ It said, ‘You should paint all the blue rooms yellow.’ That’s not the natural solution, but it works. That’s pretty impressive common-sense reasoning that’s been very hard to do using symbolic AI because you have to understand what ‘fades’ means..."

This topic is covered in more detail in this article in Scientific American:

At a conference at New York University in March, philosopher Raphaël Millière of Columbia University offered yet another jaw-dropping example of what LLMs can do. The models had already demonstrated the ability to write computer code, which is impressive but not too surprising because there is so much code out there on the Internet to mimic. Millière went a step further and showed that GPT can execute code, too, however. The philosopher typed in a program to calculate the 83rd number in the Fibonacci sequence. “It’s multistep reasoning to a very high degree,” he says. And the bot nailed it. When Millière asked directly for the 83rd Fibonacci number, however, GPT got it wrong; this suggests the system wasn’t just parroting the Internet. Rather, it was performing its own calculations to reach the correct answer.
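The article does not reproduce the program Millière typed in, so the following is only a guess at the kind of short program involved. It assumes the common convention that the first and second Fibonacci numbers are both 1. To "execute" it, a model has to track the two changing values correctly through 82 loop steps.

```python
def fibonacci(n):
    """Return the n-th Fibonacci number, counting from fibonacci(1) == 1."""
    previous, current = 0, 1
    for _ in range(n - 1):
        # Slide the window forward: the new value is the sum of the last two.
        previous, current = current, previous + current
    return current


print(fibonacci(83))
```

Running this on a computer is trivial. What makes the example notable is that a language model, which has no built-in interpreter, stepped through the same loop in text and arrived at the right number.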

Artificial Narrow Intelligence (ANI)

Everything that comes before AGI is called artificial narrow intelligence (ANI) and is, generally speaking, the current level of intelligence machines possess. ANI includes everything from AI used to return search engine results to voice and speech recognition to predictions for sports, politics, and investment.

And, yes, it includes all of the generative AI technologies that have exploded onto the scene in the last 6 months, the most advanced of which is GPT4 (which arguably includes some reasoning ability). Any advances in current AI technologies that occur prior to the development of AGI will be considered ANI.

Now that is a lot to group under any concept of AI—everything from speech recognition to something like this that ChatGPT4 did in seconds.

If it seems strange to group “very” narrow uses such as speech recognition with the example above, you can embrace Yuval Noah Harari’s idea that what arrived before the LLMs is “primitive AI.” In other words, despite any limitations, there was a dramatic leap from primitive AI to LLMs, and there will be more dramatic improvements before we get to AGI. These advances will have their own impacts.

Artificial Super Intelligence (ASI)

May 22 Update — OpenAI: “Given the picture as we see it now, it’s conceivable that within the next ten years, AI systems will exceed expert skill level in most domains, and carry out as much productive activity as one of today’s largest corporations.”

ASI refers to the idea that machines will become smarter than us. As with AGI, it’s not a question of if, but when. [Note on terminology: I’ve also seen AGI defined as including what I’m referring to as ASI, but that’s because once we have AGI we’ll already have ASI in some areas, and since computers will be fully capable of self-programming at that point, the flip to ASI will be very fast.]

Ray Kurzweil, cited above: “By the time we get to 2045, we'll be able to multiply our intelligence many millions of times. And it's just really hard to imagine what that will be like.” (Kurzweil).

How realistic is Kurzweil’s prediction?

I think once you have a human-level AGI, you’re some small integer number of years from a radically superhuman AGI, because that human-level AGI software can rewrite its own code over and over. It can design new hardware and pay people to build a new factory for it and whatnot. But for human-level (by which we mean AI that's at least at human-level on every major capability), I think Ray's prognostication of 2029 is not looking stupid right now, it's looking reasonable. Of course, there's a confidence interval around it. Could it be three or four years sooner or three or four years later? Absolutely. We can't predict exactly what will happen in the world. There could be more pandemics, there could be World War Three, there could be a lot of things that happen. In terms of my own AGI projects, I could see us getting there three years from now. (Ben Goertzel, AI Global Leader)

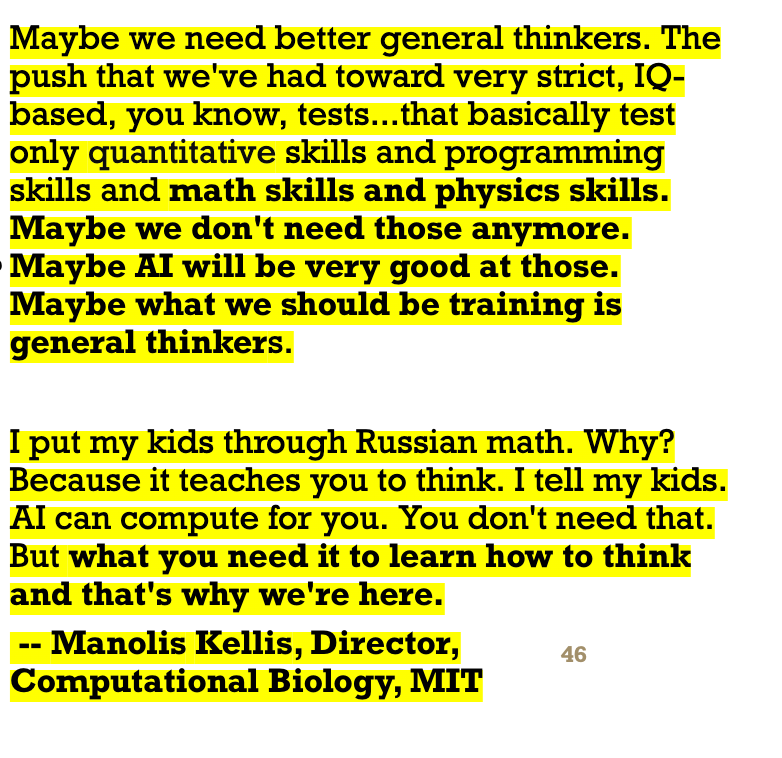

Manolis Kellis, Head of the Computational Biology Group, MIT: “Maybe we shouldn't think of AI as our tools; maybe we shouldn't think of AI as our assistant. Maybe we should think of AI as our children. In the same way, you are responsible for training those children, but they are independent human beings, and at some point they will surpass you. Maybe the next round of evolution on earth is self-replicating AI.” (Kellis)

Even those such as LeCun who believe we are far from AGI agree that we will reach not only AGI (though, remember, he defines it more precisely: the ability to perceive a situation, plan a response, and act) but also ASI. LeCun says we will all have AI assistants who are much smarter than us that we will direct to complete tasks.

Wharton professor Ethan Mollick says we already have our own “AI interns”: these are “AIs” that can help us with rough drafts but can’t complete the final product better than we can.

In the future, we’ll have the equivalent of Geoff Hinton and Yann LeCun as our AI assistants. And so will the students in your school.

Mo Gawdat says it’s inevitable that we will soon have systems 10X as smart as Einstein (e.g., an IQ of 1600) and, by 2045, systems a billion times smarter than us.

General Implications of the Continuum

So, the important point here is that there is a continuum, and it has several implications:

There will be many, many more developments in AI over the next 1–20 years before we hit AGI, and these will impact schools (we may soon view ChatGPT4 as “primitive”).

Many disagree on what is included in AGI and will debate exactly when it will arrive; some of that disagreement is over how they define it.

AGI will probably arrive some time in the next 3–20 years.

I think all leaders in AI believe that we will eventually have AGI or something similar (LeCun).

All (I think) believe that we will eventually have ASI.

It’s a question of when, not if. And the “when” is not that far away.

Implications for Education

*There will soon be machines in society and schools that are as smart as, if not smarter than, the other people in the building. Just think about that for a minute and let it sink in. Machines will be able to teach students content. There will be many things human teachers will still be better at, but these machines are going to be really good at teaching content.

*Machines will be able to do many of the things that students are learning in school—some of them already are. It’s not clear that some of what students are being taught will have any value. What will you say when a student tells you they don’t need to learn your class content because they will have their “AI assistants” (LeCun) available with that knowledge in the future?

*The more I read about this, the more I hear the leaders in this field talk about the importance of soft skills: developing relationships and social connections; communicating well; thinking critically (Emad Mostaque). These are the skills that are uniquely human and are developed in a part of the brain that AI models won’t be able to replicate for a very considerable amount of time. They are also the skills that good educators excel at, and they are the skills that schools are still going to need from human educators.

*Will we teach our students to compete with machines or use machines to augment their own capabilities? Stanford economist Erik Brynjolfsson, who directs the Digital Economy Lab at the Stanford Institute for Human-Centered AI, argues that we can either use AI to automate ourselves to irrelevance or augment our capabilities. LeCun frequently cites Brynjolfsson to argue that this means AI will not take jobs but rather enhance humans and that “AIs” will work for us, but as Brynjolfsson points out in his paper, this requires proactive efforts, including proper education. There is obviously a chance that if we don’t do what we need to augment ourselves, we will be automated into irrelevancy. It seems to me that changes in the educational system will be critical to boosting human augmentation. Both ignoring AI and continuing current training, which is, at best, designed to help us compete with machines, will lead to a world of human irrelevancy. “We need to give the tools to create new jobs that can replace some of these old jobs being done” (Mostaque). Where that infrastructure does not exist, there will be widespread unemployment and poverty (Harari).

*How will the classroom change when every student has an AI tutor on their phone that is with them 24/7?

*Intelligent beings do bad things, and some intelligent people use other intelligent people to do bad things. These machines will do bad things in your schools. Kids will use them to do bad things.

*If kids leave school without knowing how to work and interact with intelligent machines, they’ll struggle to both get a job and be a part of society. If schools don’t prepare them for this world, schools will lose their relevance. Many argue they’ve been losing their relevance for years.

*Most experts who claim to know about AI have experience with primitive AI. That’s a simple reality. LLMs are only approximately 2 years old and have only received widespread use in the last 6 months. When hiring consultants who know about AI, you might want to unpack this a bit.

A Few Concluding Thoughts from Others

“I think the nature of school will change dramatically. I think it's still worth it. But you know, I would encourage schools to embrace this technology and just expect more that you can handwrite your essays like at Eton, because they're like, ‘We can't do essays anymore.’ I said, ‘Oh, you can just embrace it and say, like, let's use it to create and explore what the kids want and assume that every child will have their own AI in a few years.’” (Emad Mostaque).

“Nobody knows how the world will look in 2050 except that it will be very different from today. So the most important things to emphasize in education are things like intelligence, emotional intelligence, and mental stability. Because the one thing that they will need for sure is the ability to reinvent themselves repeatedly throughout their lives for the first time in history. We don't fully know what particular skills to teach young people because we just don't know in what kind of world they will be living. We do know they will have to reinvent themselves, especially if you think about something like the job market. Maybe the greatest problem they will face will be psychological. Because at least beyond a certain age, it's very, very difficult for people to reinvent themselves.” (Yuval Noah Harari).

“You start school and you're creative, and you're told you're not allowed to be creative until you're successful and you can be creative, because schools are like petri dishes, social status games, and childcare.” (Emad Mostaque).

Additional References

Syme, P. (2023, May 17). ChatGPT's clever way of balancing 9 eggs with other objects convinced some Microsoft researchers that AI is becoming more like humans. Business Insider. https://www.businessinsider.com/chatgpt-open-ai-balancing-task-convinced-microsoft-agi-closer-2023-5

Are we prepared for AGI?

Reinforcement Learning Still a Viable Path to AGI.
