The Continued Advance of AI, Robotics, and Brain-Computer-Interfaces: Preparing Students for a New World; An Update on our Report

TLDR

*Not engaging AI removes humans from the AI loop; we need to invest more in humans.

*Emerging technologies are triggering a societal transformation, and education must adapt. There are many AI-in-education apps out there, but if we don’t prepare students for this new world, using those apps will not accomplish anything.

*Professional development is essential and must include more than “how to” training

*AI guidelines from California, North Carolina, Oregon, Virginia, West Virginia, Australia, the UK, and UNESCO are reviewed.

*Advances in robots, brain-computer-interfaces, and merging human-computer abilities are discussed

*Taylor Swift and the expanded need for AI literacy

*Some sectors integrate AI faster than others.

*Schools integrating into LLM wrappers (SchoolAI, ChatGPT4, etc.)

Download our free report

Schools that do not engage AI are deciding not to keep humans in the loop (HITL), and the result will be that the lowest-SES students who depend on them are left out of the AI World.

This is already happening.

— Me

What is often identified as “professional learning” regarding technology for educators can often be little more than training on basic functions such as entering rosters, generating reports, or assigning prefabricated tasks.

The human, in these instances, is taken out of the loop.

— US Department of Education, Office of Technology Policy

In preparing this update, I’ve thought about a few things (and, well, COVID gave me more time to think). In this post, I’ll cover a few of those thoughts and then list the key updates.

(1) We need to invest more in humans. As we noted in the first edition of our report, as a society, we can’t just keep investing money in AI, multi-trillion dollar AI companies that have a higher economic valuation than many countries, and advancing AI weapons systems that kill humans; we also have to invest money into developing human life, especially if we want humans to remain in control and work with AI systems, something that is emphasized in all the AI guidance reports.

(2) Educators know so much about how to teach, care for, and develop students; they should not cede that role to the private sector. Schools that do not engage AI are leaving themselves (and their humans) out of the AI loop, resulting in the lowest SES students who depend on them being left out of the AI World as well. This is already happening.

According to a recent survey, as I predicted a year ago, students who are the strongest academically and who also tend to have higher SES are the ones who are most heavily using these tools, further widening the gap between them and their peers.

Author George Estreich noted that “Civilization-altering technologies should not be guided by billionaires who create and profit from them; they should be guided by us.” We can only guide these technologies if we engage them.

(3) Educators need to first think about how AI is going to fundamentally change the world, and then they need to think about what changes are needed in education to prepare students for that world. What do we need to do to prepare students for a world where machines have intelligence that is competitive with human intelligence and can complete all or nearly all of our work tasks? What do we need to do to prepare students for a world where they will all own and run their own AI models on their phones, glasses, and other wearables?

(4) This is hard, but it can be done. Most faculty at every level of education now realize they have been swindled by AI writing detection companies, that these tools cannot be relied on to police student AI use, and that AI can do many current school assignments.

Yesterday I showed how easy it is to complete a common college-level assignment in minutes with zero risk of AI detection. The reality is that a significant number of school assignments have to be changed. Kelly McDowell noted that “One day in the not so distant future, students will be graded on their ability to utilize AI creatively and effectively to complete assignments - rather than their ability to complete assignments without it. Powerful critical thinking skills can be taught via AI, but the nature of student assignments has to change.”

But Andy Firr notes the reality of the honest struggle.

‘Change the assessment and assignment’.

‘If students are using AI in an unethical way your assignment is rubbish do something about it’.

‘Make it authentic’.

All the above comments appear daily on my feed. Stop. 🛑

Give yourself a break, this is difficult, very difficult. Personalised GPT’s / Premium service features etc mean all the above when working at scale with large numbers of students is hugely challenging and bad actors will continue to circumnavigate your assessment changes.

If you feel overwhelmed at what to do and how to move forward, congratulations. You are probably in the 99.9% of people in academia, and that’s ok. We need help with this, not constant criticism from AI ‘experts’.

And this is not the only challenge educators face. Beyond day-to-day operations, only 15% of K–12 students report positive mental health, a massive drop since 2013, and many do not see their school work and tests as having any meaningful value.

Over the weekend, I wrote draft AI guidance for a school district, and at the end I listed all the actions needed to implement it. The list was long and required considerable work, but it represents what is needed if we are going to prepare students for the future.

(4) Many (but fortunately not all) educators are missing the significance of the moment. A big part of the reason that educators are in the spot just described is that educational administrators failed to see the significance of the moment, despite repeated warnings about how quickly the technology was advancing and how AI-writing detectors did not work. Little was done to prepare their faculty for what this year would bring.

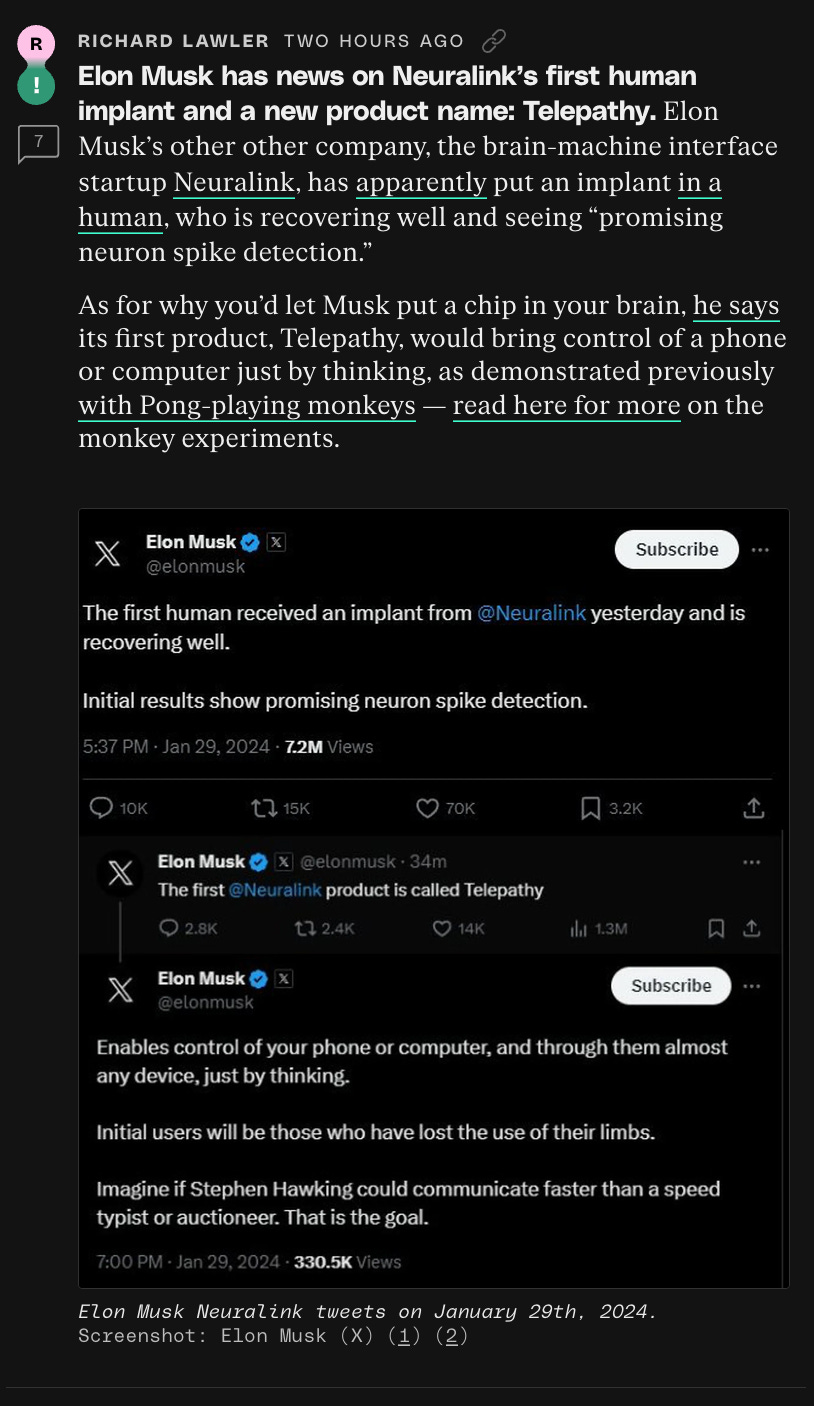

This problem of writing assessment is magnified not only by a world where machines will compete with human intelligence but also by a world where humans are able to communicate with machines with their minds alone.

On January 29th, the first device was inserted into a human.

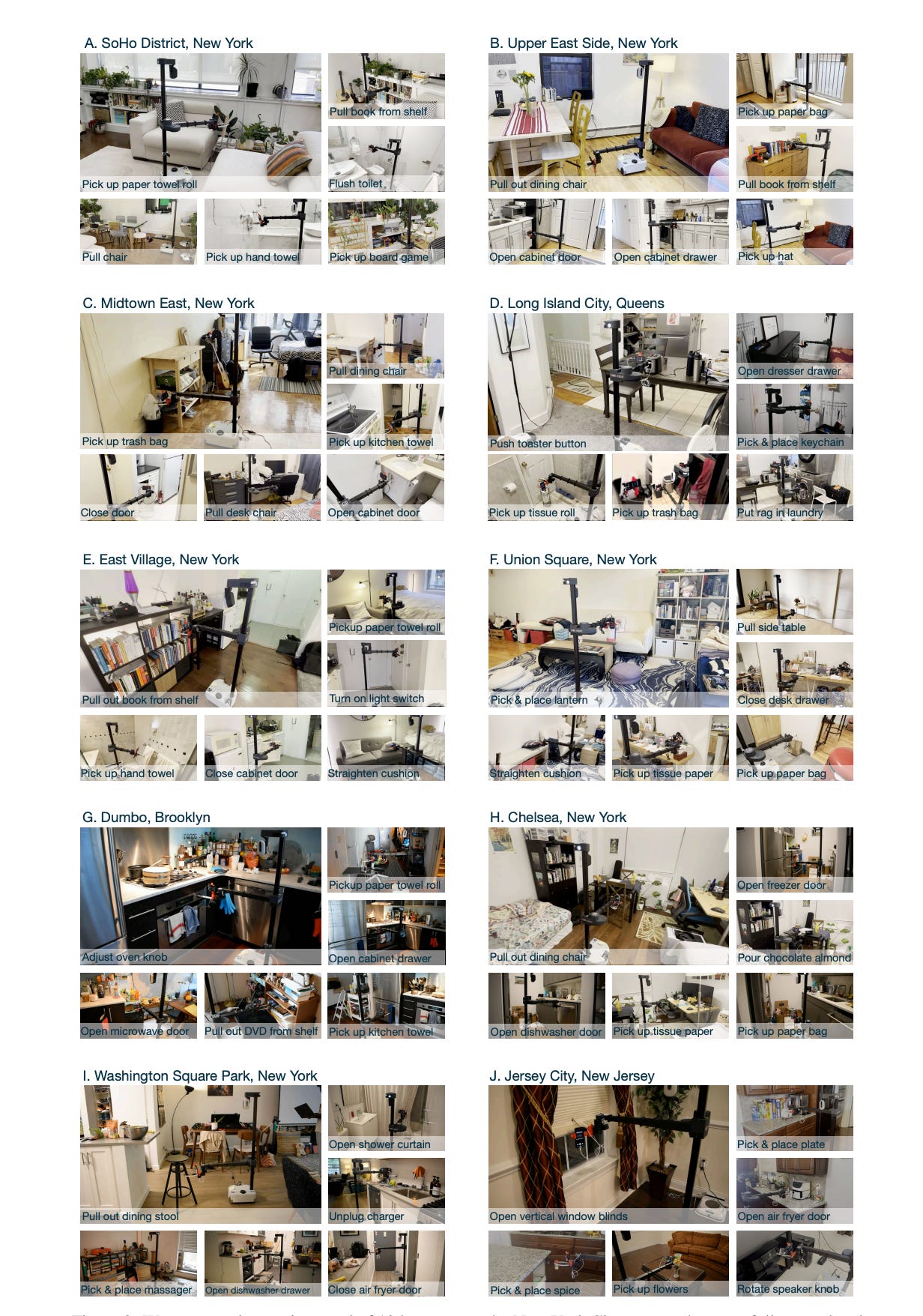

And robots can learn on their own and maneuver in New York City apartments.

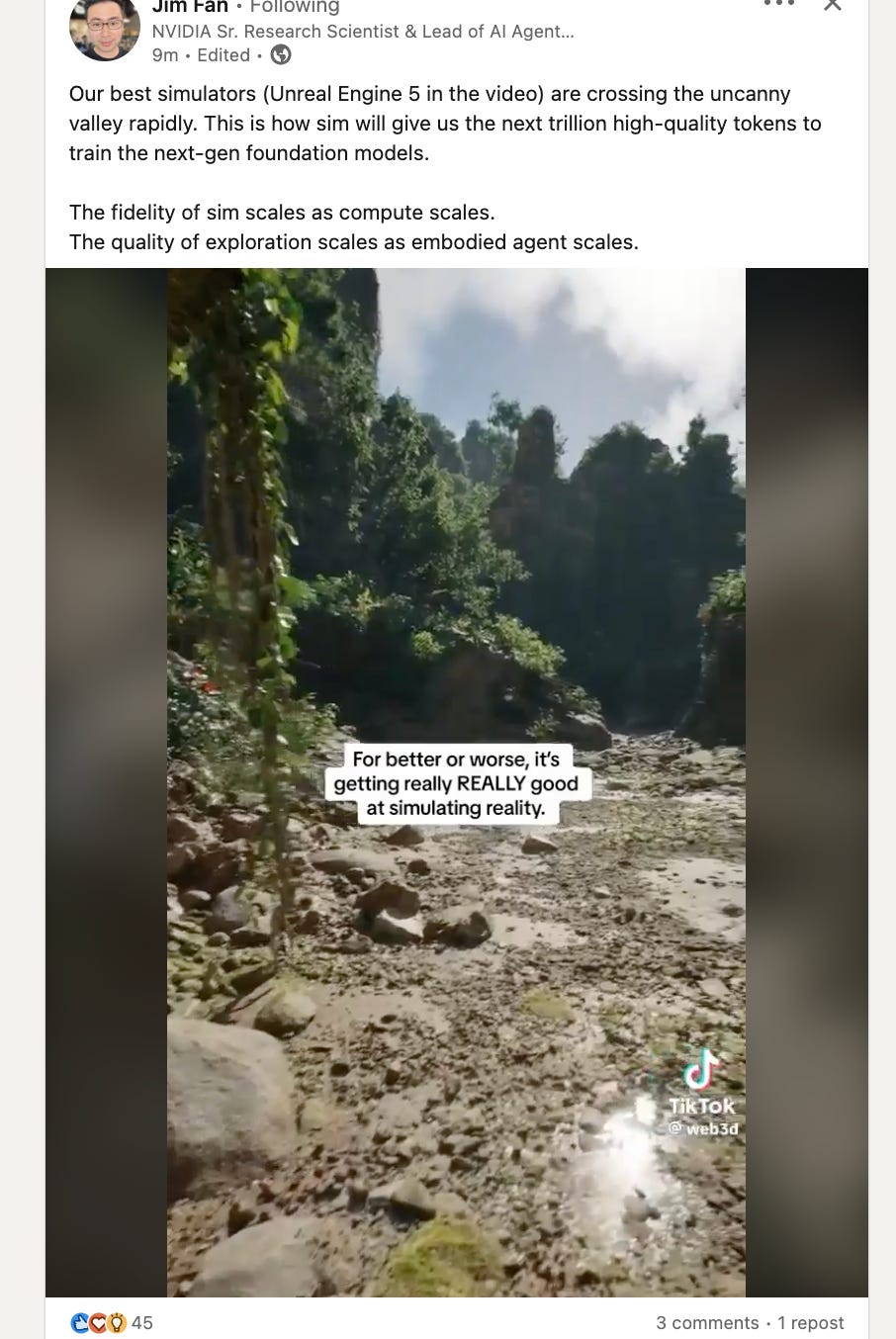

And we are getting closer to “robots on wheels.”

This is in no way to suggest that we will all have brain-computer-interfaces next week, that robots will soon be living with all of us, or that driverless Teslas will soon be zipping all over. But these changes are coming, and they’ll be an integral part of our students’ lives.

Contrast these developments with the recently released National Educational Technology Plan. The plan offers incredibly valuable points about equality and developing graduate, administrative, and teacher profiles, but it only mentions artificial intelligence six times, four of which are in bibliographic references. It references robotics five times, three of which are in bibliographic references. And given that it took eight years to release this new plan, we may very well be living in a world of AGI before the next one is released, and it may or may not reference AI.

We’ve had two major USDOE technology and AI reports released with barely a mention of the capacities of current technology.

This is not another piece of “edtech” that can be grouped with all of the others. Remember, this is a technology that can drive instruction and complete student assignments. It needs a lot more attention, and the May DOE report barely mentions the powerful AI technologies that are driving this revolution (I heard it was completed before ChatGPT was released, but, regardless, it makes only a couple of references to interactive AI technologies).

In its previous report, look at what the USDOE said the technology “may” be able to do. We know that it can already do these things and more.

(5) This is not just about technology; systemic curriculum change is needed. Imagine if the curriculum of today’s educational system focused on teaching students how to use AI to grow crops because most people used to work in agriculture. Just as education fundamentally changed in response to the end of the agricultural era, it is going to need to fundamentally change to prepare students for the AI World.

Last week, a state superintendent emailed me to say they already have an effort underway to revise ELA, math, and science standards so that they can infuse AI concepts and media and digital literacy expectations into the curriculum. This represents very advanced thinking, but it is ultimately what all state education boards will need to do in order for their public educational institutions to stay relevant. Learning economic concepts without understanding how AI and related technologies will change the economy is certainly strange.

And it’s about 10Xing skills such as collaboration, community, critical thinking, and creativity. These are the number one skills employers are looking for, the skills they think graduates currently lack, and the skills everyone thinks are absolutely essential in an AI world.

(6) AI professional development and literacy programs are essential to make sure AI is used in the best possible ways, is used in ways that are compliant with laws and regulations, and helps everyone involved with an educational institution and the wider community understand the AI world and how much AI is going to change the world. Many states and districts are issuing AI guidance now, and that guidance can only be acted on and implemented if faculty and staff are AI-literate.

(7) The world is going to change a lot; there are no stable ideas or jobs. People are going to need to reinvent themselves, and AI tools enable people to create their own businesses for a very limited amount of money. Recursive self-improvement in AI refers to a process where an artificial intelligence system is capable of improving its own algorithms and performance without human intervention. Given how quickly the world will change, we need to develop students who are capable of their own recursive self-improvement as they grow. This should become a central goal of any educational system focused on prioritizing human development, and it is a core idea of deep learning approaches in education.

(8) Emerging AI systems are more powerful than most realize. There is still chatter in educational forums about how AI tools can’t do math, generate accurate bibliographies, write like people, etc. This overlooks both the many recent advances and the development of specific tools for these purposes (Consensus in ChatGPT4, new Google math programs, etc.).

The sources in this search are all 100% accurate.

(9) Some sectors are integrating faster than others. AI is advancing much faster than society is integrating it. Financial services and finance-related consultancies are probably seeing the most integration. The military expects to have all of AI integrated in 18-24 months. Healthcare and insurance are seeing more integrations. Integration in education is obviously slower, but it is still one of the two sectors expected to be most impacted, especially as it is challenged by providers offering AI-supported instruction outside the current system.

Key Report Updates

*A thorough discussion of the California, North Carolina, Oregon, Virginia, Washington, West Virginia, Australia, UK, and UNESCO AI guidance documents is included

*Significant advances in video are reviewed

*Developments in multimodal AI

*Developments in robots are explained

*Developments in agents are explained

*Internet of Bodies (IoB) technologies and how humans will merge with machines is explained

*The appendix on BCI is substantially updated. I’ll move it to a new chapter as I have time.

*Deep Fakes — Taylor Swift and Joe Biden

*Inclusion of ideas from the new DOE Technology plan