Preparing Students for Artificial Transformational Intelligence (Brynjolfsson)

ATI opens huge challenges and many questions for educators

Introduction

Stanford economist Erik Brynjolfsson coined the term Artificial Transformational Intelligence (ATI) to focus on the only question that matters:

How will AI transform the economy—and, in doing so, reshape the world?

It doesn’t matter whether AI achieves this through an elegant replication of human intelligence or through sheer brute-force computing power that some will argue is not “actual intelligence.”

And it doesn’t matter if it’s achieved solely or largely through scaling, neurosymbolic approaches (Marcus), a greater application of reinforcement learning (Sutton), world models (LeCun, Li), or even a combination of all of these approaches (Goertzel, most researchers) plus one or two major new breakthroughs (Hassabis). It doesn’t matter if we get to effective robotics through RL and hand sensors or through robots that are embodied differently than humans (Brooks). All that matters is that AI and robotics are rapidly accelerating. The impacts on productivity, jobs, and global economic, social, and political systems are the same either way.

ATI shifts the debate from what AI is to what AI does: how it drives economic change and rewrites the future. What matters is that scaling even existing technologies into the world, together with continued rapid advances in AI and robotics, is changing, and will radically change, the world.

GDPval

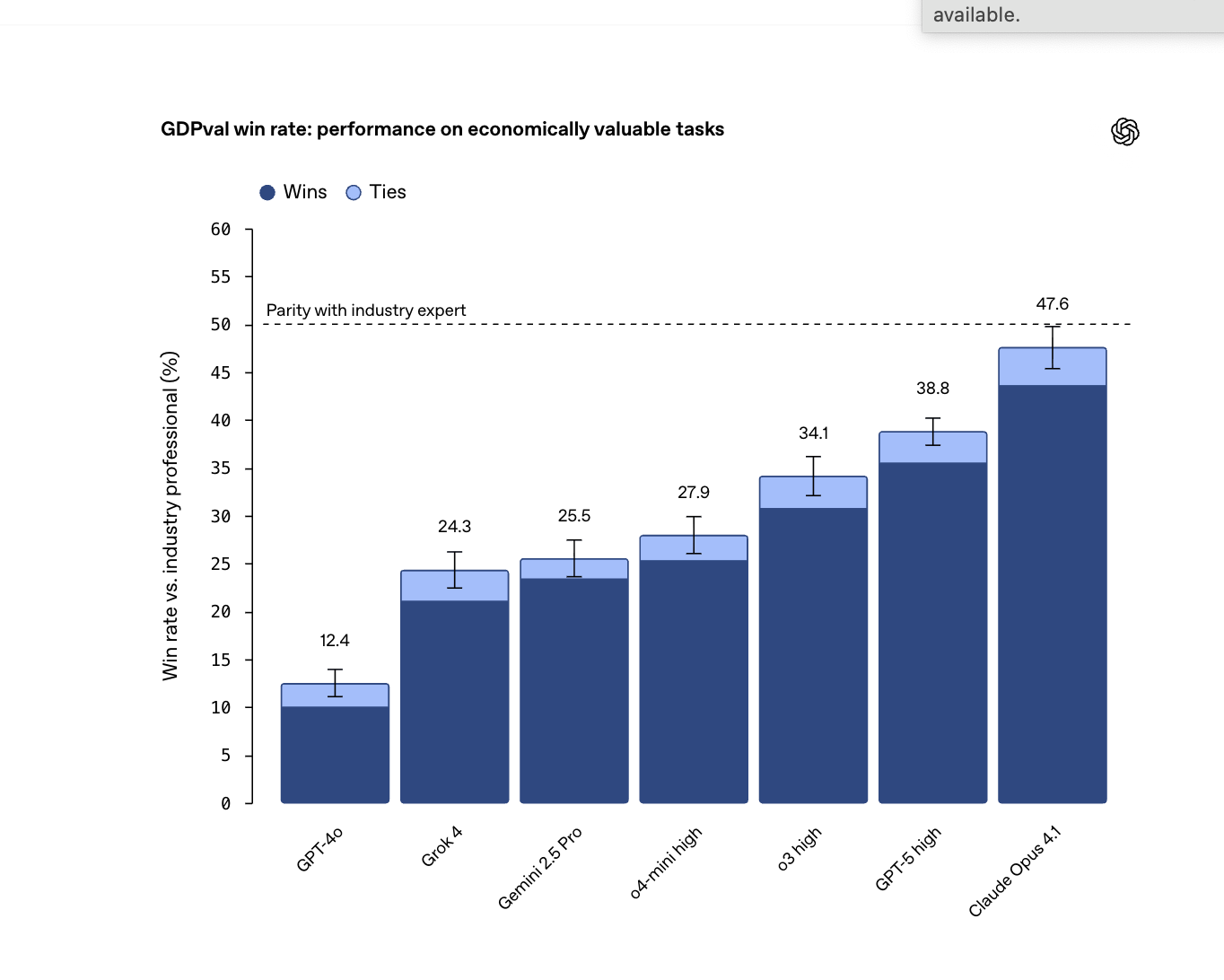

On Friday, OpenAI released GDPval, a new evaluation “designed to help us track how well our models and others perform on economically valuable, real-world tasks.”

OpenAI’s GDPval evaluation measures model performance on tasks drawn from 44 occupations across major industries, asking AI systems to produce deliverables like legal briefs, engineering diagrams, slide decks, and data analyses. In blind comparisons against human experts, the latest models already “tie” or “win” a substantial fraction of tasks, and can perform them roughly 100× faster and 100× cheaper (though that doesn’t yet include the human oversight or integration effort).

Importantly, GDPval is about tasks, not whole jobs. But that distinction doesn’t protect employment: if AI can already perform all the component tasks of a job, then the job itself may be largely automatable. Even when AI cannot yet do everything, the result is fewer humans needed for the residual tasks (e.g. the judgment, the oversight, the parts AI can’t handle). In other words, jobs become decomposed: many tasks get shifted to AI, and only the leftover tasks require people — meaning fewer total workers may be needed for many roles.
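The decomposition argument above can be made concrete with a little arithmetic. The sketch below is purely illustrative: the task names, hour shares, and automation fractions are hypothetical, not GDPval data. It also assumes the total workload stays fixed, which ignores any growth in demand as costs fall.

```python
# Hypothetical job broken into tasks:
#   task name -> (share of weekly hours, fraction of that task AI can take over)
# These numbers are invented for illustration; they are not GDPval results.
TASKS = {
    "drafting":  (0.40, 0.90),
    "research":  (0.30, 0.80),
    "judgment":  (0.20, 0.10),
    "oversight": (0.10, 0.00),
}


def residual_human_share(tasks):
    """Fraction of the original human hours still needed after automation."""
    return sum(share * (1.0 - automated) for share, automated in tasks.values())


def workers_needed(current_workers, tasks):
    """Headcount needed for the same output, assuming the workload stays fixed."""
    return current_workers * residual_human_share(tasks)


if __name__ == "__main__":
    print(f"Residual human share of hours: {residual_human_share(TASKS):.0%}")
    print(f"Workers needed out of 100: {workers_needed(100, TASKS):.0f}")
```

With these made-up inputs, about 38% of the original hours still require a person, so roughly 38 workers could cover what 100 did before, even though no single task was fully handed over except in part. Changing the assumed fractions changes the answer, which is the point: the headcount effect depends on how much of each task is automated, not on whether the whole job is.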

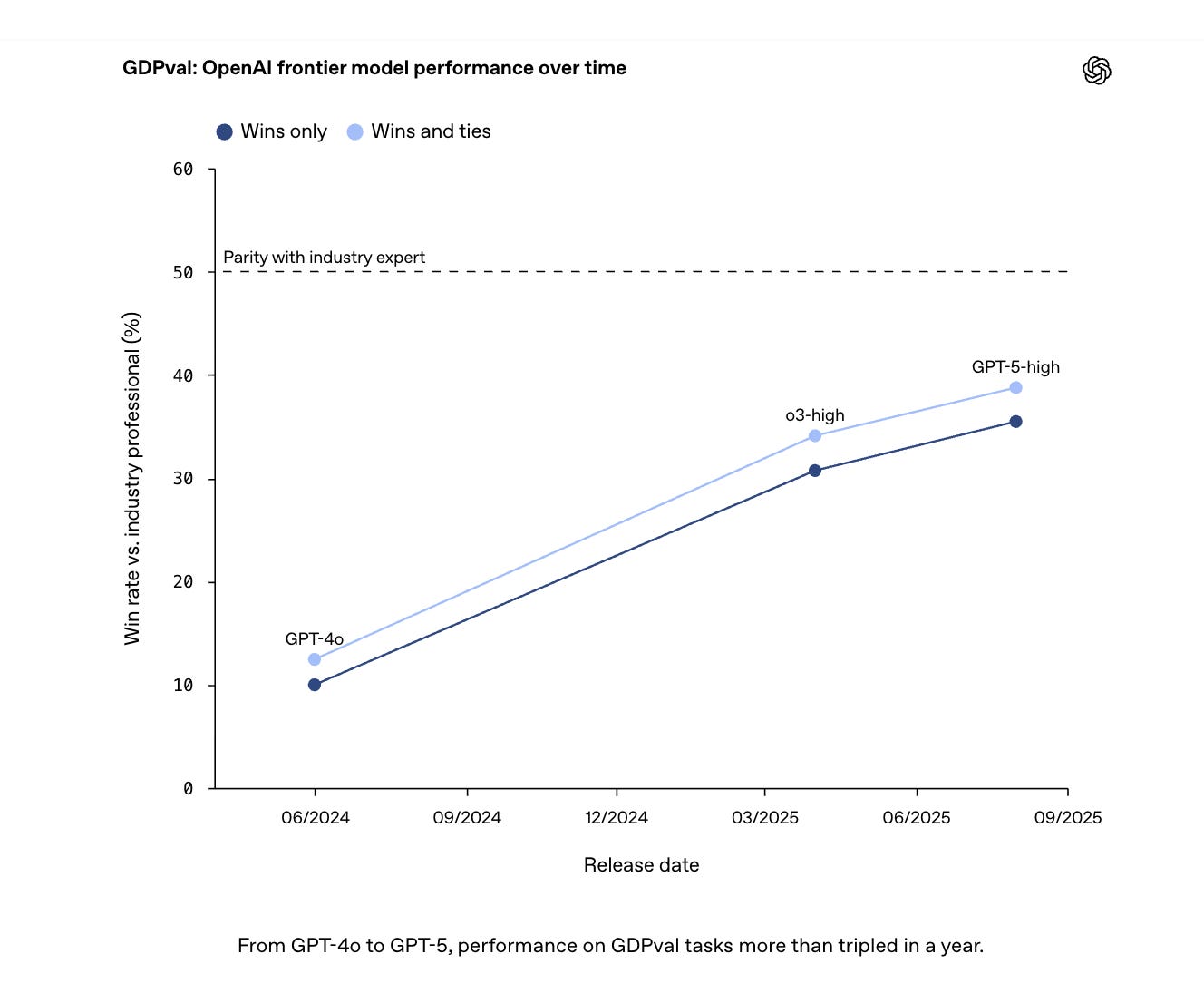

Based on this benchmark, model progress has been rapid.

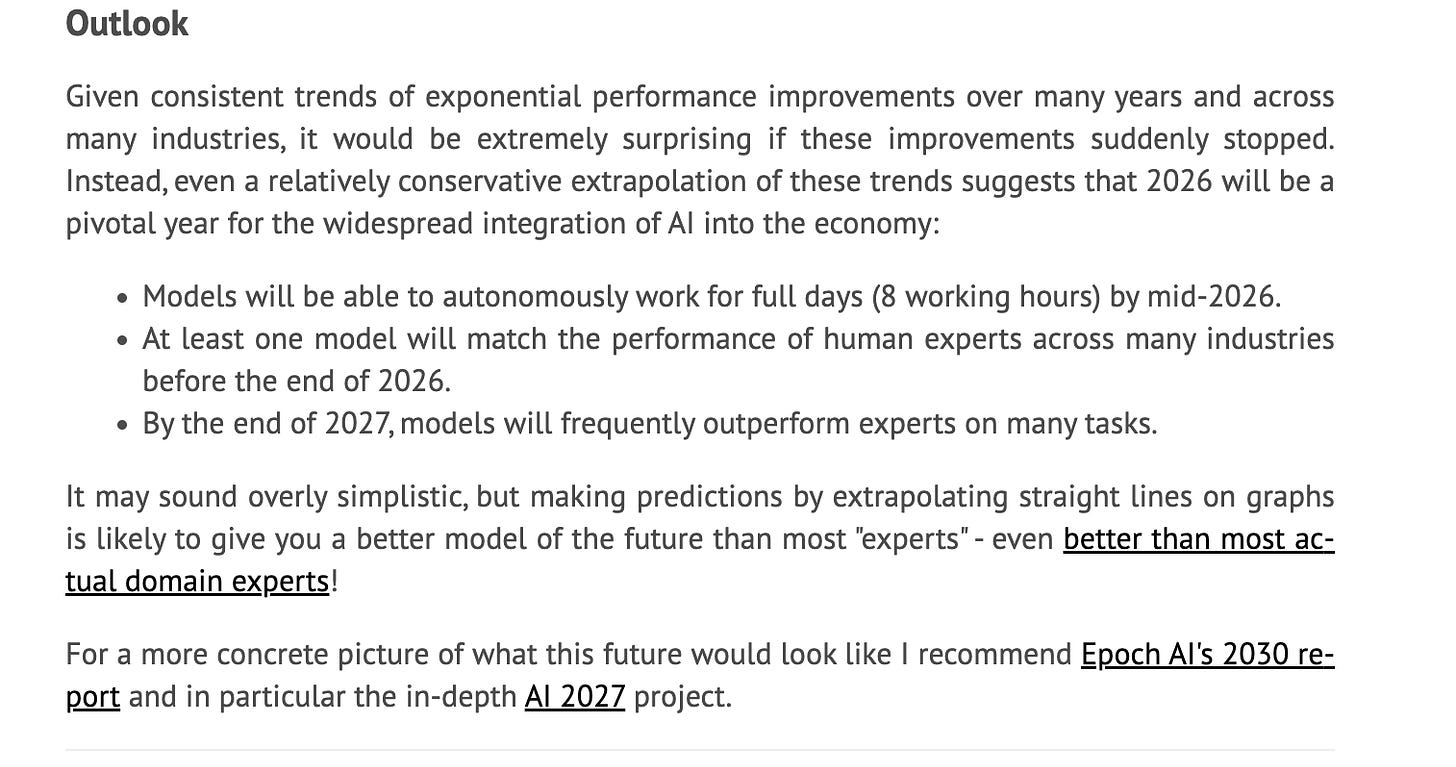

And, of course, we are likely to keep seeing arguably exponential improvements, potentially even a “rapid takeoff” once AIs start building AIs.

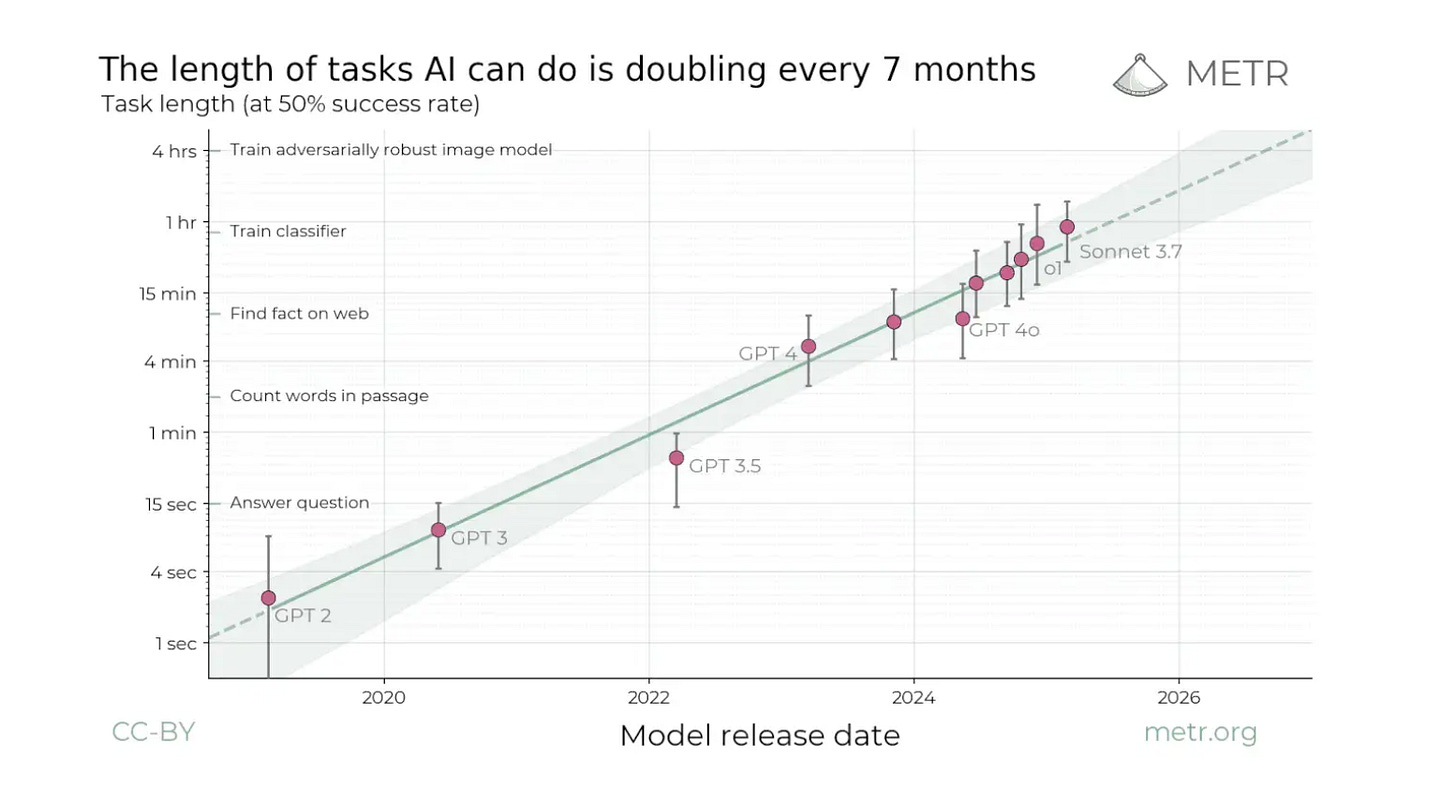

Julian Schrittwieser, the co-first author on AlphaGo, AlphaZero, and MuZero, shared METR’s update.

He projects -

[These are consistent with other projections I’ve read]

So, in other words, AI’s ability to perform more of workers’ tasks, more effectively and over longer stretches of time, will continue to grow. Eventually, probably between 2027 and 2035 (the median prediction is 2032), it will be reasonable to claim that AGI has been achieved. But even if it hasn’t, AI will still have a transformational impact on the economy.

ATI and Key Questions

This September, Erik Brynjolfsson, Anton Korinek, and Ajay K. Agrawal released “A Research Agenda for the Economics of Transformative AI” (NBER Working Paper 34256).

In the paper, they offer 100 research questions (see below) that they argue need to be explored as transformative AI (TAI, the paper’s term) starts to have a bigger impact on the economy. The questions stem from six major areas.

1. Transformative Economic Growth and Bottlenecks

The paper asks whether AI could trigger a “growth explosion” and what constraints — like energy, compute, or even governance — might limit it.

This isn’t just about faster GDP growth; it’s about structural shifts in how economies create value, organize production, and innovate.

There’s an implicit tension between the promise of abundance and the risk of bottlenecks that slow or distort that abundance.

2. Inequality and Power Concentration

Many questions probe how TAI might widen or narrow economic gaps — between workers and capital owners, small and large firms, rich and poor nations.

The paper ties this to political power: if wealth and decision-making concentrate, democratic institutions could erode while corporate or state technocracies rise.

There’s also the global angle — will AI amplify existing inequalities between developed and developing countries?

3. Labor, Meaning, and the Future of Work

If machines can do almost everything, what happens to jobs — and beyond that, to purpose, dignity, and identity?

The agenda explores whether policies could replicate the positive aspects of retirement (freedom, security) without the negative aspects of unemployment (isolation, loss of meaning).

It also asks if intrinsic reasons for work — community, creativity, contribution — matter enough to preserve in a post-work economy.

4. Safety, Alignment, and Governance

Several questions look at how to balance economic incentives for faster AI progress with the existential risks of getting it wrong.

There’s concern about race dynamics — companies and countries might prioritize speed over safety if being first gives strategic advantages.

Governance here isn’t just technical; it’s economic: how do we align incentives so firms and nations act in ways consistent with human welfare?

5. Information, Truth, and Polarization

TAI could either strengthen knowledge creation and dissemination or flood the world with misinformation and manipulation.

Economic questions emerge around incentives for truth-telling, the costs of misinformation, and the role of media, platforms, and governments.

There’s also the link to political stability: control over information flows is both an economic and democratic issue.

6. Transition Dynamics

Even if TAI brings long-term benefits, the transition period could be chaotic: unemployment spikes, social unrest, policy whiplash.

The agenda asks about policy tools — retraining subsidies, adaptive regulation, safety nets — to smooth the ride from here to there.

This feels like the least glamorous but most practical set of questions: how do we avoid a crash on the way to the future?

Questions for Educators

The paper mentions “education” only once, and in the context of training, but there are a number of key issues educators need to address across these topics.

1. Economic Growth

Big Picture Questions

If AI drives explosive economic growth, what kind of education helps students thrive in a future of abundance rather than scarcity?

Should we prepare students for jobs — or for lives of purpose in a world where work may no longer be the main source of meaning?

Practical Questions

How should schools redesign curriculum when economic skills change every few years?

What partnerships with businesses, universities, or governments can help schools stay ahead of labor market shifts?

2. Innovation, Discovery, and Invention

Big Picture Questions

Should schools teach students how to create with AI rather than just study facts about the world?

How do we balance teaching foundational knowledge with fostering creativity and curiosity?

Practical Questions

What hands-on experiences (e.g., robotics, entrepreneurship, debate) should schools integrate to prepare students for innovation-driven futures?

Should students learn AI literacy the way they currently learn reading and math?

3. Income Distribution

Big Picture Questions

If AI risks widening wealth and opportunity gaps, how can education be a tool for equity rather than a source of further inequality?

Should education guarantee equal access to AI tools and learning opportunities?

Practical Questions

How do we ensure rural and underfunded schools have the same access to AI-powered learning as wealthy districts?

Should governments or companies fund AI infrastructure for schools to prevent inequality from accelerating?

4. Concentration of Decision-Making and Power

Big Picture Questions

If a few companies or countries control most AI systems, what should students understand about power, democracy, and digital citizenship?

How do we teach students to question algorithms and understand who controls information?

Practical Questions

Should civic education include digital literacy and AI governance issues?

How can schools prepare students to be critical consumers and ethical users of AI?

5. Geoeconomics

Big Picture Questions

If AI reshapes global power dynamics, how can education help students see themselves as part of a global community?

Should students learn about AI’s role in trade, security, and global cooperation alongside history and civics?

Practical Questions

How can schools teach international collaboration skills in a world where AI drives both cooperation and conflict?

Should world languages, diplomacy, and cross-cultural communication get more emphasis in K–12 curricula?

6. Information, Communication, and Knowledge

Big Picture Questions

If AI floods the world with information and misinformation, how should schools teach critical thinking, media literacy, and debate?

Could AI make truth and evidence central to all subjects, not just science or history?

Practical Questions

How should schools update research, writing, and presentation assignments in a world where AI can generate text and images instantly?

Should debate, dialogue, and ethics courses be standard in all schools to fight polarization?

7. AI Safety and Alignment

Big Picture Questions

How can education help the next generation think about ethical AI development and responsible use?

Should students learn to weigh economic benefits against potential risks to humanity?

Practical Questions

Should K–12 students study topics like bias, transparency, and algorithmic fairness in math or computer science classes?

How do we train teachers to guide students through conversations about AI ethics and safety?

8. Meaning and Well-being

Big Picture Questions

If work disappears, how will students find meaning, purpose, and fulfillment?

Should schools shift focus from career preparation to human flourishing — creativity, empathy, wisdom?

Practical Questions

How should schools support mental health and belonging when AI transforms social life and relationships?

Should arts, sports, and service learning get more emphasis as sources of meaning beyond academics?

9. Transition Dynamics

Big Picture Questions

How can schools prepare students for a world that changes faster than education systems can adapt?

Should we treat adaptability as a core life skill alongside reading and math?

Practical Questions

How do we give teachers time, training, and resources to keep up with constant technological change?

Should education policy encourage pilot programs, innovation sandboxes, and rapid curriculum updates rather than waiting for top-down reforms?

Full Set of Questions Pulled from the Paper

Economic Growth

How can TAI change the rate and determinants of economic growth?

What will be the main bottlenecks for growth?

How can TAI affect the relative scarcity of inputs including labor, capital and compute?

How will the role of knowledge and human capital change?

What new types of business processes and organizational capital will emerge?

How can economists detect early signs of an AI-driven “growth explosion”?

Which bottlenecks will gain prominence in a TAI-driven economy?

Will energy availability, computational resources, or raw materials become key constraints?

How will the value and relevance of human capital evolve as TAI is developed?

Which human skills will become redundant and which will remain in demand?

How should education and training systems adapt to prepare workers?

What factors will influence the rate of TAI adoption?

What policies could promote optimal diffusion of TAI?

Invention, Discovery, and Innovation

For what processes and techniques will TAI boost the rate and direction of invention, discovery, and innovation?

Which fields of innovation and discovery will be most affected and what breakthroughs could be achieved?

How and where will TAI automate scientific discovery?

How will the ability to automate experimentation and problem-solving at scale influence technological progress?

What will be the likely bottlenecks?

What will be the impact on the frequency and quality of innovations?

To what extent will TAI identify previously unrecognized complementarities across disciplines?

How might TAI shift innovation from local to global discoveries?

Will TAI democratize the innovation process or concentrate it further?

Will TAI allow small firms or individuals to perform sophisticated R&D?

Will humans still need to articulate desired outcomes while TAI does most of the innovation work?

What will be the new bottlenecks in innovation?

To what extent will TAI shift the distribution of agents in the innovation process?

Will innovation production shift from human brains to compute?

What will be the marginal value of intelligence itself?

In what domains will frontier models be most valuable?

Income Distribution

How could TAI exacerbate or reduce income and wealth inequality?

How could TAI affect labor markets, wages, and employment?

How might TAI interact with social safety nets?

Will the capabilities of TAI largely displace workers or create new labor demand?

How might changing bottlenecks affect distribution of gains across populations?

What will jobs look like if machines can perform essentially all tasks?

What are the implications for wages, employment, and unemployment rates?

Will compute and robots remain scarce and expensive enough to preserve a role for labor?

Will TAI lead to higher income inequality or lift all boats equally?

How will outcomes differ if AI systems are proprietary vs. open source?

Will current social safety nets function effectively in a TAI world?

If not, how can they be adapted?

Concentration of Decision-making and Power

What are the risks of AI-driven economic power becoming concentrated in a few hands?

How might AI shift political power dynamics?

Will TAI be dominated by a single AI system, a few systems, or many competing systems?

Will larger firms gain advantage over smaller competitors?

Will centrally-planned economies find new life?

Will AI democratize expertise and foster competition?

Which decisions will become centralized vs. decentralized, and under what conditions?

If TAI depresses wages, how will economic bargaining power shift?

Will capital owners, governments, or technologists gain more power?

How should control rights between humans and machines be allocated?

Will economic concentration spill over into political concentration?

Will centralized systems erode individual liberty?

How will AI concentration affect regulatory capture risks?

What governance structures balance innovation incentives with risk management?

Geoeconomics

How could AI redefine international trade, security, governance, and inequality?

How will TAI reshape deterrence and the balance of power?

Will TAI challenge traditional notions of nation-state power?

How will TAI change cyberwarfare economics and critical infrastructure defense?

Can regulations manage dual-use risks without harming growth?

Will TAI exacerbate global economic inequalities between nations?

What determines which countries capture the most value?

How will TAI reshape global trade patterns and emerging economies’ competitiveness?

What policies can technologically lagging countries adopt to avoid marginalization?

How might inequality affect global migration, stability, and cooperation?

How can international institutions balance sovereignty with oversight?

How can we prevent regulatory arbitrage between countries?

Information, Communication, and Knowledge

How can truth vs. misinformation be amplified or dampened by TAI?

How can TAI affect the spread of information and knowledge?

How will TAI affect the quality of information flows?

How can we incentivize high-quality information production?

Will AI-generated content provide novelty and insights—or spread falsehoods?

Could TAI overwhelm human-produced content entirely?

What are TAI’s implications for political stability and social cohesion?

How might TAI shape public opinion or disrupt political processes?

Will TAI influence state surveillance and civil liberties?

AI Safety and Alignment

How can we balance economic benefits with catastrophic risks?

What can economists contribute to AI alignment research?

How can social welfare functions be adapted for AI alignment?

What incentive structures encourage safe AI development?

How do we handle preference aggregation and value learning for AI?

What methods assess trade-offs between growth and existential risk?

Under what conditions is slowing or halting AI development rational?

How do we prevent AI race dynamics from undermining safety?

How do we handle open-source vs. closed-source safety trade-offs?

Meaning and Well-being

How can people retain meaning and worth if “the economic problem” is solved?

What objectives should TAI be directed toward?

What factors explain positive retiree experiences vs. negative unemployment impacts?

Can economic policy replicate retirement’s positive aspects for displaced workers?

Will people make welfare-maximizing choices in a post-work world?

What externalities or internalities justify work-promoting policies?

How do we measure mental health and well-being effects accurately?

How can we design institutions for meaning in a post-work society?

Can TAI itself help create new sources of meaning and fulfillment?

How do we equitably distribute not just wealth but also meaningful activities?

Transition Dynamics

How does the speed mismatch between TAI and complementary factors affect rollout?

How can adjustment costs be minimized during transition?

How should we respond to crises like mass unemployment or system failures?

How can retraining subsidies, regulatory sandboxes, or grants speed adaptation?

What transition pathways maximize social welfare?