Human-Level Intelligence for Second Semester? We are arguably getting close.

One could make a weaker case that we are already there.

There have been many announcements from Eleven Labs, Google (Gemini+), Spatial Intelligence, and OpenAI over the last two weeks that have surprised, even shocked, many people.

Today, OpenAI announced its new o3 model. This model is still in safety testing, but it will be released towards the end of January/early February, as second semester takes off.

Although it arrives only three months after the release of o1, the model has made incredible strides.

Coding

In competitive coding, only 172 humans in the world outperform it (and only 1 of those humans works at OpenAI, according to the ).

Math

96.7% on AIME 2024 (missed one question)

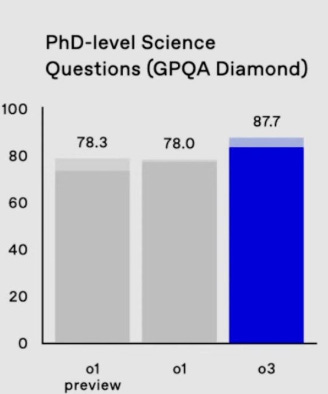

PhD-Level Science

A human PhD will score approximately 70.

Don’t forget that, unlike humans, these models basically have PhDs in most things. Most people never earn even one PhD. A few people have two. Imagine that not only do you have a PhD in nearly all fields, but you can also reason across nearly all of them at the PhD level. No human being can do that.

Reasoning

There has been a lot of debate about AI and reasoning, but I think it’s fair to say the ARC-AGI Competition has been a gold standard: Can an AI system efficiently acquire new skills outside the data it was trained on (“Adaptive Learning”)?

According to OpenAI, o3 achieved a peak score (when a massive amount of compute was used) of 87.5%. For context, 85% is generally considered equivalent to human-level performance. 100% represents perfect performance.

Note that ChatGPT4, the most advanced model many people have used, is not even on here, as it could not score more than 5%.

The founder of the test was present for the rollout of o3.

Note: If it can adapt to novel tasks, its abilities are not limited to its training data.

This doesn’t mean we’ve achieved AGI (it takes a lot of compute to get to 80%+ and there are criticisms of ARC), but it means we are close.

And AI is still in its infancy.

What does this all mean?

Most of our students will graduate into an AGI-like world. I’ve been saying this for two years, though most people ignore me and that reality.

Former Director of AGI Safety at OpenAI noted today (12-20-24):

Computers will be able to do a lot (maybe most) of what we are training our students to do. That doesn’t mean the training they are getting in school is all irrelevant, but I think it means we need to think very hard about what we are teaching students and why. There is only so much time for students to learn content and skills in school, and we need to choose carefully what we teach them. The content and skills they learn must be relevant in a world of highly intelligent machines.

Where is the wall to AI progress? If there is one, it seems inference computing has conquered it.

(3) Next year?

(4) This made me laugh

(5) From what we can see, advances in reasoning have mainly led these improvements.

In 2025, we may also achieve infinite memory.

Human-Level NLP + Human-Level Reasoning + Human-Level+ (maybe infinite) Memory + (some) planning abilities = ?

Enjoy the Holidays!

Thanks Stefan. Enjoyed your posts in 2024 and looks like possibly a wilder ride for education in 2025!