Chapter I. When AI Surges Beyond Expectations: Guiding Students Toward Deep, Durable Learning

Humanity Amplified

This is a revised version of Chapter 1 of Humanity Amplified. The current version of Humanity Amplified is accessible to all paid subscribers of this Substack at the “Subscription and Book” link above. If you are interested in consultations or keynotes, please reach out at stefanbauschard@globalacademic.org. And please encourage your students to join the Global AI Debates!

__

Prioritizing Investment in Machine Intelligence Over Human Intelligence

Developing human intelligence in a way that amplifies an individual’s capabilities has been a central goal of both the educational system and society at large for thousands of years. However, in recent years the focus has shifted toward developing artificial intelligence (AI) in computers, allowing them to perform tasks that otherwise required the efforts of “intelligent beings,”[1] particularly human beings.

These efforts have been undertaken with “colossal computing firepower and brainpower[2]” and financial resources, as the world's largest and most valuable technology companies are engaged in an ambitious race to develop advanced AI systems. In the US, companies such as Anthropic (Claude), Apple, Microsoft (Copilot), Amazon, Google (Gemini), Meta (formerly Facebook), OpenAI (ChatGPT), and X (Grok) - with a staggering combined market value of $7+ trillion - are pouring massive resources into AI research to support fully embodied AI.

The UAE has just agreed to invest $1.4 trillion in the US, including into AI, over the next decade[3]. Peter Diamandis estimates that $1 billion+ per day is being invested in AI, and that data centers are being built everywhere[4]. In June 2025, Meta invested billions in a new superintelligence initiative[5]. By 2030, we are likely to see a 10,000x increase in model training compute[6].

Beyond investment, there is purchase spending on AI. Worldwide generative AI (GenAI) spending is expected to total $644 billion in 2025, an increase of 76.4% from 2024, according to a forecast by Gartner, Inc[7].

Sovereign AI will drive greater demand for secure onshore data centers, cutting-edge GPUs, resilient energy supplies, and home-grown AI talent as governments scramble to keep strategic models within their borders[8]. Sovereign AI, first popularized by NVIDIA, means a nation can develop, train, and deploy artificial-intelligence systems entirely with its own infrastructure, data, workforce, and business networks, protecting sensitive information while embedding local languages and values.

In Europe, leaders have pledged €20 billion for four “AI factories” and partnered with NVIDIA and Mistral to install more than 3,000 exaFLOPs of Blackwell compute, positioning the bloc for digital independence[9]. India’s new IndiaAI Mission selected Sarvam AI to train a sovereign multilingual LLM covering the country’s 22 official languages[10]. The UAE’s Technology Innovation Institute released the Falcon-H1 model as an NVIDIA NIM micro-service so Gulf agencies can run chatbots on air-gapped hardware[11]. Japan’s AI Promotion Act funds a national cloud to host foundation-model R&D under its strict privacy rules[12]. Canada’s Sovereign AI Compute Strategy is subsidizing domestic GPU clusters[13], while the U.S. Department of Defense awarded OpenAI a $200 million “OpenAI for Government” contract to build classified-ready models[14]. Even smaller nations such as Denmark have installed national supercomputers like “Gefion” to train culturally specific models, underscoring how the quest for AI autonomy is reshaping global compute markets[15].

Since the first version of this book was written, many of the original employees have left OpenAI, the company that built ChatGPT, to form their own AI companies with astronomical valuations. Ilya Sutskever, OpenAI’s Chief Scientist, left to start Safe Superintelligence, which is now valued at $30 billion. Mira Murati, OpenAI’s Chief Technology Officer (CTO), just announced her new venture, Thinking Machines. On March 3, 2025, Anthropic announced a new raise of $3.5 billion on a $60 billion valuation.

In China, companies such as Baidu (ErnieBot), DeepSeek, and Alibaba (Qwen) have all released impressive AI tools. Europe lags but is famous for Mistral. One of the most notable additions to Mistral's Le Chat assistant is "Flash Answers," which can generate responses at up to 1,000 words per second. Mistral AI says this makes Le Chat the fastest AI assistant currently available.

The global AI in education market alone was estimated to be between $4.8 billion and $5.88 billion in 2024[16]. Industry projections suggest substantial growth, with varying forecasts indicating the market could reach $12.8 billion by 2028[17], $32 billion by 2030[18], $41 billion by 2030[19], $54 billion by 2032[20], or even $75 billion by 2033[21].

Why are companies (and countries) investing so much in AI? Because the impact is expected to be greater than that of fire and electricity. Why? Because AI will also be able to create, including creating more sophisticated versions of AI, on its own[22]. We’ve never previously had a technology that could do that.

Already, the investments have paid off[23]. “AI factories” are “generating intelligence” while computer scientists make progress not only toward developing machines that can think and develop super-human “logical” intelligence, but they are also making progress in at least simulating traits such as empathy[24] and creativity[25] that were once thought to be "uniquely human.[26]” Are you feeling down? AI is even better than people at reframing negative situations to pick you up[27], as it can now visually detect and react to nuances in conversation[28]. Therapy and companionship have become the number one uses of AI[29].

Investment Translates to Human+-Level Intelligence

As a result of these efforts and the lack of similar attention to advancing and augmenting intelligence in humans, computers are now projected to equal or surpass human intelligence in all domains in which humans are intelligent[30], something often referred to as artificial general intelligence (AGI). Many believe this could happen within five years[31]. Ray Kurzweil, the oldest living AI scientist, recently noted that his original prediction of 2029 for AGI is now conservative[32]. Many of the world’s top experts (Brin[33], Hassabis[34], Amodei[35], Altman[36]) are starting to converge on AGI predictions of slightly before or slightly after 2030. Amodei envisions a seismic shift in artificial intelligence within the next two to five years, with 2026 possible for “powerful AI”[37]. He believes AI models will become so immensely capable that they will effectively transcend human control or oversight.

Some believe that an “AI takeoff,” where AIs start making significant contributions to building AIs, could happen as early as 2026[38] or 2028[39]. What will these capabilities mean? According to Meeker et al[40],

Predictions 10 years out are more unreliable, but still shocking, even if in the ballpark.

Half of American adults already believe that AI is smarter than they are[41]. Sam Altman, OpenAI’s CEO, recently wrote:

(W)e have recently built systems that are smarter than people in many ways… ChatGPT is already more powerful than any human who has ever lived… 2025 has seen the arrival of agents that can do real cognitive work; writing computer code will never be the same. 2026 will likely see the arrival of systems that can figure out novel insights. 2027 may see the arrival of robots that can do tasks in the real world[42].

Before we have computers that are more intelligent than us in all ways, we will see computers surpass human intelligence in particular areas, which, as noted, is already starting to happen, even if the development of autonomous intelligence may be far off[43]. We have artificial intelligence bots (“AIs”) that have more content knowledge than any single human, have greater accuracy in making predictions than humans, are undertaking aggressive efforts to improve their factual accuracy, have developed vision, and have learned how to learn, so they can acquire new knowledge on their own with minimal human intervention. They can engage in written and verbal conversations with us in more than 200 different languages and can produce incredible output in image and video as well[44].

According to a recent report from the Stanford Center for Human-Centered AI, AI already surpasses human performance in image classification, visual reasoning, and English understanding[45]. It’s more likely to persuade[46] us or manipulate us[47] than a human. A new NVIDIA-MIT model can even reason across images, learn in context, and understand videos[48]. A recent study shows AIs can “outperform financial analysts in its ability to predict earnings changes” and can “generate useful narrative insights about a company's future performance,” suggesting that AIs “may take a central role in decision-making.”[49] We can even send AIs to meetings on our behalf to represent us[50].

Academically, AI tools are performing exceptionally well on tests that are traditional measures of intelligence. GPT-4, which is by now a dated model, scored a 1410 on the SAT and got 5s on most AP exams[51]. It also did well on the Uniform Bar Exam[52] and the Dutch national reading exam (8.3/10[53]), and it passed the US National Medical Licensing Exam[54] and most of the Polish Board certification examinations[55]. Gemini (Google)[56] offers some modest improvements in the abilities that produced the GPT-4 scores[57]. Grok, a new model from xAI, performs reasonably well on multiple academic tests[58]. This is just Grok 2; Grok 3 was released in February 2025. AlphaGeometry2, an improved version of AlphaGeometry, has achieved gold-medal level performance in solving IMO geometry problems. It solved 42 out of 50 problems from the IMO competitions between 2000 and 2024, surpassing the average score of human gold medalists[59]. The success of AlphaProof and AlphaGeometry2 demonstrates the rapid progress in AI's ability to tackle advanced reasoning tasks. These systems exhibit skills in logical reasoning[60], abstraction, hierarchical planning, and setting subgoals, which are challenging for most current AI systems. Creating AI systems that can solve challenging mathematics problems could pave the way for human-AI collaborations, assisting mathematicians in solving and inventing new kinds of problems.

Practically, a new study has shown that a human working with an AI can already work as well as a two-person team, and that human teams that use AI can already significantly outperform all-human teams[61]. And this study was done with technology that was available in July 2024.

Creativity

There is also ample evidence that AI is creative, as proven by several tests, including the Torrance Tests of Creative Thinking, the Unusual Uses Test, and the Remote Associates Test. The Torrance Tests assess fluency (the ability to generate many ideas), flexibility (considering a variety of approaches), originality (coming up with unique and uncommon ideas), and elaboration (adding details to initial ideas)[62]. AI systems have shown the ability to generate many novel ideas, combine concepts in unexpected ways, and expand on initial prompts - potentially demonstrating creativity on these measures.[63] The Unusual Uses Test requires thinking of creative uses for a common object[64], which AI language models have proven capable of by proposing innovative repurposing ideas[65]. The Remote Associates Test presents three words and requires finding a fourth word that relates to all three[66] - an ability AI has displayed by making insightful analogy connections[67].

Creativity is the driving force behind innovation and economic growth. It fuels the development of new products, services, and business models that meet evolving consumer needs and desires[68]. Creative minds conceive groundbreaking ideas that disrupt industries, spark technological advancements, and open entirely new markets. From the light bulb to the smartphone, many of humanity's most transformative inventions stemmed from creative thinking. And creativity powers industries like entertainment, fashion, and advertising that rely on generating fresh concepts to captivate audiences and influencers.

Images

The quality of images generated from text has simply exploded[69].

Video

The growth of video tools such as Sora (OpenAI), Kling, and Veo 3 (Google/Gemini) is enabling the production of incredible short clips, commercials, and even movies.

Understanding AI Agents: From Conversation to Action

The Evolution from "Say" to "Do"

Traditional AI systems like ChatGPT primarily respond to questions—they "say" things through text or voice. But AI agents represent a fundamental shift: they can act in the digital world to accomplish tasks autonomously.

As Mustafa Suleyman (Microsoft's VP of AI and DeepMind co-founder) explained in August 2023, we're moving beyond AI that just talks to AI that actually does things.

What Are AI Agents?

Google defines AI agents as autonomous software systems with three core capabilities:

1. Perceive - They gather information from their environment through sensors or data inputs

2. Reason - They analyze what they've perceived and make decisions based on their knowledge

3. Act - They take concrete actions in their environment to achieve specific goals

In simple terms: An AI agent is a goal-oriented smart program that can work independently, making decisions and taking actions without constant human guidance.
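The perceive, reason, act cycle can be made concrete with a toy example. The sketch below is only an illustration, not how any production agent is built: the `ThermostatAgent` class, its thresholds, and the simulated `room` dictionary are all invented for this example. It shows a program pursuing a goal (a target temperature) by repeatedly reading its environment, deciding, and acting.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Hypothetical toy agent that keeps a simulated room near a target temperature."""
    target: float
    log: list = field(default_factory=list)

    def perceive(self, environment: dict) -> float:
        # Perceive: read a data input from the environment.
        return environment["temperature"]

    def reason(self, temperature: float) -> str:
        # Reason: choose an action based on the reading and the goal.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Act: change the environment in pursuit of the goal.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta
        self.log.append(action)


def run(agent: ThermostatAgent, environment: dict, steps: int) -> dict:
    # The agent loop: no human chooses the individual actions.
    for _ in range(steps):
        reading = agent.perceive(environment)
        action = agent.reason(reading)
        agent.act(action, environment)
    return environment


room = {"temperature": 17.0}
agent = ThermostatAgent(target=21.0)
run(agent, room, steps=6)
print(agent.log, room["temperature"])
```

Real AI agents replace the hand-written `reason` rule with a language model and the simulated room with tools such as web search or code execution, but the loop has the same shape.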

Michael Lieben created this nice flow chart.

Real-World Example: Anthropic's Claude Research Agent

To understand how this works in practice, consider Anthropic's Claude Research agent, released in June 2025[70]:

The Multi-Agent Architecture

Instead of one AI trying to handle everything, the system uses a team approach:

· Lead Agent (Claude Opus 4): Acts as the project manager, interpreting your request and creating a research plan

· Specialized Sub-Agents (Claude Sonnet 4): Work in parallel as research specialists, each focusing on different aspects of the search

· Orchestrator: Coordinates the entire team's efforts

· Memory Module: Combines all findings into a comprehensive, well-sourced report.
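The team structure above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Anthropic's implementation: the functions `lead_agent_plan`, `sub_agent_research`, and `combine` are hypothetical stand-ins that return canned strings, where a real system would call language models and search tools.

```python
from concurrent.futures import ThreadPoolExecutor


def lead_agent_plan(request: str) -> list[str]:
    # Hypothetical lead agent: splits the request into sub-questions.
    # A real system would ask a language model to write this plan.
    aspects = ("background", "recent data", "open questions")
    return [f"{request}: {aspect}" for aspect in aspects]


def sub_agent_research(sub_question: str) -> dict:
    # Hypothetical sub-agent: a real one would search and read sources.
    return {"question": sub_question, "finding": f"notes on {sub_question}"}


def combine(findings: list[dict]) -> str:
    # Stand-in for the step that merges all findings into one report.
    return "\n".join(f"- {f['question']} -> {f['finding']}" for f in findings)


def research(request: str) -> str:
    plan = lead_agent_plan(request)
    # Sub-agents run in parallel; map() returns results in plan order.
    with ThreadPoolExecutor(max_workers=len(plan)) as pool:
        findings = list(pool.map(sub_agent_research, plan))
    return combine(findings)


report = research("AI in education")
print(report)
```

The design point is the fan-out and fan-in: one planner creates independent sub-tasks, several workers handle them at the same time, and a final step merges the results.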

In internal tests, this multi-agent setup outperformed a standalone Claude Opus 4 agent by 90.2%. Claude Opus 4 serves as the lead coordinator, while Claude Sonnet 4 powers the sub-agents. To evaluate performance, Anthropic uses an LLM-as-judge framework, scoring outputs based on factual accuracy, source reliability, and effective tool use. They argue this method is more consistent and efficient than traditional evaluation approaches, positioning large language models as managers of other AI systems.

Why This Approach Works Better

The key advantages of the multi-agent design:

· Speed: Multiple agents work simultaneously rather than sequentially

· Specialization: Each sub-agent can focus on what it does best

· Comprehensiveness: Parallel research covers more ground more thoroughly

· Quality: Better coordination leads to more accurate, well-sourced results

Measuring Success

Anthropic evaluates their agents using an "LLM-as-judge" framework, scoring outputs on:

· Factual accuracy

· Source reliability

· Effective tool usage

This represents a shift toward AI systems managing other AI systems—a glimpse into how complex AI workflows might operate in the future.
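A rubric of this kind can be sketched as follows. Everything here is an assumption made for illustration: the source does not say how Anthropic combines the criteria, so the unweighted average, the 0-to-1 scale, and the `stub_judge` rules are invented. In practice `stub_judge` would be replaced by a call to a judging model.

```python
from statistics import mean

CRITERIA = ("factual_accuracy", "source_reliability", "tool_use")


def stub_judge(output: str, criterion: str) -> float:
    # Placeholder for a judging model; returns a score between 0 and 1.
    # These rules are invented purely so the example runs.
    if criterion == "source_reliability":
        return 1.0 if "[source]" in output else 0.2
    if criterion == "factual_accuracy":
        return 0.9
    return 0.7


def judge(output: str, judge_fn=stub_judge) -> dict:
    # Score the output on each criterion, then average (an assumed rule).
    scores = {c: judge_fn(output, c) for c in CRITERIA}
    scores["overall"] = round(mean(scores[c] for c in CRITERIA), 3)
    return scores


cited = judge("Claim A [source]")
uncited = judge("Claim A")
print(cited["overall"], uncited["overall"])
```

Because the judge is just a function argument, the same harness can compare different judging models or rubrics on identical outputs.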

The Bigger Picture

AI agents mark a transition from AI as a conversational tool to AI as an autonomous digital workforce, capable of perceiving, reasoning, and acting to accomplish complex, multi-step tasks with minimal human oversight.

As of now, most agents, at least outside of coding, can only operate autonomously for about 30 minutes, but in coding, agents can already operate for up to 8 hours, and in narrow areas (Pokemon Go) for 24 hours. These capabilities are available in the new Claude model, but also in others. The length of time for which AI agents can operate autonomously in a reliable way doubles every 7 months, so even if we start from the minimum of 30 minutes, we can expect 30 minutes > 1 hour > 2 > 4 > 8 > 16 > 32 hours. That is six doublings, or about 42 months. So, within 3-4 years, we can at least expect agents to operate autonomously across many domains for more than a full day (about 32 hours).
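The doubling arithmetic in the paragraph above can be checked directly. The short sketch below assumes, as the text does, a 30-minute starting point and a constant 7-month doubling time; the function names are made up for this example, and the constant doubling rate is itself an extrapolation rather than a guarantee.

```python
import math


def doublings_needed(start_hours: float, target_hours: float) -> int:
    # Number of doublings needed to reach at least target_hours.
    return math.ceil(math.log2(target_hours / start_hours))


def horizon_after(start_hours: float, months: float, doubling_months: float = 7.0) -> float:
    # Autonomous working time after `months`, if it doubles every `doubling_months`.
    return start_hours * 2 ** (months / doubling_months)


start = 0.5  # 30 minutes, the conservative starting point used in the text
n = doublings_needed(start, 32)       # doublings to get from 30 minutes to 32 hours
months = n * 7                        # calendar time at one doubling per 7 months
print(n, months, horizon_after(start, months))
```

Under these assumptions the result is six doublings over 42 months, ending at 32 hours. Evaluating `horizon_after` at 36 and 48 months gives roughly 18 and 58 hours, which brackets the "3-4 years" estimate.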

Investment Translates to Use of Non-Human Intelligence

Investment has not only translated into greater abilities but also into greater use. According to Meeker et al (2025), even only looking at ChatGPT, AI use has skyrocketed.

Here you can see comparisons to other technologies.

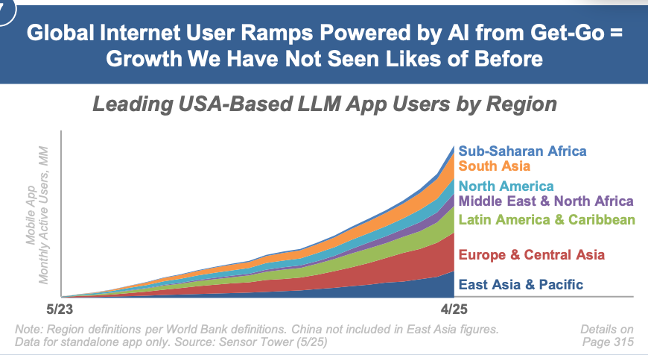

And this use is not limited to the US or the Western world.

AI Brains Enter Robots

Now we are starting to see these “artificial brains” placed in robotic bodies that can move, manipulate objects, and interact with the physical world. This combination allows robots to adapt to new environments, perform useful tasks, and learn from experience. It marks a major step toward embodied intelligence, where machines no longer just think but act. As these systems enter homes, hospitals, and workplaces, they raise new possibilities, and new challenges, for society.

Fei‑Fei Li isn’t just pushing AI to “see” faces—she’s championing spatial and physical intelligence, the ability for machines to perceive, understand, and interact within 3D environments. Her new startup, World Labs, is developing Large World Models that go beyond pixel-level vision to internalize object dimensions, motion, and spatial relationships—skills fundamental to both facial awareness and physical manipulation[71]. Meanwhile, Meta’s V‑JEPA 2 (Video Joint Embedding Predictive Architecture 2) offers a powerful example of this embodied intelligence in action, training on millions of hours of video and refining skills with just 62 hours of robot interaction to enable zero-shot pick-and-place and action-conditioned planning[72].

Together, these advances represent a leap forward: robots that not only recognize a smile or the structure of a face but also predict and respond in real time to physical environments. This marriage of facial intelligence (recognizing human expressions) and physical intelligence (reasoning about objects and movements) is a key step toward robots that understand not just who we are, but how we live—and can assist accordingly.

In this demonstration, the robot chooses the apple when the person asks for something to eat among the items in front of it[73].

Figure's Helix AI system, unveiled in February 2025, represents a breakthrough in robotics, enabling humanoid robots to perform complex movements through voice commands without requiring specific training for each object. The system combines a 7-billion-parameter language model serving as the "brain" with an 80-million-parameter AI that translates instructions into precise movements, allowing simultaneous control of 35 degrees of freedom while requiring only 500 hours of training data.

This development holds significant technological importance as it addresses a fundamental challenge in robotics: creating machines that can adapt to unfamiliar environments rather than requiring reprogramming for each new task. By enabling robots to understand natural language commands and interact with previously unseen objects, Helix potentially brings us closer to practical household robotics applications, while also highlighting a strategic shift in the industry as Figure moves away from OpenAI collaboration to develop specialized AI systems for high-speed robot control in real-world situations.

Helix's efficiency shows in its training requirement of just 500 hours of data, far less than similar robotics AI systems. By operating on embedded GPUs directly within the robots, the system achieves the computational performance needed for real-world commercial applications. 1X Technologies/Redwood AI has recently started testing robots for home use[74].

These abilities will only grow. AI “godfathers” Geoffrey Hinton and Yoshua Bengio, as well as others, recently noted:

(C)ompanies are engaged in a race to create generalist AI systems that match or exceed human abilities in most cognitive work. They are rapidly deploying more resources and developing new techniques to increase AI capabilities, with investment in training state-of-the-art models tripling annually. There is much room for further advances because tech companies have the cash reserves needed to scale the latest training runs by multiples of 100 to 1000. Hardware and algorithms will also improve: AI computing chips have been getting 1.4 times more cost-effective, and AI training algorithms 2.5 times more efficient, each year. Progress in AI also enables faster AI progress—AI assistants are increasingly used to automate programming, data collection, and chip design. There is no fundamental reason for AI progress to slow or halt at human-level abilities. Indeed, AI has already surpassed human abilities in narrow domains such as playing strategy games and predicting how proteins fold. Compared with humans, AI systems can act faster, absorb more knowledge, and communicate at a higher bandwidth. Additionally, they can be scaled to use immense computational resources and can be replicated by the millions. We do not know for certain how the future of AI will unfold. However, we must take seriously the possibility that highly powerful generalist AI systems that outperform human abilities across many critical domains will be developed within this decade or the next.

This is all occurring as human test scores in reading, math, and science decline internationally[75].

AI as a General-Purpose Technology

The ramifications of the development of AI for society will be large. AI is an advanced omni-use[76] or “general purpose” technology because it has the potential to transform and disrupt many industries simultaneously. AI exhibits versatility and broad applicability, with the ability to perform a wide variety of cognitive tasks at or above human levels. It can be applied to diverse domains such as healthcare, education, finance, manufacturing, and more. Like other general-purpose technologies throughout history, AI is expected to lead to waves of complementary innovations and opportunities. It will drastically change how we live and work, and drive economic growth, much as the steam engine and the internal combustion engine did.

Of course, there have been general-purpose technologies in the past. The difference is that earlier general-purpose technologies like the steam engine, electricity, and the internal combustion engine took a long time to permeate and transform society because they required extensive new infrastructure and hardware. The steam engine required building entire new transportation networks of railroads and steamships. Electricity necessitated wiring buildings and constructing power plants. ChatGPT, on the other hand, took 60 days to reach 100 million users[77], making it the fastest adopted technology in history. It gained 1.6 billion users by June 2023[78]. It currently has 400 million weekly users[79]. But it’s just one AI; models from companies such as Anthropic, OpenAI, Google, and others discussed above are being integrated almost seamlessly into software, such as Microsoft Office and Google Docs, running on hardware people already own and use daily. Meta, for example, recently released Llama 3, its latest large language model, and integrated it into the Meta AI assistant across its major social media and messaging platforms, instantly distributing the technology to its 3.19 billion daily users[80].

Computers as Employees, Productivity, and Economic Growth

Application of these growing abilities in the workplace should enable productivity to soar beyond the impact they are already having[81]. Ethan Mollick points out that “Early studies of the effects of AI have found it can often lead to a 20 to 80 percent improvement in productivity across a wide variety of job types, from coding to marketing. By contrast, when steam power, that most fundamental of General Purpose Technologies, the one that created the Industrial Revolution, was put into a factory, it improved productivity by 18 to 22 percent[82].” In a recent report, Andrew McAfee from MIT notes that “close to 80% of the jobs in the U.S. economy could see at least 10% of their tasks done twice as quickly (with no loss in quality) via the use of generative AI[83].” According to a recent study, customer support agents could handle 13.8 percent more customer inquiries per hour, business professionals could write 59 percent more business documents per hour, and programmers could code 126 percent more projects per week[84]. This could generate trillions[85] of additional dollars in economic growth that could drive the development of new industries and jobs and/or potentially be shared across society. Everyday people are starting to use these technologies in their workflows[86]. Berkeley computer scientist Stuart Russell anticipates this will produce $14 quadrillion in economic growth[87].

And it’s not just simple digital AI assistants. Archie, the flagship product of P-1 AI, is designed to function as a full-fledged “junior engineer” embedded directly in industrial firms’ existing workflows. Instead of selling a discrete software license, P-1 offers Archie as a Slack- or Teams-native colleague who takes design tickets, runs CAD/CAE tools, iterates with human reviewers, and steadily improves by digesting proprietary data behind the customer’s firewall. The system couples physics-aware synthetic training data with graph neural networks and language models, enabling it to automate routine variant engineering tasks—starting with narrowly scoped use-cases like data-center cooling equipment and scaling each year to more complex sectors such as automotive and, eventually, aerospace. P-1 positions this labor-based pricing model as a way to map onto engineering head-count budgets, arguing that Archie frees senior engineers for novel work while capturing institutional know-how in machine memory, thereby accelerating hardware innovation in much the same way code copilots have sped up software development[88].

Once computers can engage in advanced reasoning and planning, they will be able to take over more day-to-day tasks, including work responsibilities that require those skills[89], such as key job functions in finance, law, production of creative works, and mid-level management.

Last year, significant efforts were made to enhance AI capabilities beyond simple inference reasoning. (There is some debate as to whether models can currently engage in basic inference reasoning; a comprehensive review of the entire reasoning debate can be found in Sun et al[90].) These efforts included meaningless fillers to enable complex thinking[91] and contrastive reasoning[92], and they aimed to enable advanced reasoning skills such as abstract, systems, strategic, and reflective thinking[93]. Recent advancements in natural language processing (NLP) have centered around enhancing large language models (LLMs) using novel prompting strategies, particularly through the development of structured prompt engineering. Techniques like Chain-of-Thought, Tree of Thoughts, and Graph of Thoughts, where a graph-like structure guides the LLM's reasoning process, have proven effective[94]. This approach has markedly improved LLMs' abilities in various tasks, from complex logical and mathematical problems to planning and creative writing[95]. Additional training that allows models to abstract more nuanced knowledge has also proven effective[96]. As the strength of models advances, this debate will continue, and a new benchmark, CaLM (Causal Evaluation of Language Models), has been developed to assess reasoning claims[97]. As we will discuss, adding vision and other capabilities that make the models “multimodal” further enhances their abilities.

Leading computer scientists expect that within three to ten years AI will be equipped to perform such complex reasoning and learn to plan based on goals[98]. AI reasoning models are rapidly advancing, with key players like OpenAI, Google DeepMind, and Cohere releasing new models with enhanced capabilities. OpenAI has launched o3-pro, improving coding, science, and complex problem-solving. Google introduced Gemini 2.0 Flash Thinking Experimental, focusing on multimodal tasks and structured problem-solving, as well as Gemini 2.5 Pro. Cohere has also updated its Command R model series, enhancing its abilities in planning, tool querying, and question-answering. These models break down prompts, analyze contexts, and synthesize responses, marking a significant step towards AI systems that can handle complex tasks with improved accuracy. AI systems are increasing their "thinking time" by breaking down complex questions into smaller tasks and integrating knowledge from various sources to make logical connections and synthesize information across different domains.
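To make the simplest of these prompting strategies concrete, the sketch below contrasts a direct prompt with a Chain-of-Thought prompt. It is a bare illustration: the wording of the prompts, the helper names, and the hand-written `sample_output` are all assumptions for this example, and no model is actually called.

```python
def direct_prompt(question: str) -> str:
    # Baseline: ask for the answer with no intermediate reasoning.
    return f"Question: {question}\nAnswer:"


def chain_of_thought_prompt(question: str) -> str:
    # Chain-of-Thought: ask the model to write out intermediate steps first.
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, then give the final answer "
        "on a new line starting with 'Answer:'."
    )


def extract_answer(model_output: str) -> str:
    # Take the text after the last 'Answer:' marker.
    return model_output.rsplit("Answer:", 1)[-1].strip()


q = "A class has 28 students in 4 equal groups. How many students are in each group?"
print(chain_of_thought_prompt(q))

# Hand-written stand-in for what a model might return.
sample_output = "28 students divided by 4 groups is 7 per group.\nAnswer: 7"
print(extract_answer(sample_output))
```

Tree of Thoughts and Graph of Thoughts build on the same idea by generating several candidate reasoning steps, scoring them, and exploring the most promising ones rather than following a single chain.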

It’s Not Just “Hype”

Some find comfort in claims that this is all “hype” and that education, for example, hasn’t yet been “transformed” by AI. But as Jerome Pesenti notes, “I don’t think Gartner’s hype cycle applies here. Generative AI is taking education by storm because the consumers, the students, are adopting the technology on their own – not through their schools or their teachers or any kind of edtech B2B offering[99].” ChatGPT, and many other applications, are already displaying impressive abilities as a tutor[100] and homework completion assistant. The current educational system, like many legacy companies, may very well never adapt to the AI world, but that simply means it won’t survive, as it is replaced by new approaches.

And it’s not just in schools. As Armand Ruiz, VP of Product – AI Platform, noted in May of 2024: “AI is not hype. At IBM we've completed 1,000+ Generative AI projects in 2023, prioritizing business applications over consumer ones[101]. These include projects in Customer-facing functions; HR; finance; Supply-Chain functions; IT development and operations; and core business functions[102].” JP Morgan just unveiled IndexGPT with the help of OpenAI to create thematic indexes for investments[103]. The band Washed Out released the first official, artist-commissioned music video made with OpenAI’s text-to-video tool[104].

Current abilities alone are beginning to disrupt education, work, and day-to-day life[105]. Even if AI never advances beyond its current capabilities, it will radically change our world[106]. Current AI “tools” can already replace many job functions and generate images and video that are often indistinguishable from reality to the “naked eye.” They have already “demonstrated expert-level performance at tasks requiring creativity, analysis, problem solving, persuasion, summarization, and other advanced skills[107].” Texas has replaced 4,000 human scorers of its STAAR written tests with AIs[108], and others envision robotic snails supporting infrastructure maintenance[109]. The Secretary of the Air Force flew in a plane without a pilot, and the military plans on having more than 1,000 pilotless aircraft in the skies by 2028[110]. AI can automatically generate and test social science hypotheses even though most people do not know how to do that[111]. Companies are investing resources in developing AIs that can engage in autonomous scientific discovery[112].

Human Intelligence Slips

While machine intelligence grows, we also see disengaged students who are disappearing from school. The scores of humans on the knowledge-based exams that we often use to measure our students’ intelligence, our “proxy for educational quality,” are declining while the scores of machines on those same exams rise[113]. AI can grade the STAAR exams many students can’t pass. It can automatically generate and test social science hypotheses even though most people do not know how to do that[114]. We have students entering the workforce without the skills and capabilities employers need, while employers increasingly turn to machines to complete work humans previously did.

The simple reality is that the educational system, largely speaking, has no plan to prioritize developing students’ intelligence so they can live and work in a world of highly intelligent machines. Only a small percentage of the nation’s students are receiving training related to understanding or using AI[115], a technology that will completely define their future. Despite JP Morgan training every new hire on AI, only twenty-six percent of the nation’s teachers have been trained on it[116].

Preparing Students for the AI World vs Using AI in the Classroom

And even training on AI tools can be question-begging, as we must first decide how best to prepare students for the AI World before deciding how to maximize AI tool use in the classroom. If we launch straight into “AI-tool training,” we assume we already agree on what success in an AI-saturated society looks like. Yet that vision is precisely what is still unsettled. Do we want graduates who merely operate today’s apps, or citizens who can interrogate algorithms, remake them, and decide when not to use them? Until the destination is clear, prescribing any particular suite of classroom tools amounts to building the ship before choosing the port.

Recent policy work underscores the stakes. UNESCO’s 2024 AI Competency Framework for Students asks ministries first to define the human capacities (ethical reasoning, socio-emotional judgement, systems thinking) that will remain non-automatable, and only then map tools to those aims[117]. Likewise, the OECD’s Future of Skills initiative argues that curriculum must pivot from discrete “how-to-code” objectives toward “learning-to-learn” agility, so that students can ride successive waves of model upgrades rather than drown beneath them[118]. Put differently: decide what flourishing means in an AI world, then reverse-engineer the pedagogy and hardware.

Framing the problem this way surfaces three design choices every system now faces:

1. Shallow vs. deep integration – Will AI remain a productivity add-on (drafting lesson plans, grading quizzes), or will it become an intellectual partner that reshapes inquiry-based learning and student agency? UNESCO’s 2024 guidance on generative AI calls the second path “human-centred augmentation,” but pursuing it demands new assessment models that value judgement, collaboration, and originality over speed.

2. Core literacies vs. just-in-time skills – Teaching prompt-craft today is useful; teaching epistemic humility, data ethics, and multi-modal reasoning prepares students for tools we cannot yet name. Cutting-edge devices will change yearly, but dispositions travel.

3. Equity architecture – Tool-first approaches often lock schools into proprietary ecosystems, widening the gap between well-funded districts and everyone else. Outcomes-first planning begins by asking what ALL students need to thrive, then leverages open resources, accessible interfaces, and culturally responsive examples to meet that bar.

Seen through this lens, “how to best prepare students for the AI World” is not a side issue; it is the curriculum question of our era. Everything else, whether we teach prompt engineering, adopt a new chatbot, or allocate a third of class time to AI studies (as some economists now urge[119]), flows from that upstream decision. Clarify the destination, and coherent choices about tools, teacher training, and policy will follow; skip the step, and education risks chasing every shiny model while leaving learners unmoored in the very world we claim to be preparing them for. Professor Rose Luckin recently noted:

The Critical Question for me is: Are we building students' capacity to thrive in an AI-integrated world, or are we simply making traditional education more efficient? The technology is advancing rapidly, but we need to be intentional about whether we're using AI to enhance 20th-century educational models or to prepare students for 21st-century realities. The stakes are too high to simply optimize the status quo[120].

Preparing students for this world should not be a controversial idea. It’s no different from preparing students in the 19th century, at the end of the agricultural era, to thrive in the 20th century industrial era. We stopped teaching young people how to use oxen and horses to plow fields and taught them how to use steam-powered tractors and threshing machines. We should first think through what and how we want to teach to prepare students for an AI world before we rush into using AI as much as possible in the classroom. We certainly don’t want to use AI to help students learn how to use oxen and horses to plow fields.

In a recent article in Forbes, Allison Salisbury notes that workers earning less than about $38,000 a year are 14 times more likely to lose their jobs to all types of automation. In response, she’s seeing a renewed focus on durable skills from large employers. Durable skills are those that she describes as “less about what you know, and more about how you learn and work in the world. They involve things like self-management, working with others, and generating ideas. They cross every imaginable career, and as the name implies, they’re skills that should serve you well no matter what AI-fueled world ultimately serves up[121].”

Artificial Intelligence as More than a Technology

Unlike any tool that has come before, AI is constantly evolving and improving itself. Fed with vast amounts of data, AI systems uncover hidden patterns, make predictions, and generate insights that surpass the limits of human cognition. They learn from every interaction, every success, and every mistake, growing smarter and more capable with each passing day.

Through the magic of natural language processing, AI can understand and communicate with us in our own words, providing real-time support and engagement across every sector. Whether it's a virtual assistant helping you navigate a complex healthcare system, a financial advisor optimizing your investment portfolio, or a tutor helping you understand your math, AI is there, ready to lend its unique blend of intelligence and adaptability. And unlike a Smart Board, it can make decisions, solve problems, and, with emerging AI agents, act on its own. Want to return your shoes? Just tell the AI and it will know to look up where you got them in your emails, find the return instructions, and print the shipping label[122]. We’ve never had a technology like this, and it is certainly more than a paper mill.

Scholars and other leaders are already arguing that simply thinking of this as a technology is inadequate. Wharton professor Ethan Mollick has referred to it as a non-sentient “alien mind[123]” and argues we should “treat AI as if it were human because, in many ways, it behaves like one[124].” Mustafa Suleyman argues that thinking about it solely as a technology fails to capture its abilities. Instead, he argues, “I think AI should best be understood as something like a new digital species…I predict that we'll come to see them as digital companions, new partners in the journeys of all our lives.”

As acknowledged by Mollick, this perspective highlights the need to be cautious about anthropomorphizing artificial intelligence systems. While AIs may exhibit human-like behaviors and capabilities in many ways, it is crucial to recognize that AIs are fundamentally a different form of intelligence, one created by human ingenuity rather than a biological, sentient being. Anthropomorphizing AI risks underestimating the profound differences between these "alien minds" and human cognition. At the same time, failing to appreciate AI’s advanced capabilities and treating it merely as inanimate technology is also misguided. The truth likely lies somewhere in the middle: AIs are a new kind of entity, one that blurs the line between human and machine. As they continue to evolve and become more integrated into human lives and endeavors, developing an appropriate understanding of, and set of ethical principles for relating to, artificial intelligences will be crucial. While some may consider such advances to be futuristic science fiction, the level of technology we have is already impressive.

Artificial Intelligence as More than “EdTech”

The framing of AI merely as an "edtech" product significantly underestimates its revolutionary potential and explains why many academic institutions are struggling to adapt appropriately. Traditional educational technology tools are static instruments with defined parameters - they're created to solve specific problems within established educational frameworks. But AI represents something fundamentally different: an evolving, adaptive intelligence that continuously improves through interaction. By treating AI as just another tool in the edtech toolkit (like smart boards, learning management systems, or digital textbooks), academic institutions are applying outdated mental models to a qualitatively different technology.

Unlike traditional edtech that simply executes commands, AI can actively participate in the learning process - challenging assumptions, offering new perspectives, and adapting to individual learning styles. The educational impact of AI extends far beyond formal learning environments, blurring the boundaries between classroom and real-world learning and creating opportunities for continuous, contextualized education. AI doesn't fit neatly into existing academic silos; its true potential emerges when integrated across disciplines, requiring institutional structures that facilitate collaboration. As Mollick suggests, we're dealing with something that behaves in human-like ways while remaining fundamentally different - an "alien mind" that requires new frameworks for understanding and integration. The most forward-thinking academic institutions will move beyond viewing AI as merely another educational technology product and instead recognize it as a transformative force that requires reimagining core educational practices, institutional structures, and even the fundamental relationship between humans and knowledge.

Consciousness

Joseph Reth of Lossless Research is working on creating artificially conscious systems to unravel the mysteries of human consciousness. The implications of such technology would be profound. According to Reth, "Artificially conscious systems will be able to engage with the world in a fundamentally different way, driven by an intrinsic desire to learn, explore and understand, rather than just optimizing for specific tasks." Transitioning consciousness from a philosophical concept to a scientific pursuit represents a significant shift in thinking. "The mission to construct artificially conscious systems is incredibly meticulous," Reth explained. "We're constantly grappling with the challenge of ensuring our systems can perceive the world in a manner that mirrors human consciousness while also navigating critical ethical considerations." Despite the challenges, Reth remains optimistic: "I believe artificial consciousness is possible, and in the next few years, we will see AI systems that are serious candidates for consciousness[125]."

So, the question is when AI will exceed human intelligence in all domains, not whether it will (Hinton). These advances, combined with accelerating developments in virtual and augmented worlds, 5/6G, blockchain, and synthetic biology, are leading us into a “social-technical revolution that will dramatically change who we are, how we live, and how we relate to one another[126].” The world of 2044, when our students are adults, will be nothing like the world of 2024. Nothing at all.

AI, Future of Humanity, and the Future of Knowledge

In the coming era of exponential technology, artificial intelligence is poised to become not just a tool but a co-evolutionary partner in human destiny – an extension of our minds and bodies that could fundamentally redefine what it means to be human. Visionaries like Ray Kurzweil foresee a technological singularity where the boundary between man and machine dissolves: tiny neural nanobots may connect our neocortex to a cloud of intelligence, merging biological and artificial thought into a unified whole[127]. Even today, Elon Musk’s Neuralink aspires to this symbiosis – implanting brain chips so that humans won’t be left behind by superintelligent AI[128]. Such integration promises to amplify our capabilities beyond natural limits, opening doors to realms of cognition and creativity previously unimaginable. With AI augmenting our brains, we could experience an expansion of consciousness – Kurzweil suggests that a connected brain could vastly “expand our palette for emotion, art, humor, creativity,[129]” leading to new depths of genius and individuality. At the same time, this intimate fusion of human and machine forces us to grapple with profound questions: when our thoughts are co-processed by algorithms, or our memories backed up to the cloud, where does “self” end and technology begin? The very notions of identity and consciousness blur in this brave new reality – are you still you if your mind is partly digital?[130]

Philosophers and futurists from Yuval Noah Harari[131] to Max Tegmark[132] and Nick Bostrom[133] have explored these transformative possibilities, debating whether AI will erode our humanity or elevate it to new heights. Harari, for instance, envisions Homo Deus – humans ascending to godlike status by harnessing biotechnology and AI. Indeed, transhumanist thinkers embrace the idea that AI could usher in an era of augmented beings and even digital immortality: by merging with AI, humans wouldn’t be supplanted but transcended, perhaps attaining ageless, omniscient, near-divine capacities. This provocative vision of human-AI convergence is imbued with both poetic optimism and philosophical depth. It imagines a future in which AI is not an alien antagonist but the next stage of human evolution – a digital symbiont entwined with our very essence, amplifying our intellect and spirit, and propelling us into uncharted realms of consciousness and meaning. Each step toward this future challenges us to reimagine ourselves, as humanity stands on the cusp of becoming something more than human, hand in hand with our own intelligent creations.

The moment neural nanobots splice cloud cognition into our synapses, knowledge ceases to be something we acquire and becomes an atmosphere we breathe. Kurzweil’s forecast of cortex-to-cloud links converges with today’s rapid AI breakthroughs to create a living exocortex in which discovery and learning unfold at the speed of thought. Agentic research suites such as Microsoft Discovery already automate hypothesis generation and simulation, compressing years of benchwork into days[134], while physics-informed generative models at Cornell sketch novel materials as easily as we now sketch ideas[135]. A new “AI-for-Science” paradigm is emerging in which algorithms jointly reason, experiment, and iterate, recasting the scientist as conductor of an orchestra of synthetic intellects[136]. On the learning front, intelligent tutors that map every learner’s quirks are shifting education from broadcast to whisper, leveling obstacles for dyslexic and neurodiverse students alike[137] and forecasting bespoke learning paths before a question is even asked[138]. When these systems fuse with brain-computer interfaces[139] and the ambient datasphere envisioned by Tegmark[140], research and schooling give way to continuous co-creation: learners converse with an ever-present cohort of AI “co-selves,” and the frontier of human understanding expands not by passing books hand to hand but by streaming insight neuron to neuron—rendering the library, the laboratory, and perhaps even language itself into relics of a pre-merged mind.

Policymakers and Educators Respond

Policymakers are certainly starting to pay attention to AI’s growing capabilities. In early November 2023, an “AI Safety Summit” involving industry leaders and government officials kicked off in the UK[141] and the U.N. launched a high-level body on AI[142]. In early December, the EU adopted the EU AI Act[143], which was approved by its members in May[144]. In the US, on October 30th, 2023, the Biden administration issued a comprehensive Executive Order[145] that covered many areas related to concerns about AI that have been expressed in numerous meetings[146] with lawmakers and in Congressional testimony. In April 2024, a new Artificial Intelligence Safety and Security Board was also established by the Department of Homeland Security to evaluate the utilization of AI technologies in critical infrastructure systems.

A bipartisan group of Senators released long-awaited guidance on an AI legislative roadmap, Driving US Innovation in Artificial Intelligence[147], for the fall of 2024. Their priorities include:

· Boosting funding for AI innovation

· Tackling nationwide standards for AI safety and fairness

· Using AI to strengthen U.S. national security

· Addressing potential job displacement for U.S. workers caused by AI

· Tackling so-called “deepfakes” being used in elections, and “non-consensual distribution of intimate images”

· Ensuring that opportunities to partake in AI innovation reach schools and companies

Upon returning to office, President Trump quickly moved to dismantle the AI protections and policies established by the Biden administration, signaling a shift towards unregulated AI development. Trump's actions include revoking Biden's executive order on AI and initiating the development of a new AI action plan. The Trump administration's approach favors less regulation to encourage innovation, addressing problems as they arise.

Key aspects of the Trump administration's reversal of AI policies:

Rescinding Biden's AI Executive Order. Trump revoked the "Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence" executive order issued by President Biden in 2023. This action eliminated the safeguards and requirements for responsible AI development and deployment that the Biden administration had put in place.

Halting Implementation of AI Guidance. Trump's rescission extended to the National Institute of Standards and Technology’s AI Risk Management Framework and the Office of Management and Budget’s (OMB) guidance on the management of AI systems used by federal agencies.

New AI Action Plan. Trump directed the development of a new AI action plan to promote American AI dominance. This plan is to be developed within 180 days by White House officials, including David Sacks, the special advisor for AI and crypto. The aim is to create AI systems "free from ideological bias or engineered social agendas."

Revising OMB Memoranda. The Director of the OMB, in coordination with the Assistant to the President for Science and Technology, is required to revise OMB memoranda on federal AI governance (M-24-10) and federal AI acquisition (M-24-18) to align with the new policy.

Emphasis on Deregulation. The Trump administration is emphasizing minimal barriers to foster AI innovation and maintain U.S. leadership in the field. This approach involves close collaboration with tech leaders, including the appointment of a tech investor and former executive as the White House AI & Crypto Czar.

Reliance on the Private Sector. Trump's policy resets AI to rely on the private sector. The lifting of Biden’s guardrails and the six-month wait for a new action plan have companies in areas ranging from health care to talent recruitment wondering how to proceed.

The Trump administration's approach has raised concerns about the potential for unchecked AI development and deployment, especially since we could see the development of AI at or beyond human level that could set its own goals within the time frame of Trump’s presidency. Without strong guardrails, AI tools could create real-world harms in sensitive decision-making processes, such as hiring, lending, and criminal justice. Some argue that the emphasis on speed and deregulation could lead to unfair or inappropriate AI applications.

In response to the federal government's policy shift, state legislatures are considering AI legislation to address issues such as chatbots, generative AI transparency, and the energy demands of AI development.

States such as Pennsylvania are getting involved in AI regulation and policymaking.[148] At the local level, Seoul[149], New York City[150], and Singapore[151] (a “city-state” that just rolled out a learning bot that supports student-teacher-AI collaboration for 500,000 students[152]) have launched major initiatives around AI.

At the education level, seven states have issued AI Guidance reports, which we summarized and analyzed in a recent report of our own[153]. A few districts have followed with their own guidance documents, including the Santa Ana Unified School District (CA), whose document we had the opportunity to draft.

The Need to Support Educational Institutions

The intelligence of machines has risen exponentially, pushing us toward human-level robotics in both mind and body, and efforts to manage and integrate that intelligence are under way. Yet we do not see “colossal firepower” and astronomical financial resources being invested in developing and amplifying human intelligence in the ways needed for our students to collaborate productively with people and intelligent machines. Even under the Biden administration, the few million dollars committed to helping schools adapt to this era paled in comparison to the hundreds of billions that have been invested in creating it. Now, Trump is gutting the Department of Education, the institution through which most money usually flows.

Many of America’s tuition-driven colleges and universities, which are also under fire due to Trump administration cuts, will also need support, not only to help integrate AI into their schools but to engage fundamental questions about how education will need to be restructured for a world where machine intelligence is at least competitive with human intelligence and machines will be able to do many current jobs for a fraction of the cost of an employee.

A growing body of evidence suggests that the Class of 2024-25 is colliding with a shrinking rung on the career ladder: AI now performs many of the rote tasks that once justified hiring juniors. LinkedIn analysis notes that “true” entry-level listings made up barely 2.5% of U.S. tech postings by April 2024, down sharply as firms lean on generative-AI tools and senior staff augmented by co-pilots instead of onboarding novices[154]. Meanwhile, a June 2025 Business Insider investigation finds 41.2% of recent graduates underemployed, with economists warning that AI-driven automation is erasing starter roles in fields from communications to paralegal work and leaving young job-seekers “barreling toward a career cliff[155].” The British telecom company BT Group is considering even deeper job cuts as automation accelerates[156].

While bills such as this will not pass in the current political climate, they are worth considering. On May 23, 2024, Senators Jerry Moran (R-KS) and Maria Cantwell (D-WA) introduced the NSF AI Education Act of 2024[157], a bipartisan bill that aims to bolster the U.S. workforce in AI and related emerging technologies like quantum computing.

The key provisions include:

■ Authorizing the National Science Foundation (NSF) to award scholarships and fellowships at various levels for studying AI, quantum computing, and blended programs, with a focus on fields like agriculture, education, and advanced manufacturing.

■ Establishing AI "Centers of Excellence" at community colleges to collaborate with educators on developing AI instructional materials.

■ Directing NSF to develop guidance and tools for introducing AI into K-12 classrooms, especially in rural and economically disadvantaged areas.

■ Launching an "NSF Grand Challenge" to devise a plan for educating at least 1 million workers in AI-related areas by 2028, with emphasis on supporting underrepresented groups like women and rural residents.

■ Providing grants for research on using AI in agriculture through land-grant universities and cooperative extension services.

The overarching goal is to expand educational opportunities and workforce training in AI from K-12 through graduate levels to maintain U.S. leadership in this transformative technology across various sectors.

Universities and K-12 Schools Start to Act

US Universities

Across U.S. higher education, whole systems and flagship campuses are racing to embed AI in everything from coursework to statewide research infrastructure. In California, the 23-campus CSU just unveiled a system-wide “AI-Powered Initiative” that gives its 460,000 students and 63,000 faculty access to generative tools and professional-development modules[158], while the University of California is backing large-scale basic science with $18 million in new grants and institutes such as UC Riverside’s RAISE, which spans robotics, cybersecurity, and social-impact research[159]. New York’s SUNY network has adopted the STRIVE strategic plan and, with a fresh $5 million appropriation from Governor Hochul, is creating eight campus-based Departments of AI & Society and a shared “Empire AI” resource to democratize computing power for students across the state[160].

George Mason University is positioning itself as a hub for “inclusive, responsible AI,” blending research, workforce skilling, and community engagement from its Northern Virginia campus[161]. Florida State’s interdisciplinary data-science program now anchors an annual AIMLX expo that spotlights classroom and industry AI projects across the Southeast[162]. Penn State’s AI Hub unites ethics, education, and outreach through its spring “AI Week,” and the University of Pennsylvania’s month-long “AI and Human Well-Being” series convenes seminars and hackathons across medicine, engineering, and the humanities as part of its AI Hub[163]. Ohio State recently announced that every student will be required to use AI in class[164]. Wharton recently announced Wharton Human-AI Research[165]. The University of Mary Washington is working on a Center for the Humanities and AI.

US K-12

By mid-2025 the United States has experienced a rapid shift from ad-hoc experimentation to formal rule-making around classroom AI. A running tracker from TeachAI shows that 26 state education agencies now publish stand-alone AI guidance or frameworks, up from just six a year earlier[166].

At the local level, the Center on Reinventing Public Education estimates that roughly 20% of the nation’s 13,000 school districts have approved AI-use or procurement rules[167], with momentum accelerating as generative tools proliferate. Federal attention is reinforcing the trend: a White House initiative launched in spring 2025 is pairing competitive grants with public-private toolkits to push AI literacy into every classroom by 2026, prompting districts to codify acceptable-use and data-privacy expectations sooner rather than later[168].

Districts that started early are now seen as national templates. California’s Desert Sands USD posts bilingual AI Guidance documents that embed “human-first prompting” checklists and professional-learning modules for staff, all tied to its Portrait of a Graduate competencies[169]. Ohio’s Department of Education and Workforce, working with InnovateOhio and aiEDU, released an AI Toolkit and a statewide strategy that require every district to name an AI lead and offer staff training by fall 2026[170]. Michigan Virtual’s new partnership with aiEDU is running a year-long train-the-trainer program expected to coach 500 teachers and seed classroom pilots across all 900-plus Michigan districts[171].

Alternative school structures are also emerging. Alpha School’s microschools in Texas and Florida compress core academics into two AI-tutor-driven hours, freeing afternoons for projects and mixed-age mentoring[172]. The Innovation Academy of Excellence, opening this August on the Tallahassee State College campus, is billed as Florida’s first “AI-integrated” middle school, with ethical AI tools woven into every STEM-rich lesson.

Action Outside the US

The UAE is making artificial intelligence a compulsory subject from kindergarten through Grade 12 in 2025-26, pairing age-appropriate coding with explicit ethics lessons for roughly 400,000 public-school pupils[173]. In the United Kingdom, the Department for Education has issued detailed “Generative AI in Education” safety guidance and a free professional-development toolkit so teachers can embed large-language-model tools while protecting data and academic integrity[174]. Singapore’s new “AI for Fun” electives (five- to ten-hour, hands-on modules that will be available in every primary and secondary campus from 2025) already give more than 50,000 students a year a playful introduction to machine learning[175]. South Korea is scaling a nationwide network of AI high schools and rolling out adaptive digital textbooks to personalize learning and ease cram-school pressure[176]. Japan’s updated school guidelines come with new textbooks that encourage practical generative-AI projects while warning about bias and misinformation[177]. Australia’s June 2025 national program will weave baseline technical and ethical AI skills into every classroom, backed by university–industry partnerships and teacher upskilling[178]. In Canada, the Alberta-based Amii institute is distributing free K-12 AI-literacy kits, coaching sessions, and classroom visits to help teachers integrate AI “across the timetable[179].” India’s CBSE board, which oversees more than 20,000 schools, has told classes 6-12 to adopt AI skill modules immediately so that students graduate with vocational credentials in areas such as data science and coding. Together, these initiatives signal a shared global judgment: helping students co-create with, and critically oversee, AI systems is now a core duty of general education, not a niche tech elective.

Human Deep Learning to Prepare Students for an AI World

This book emphasizes the importance of changing educational approaches to incorporate academic human deep learning, highlighting the importance of learning fundamental knowledge, cultivating expertise, and developing critical thinking skills rooted in real-world experiences. This can support the “social basis of intelligence and human development,” the “interpersonal activity that is essential to human thinking…as advanced human intelligence...the type of intelligence that we need as we progress through the 21st century, an intelligence that is human that emanates from our emotional, sensory, and self-effective understanding of ourselves and our peers[180].” As Stanford computer scientist and “Godmother” of AI Fei-Fei Li explains:

Simply seeing is not enough. Seeing is for doing and learning. When we act upon this world in 3D space and time, we learn, and we learn to see and do better. Nature has created this virtuous cycle of seeing and doing powered by “spatial intelligence.”… And if we want to advance AI beyond its current capabilities, we want more than AI that can see and talk. We want AI that can do… As the progress of spatial intelligence accelerates, a new era in this virtuous cycle is taking place in front of our eyes. This back and forth is catalyzing robotic learning, a key component for any embodied intelligence system that needs to understand and interact with the 3D world[181].

Shouldn't “human education” create a virtuous cycle of seeing, learning, and doing?

And this is not only Fei-Fei Li’s idea. Many of the world’s leading computer scientists (Hinton, LeCun, Bach, etc., as will be discussed), neuroscientists[182], and psychologists[183] believe we need computers to develop “worldly knowledge” to develop “common sense[184]” and, eventually, higher-order reasoning[185] so they can plan[186], predict[187], and talk about the world[188]. Planning, predicting, and developing higher-order reasoning abilities require that they anticipate potential future possibilities and make choices from among them, the same way that humans make decisions[189].

These leading scientists believe it is possible to incorporate the human “lived experience” that children develop through “active, self-motivated exploration of the real external world[190]” into machines, making it possible for them to achieve human-level intelligence. Some (Altman, Murati, Sutskever, Bach; cited elsewhere throughout this book) believe this could potentially be accomplished through current[191] multi-modal models[192] supported by text, audio, and video (vision)[193], but others (LeCun and Choi, cited elsewhere in this book, and Yiu et al.[194]) believe a greater focus on new, objective-driven “world models” is needed.

A shift toward greater experiential learning will be a challenge, as education continues to be grounded in theories that became dominant at the turn of the 20th century, over 100 years ago: students learn facts and knowledge and, since the 1990s, are asked to think about and analyze that knowledge. Due to this gap between how students are taught and how the world works, students are ill-prepared for a rapidly changing world and have been for quite some time[195].

We only have ourselves to blame for this situation. While others worked to advance intelligence in machines, we did not pay attention or begin efforts to change. While scientists and industry worked on deep learning models that enabled machines to learn largely on their own, using principles of initial supervised learning and then self-learning combined with fine-tuning and reinforcement learning from human feedback[196] (in a classroom, we think of this as a “guide on the side[197]”), education largely continued along the trajectory established in the 1920s and reinforced by A Nation at Risk in the early 1980s, relying largely on a “supervised,” sage-on-the-stage[198] teaching process that fills students’ heads with more predetermined content, insists they reorganize it in some manner, and then tests them on it. As a result, we keep teaching students to do what machines can do best and will forever be better at than us: learning a lot of content, analyzing it, and passing tests. Almost ironically, some students pass these tests merely by predicting the answer the teacher expects and parroting it back, without necessarily needing any level of understanding. This is the very behavior critics are quick to fault in today’s AIs, even though their abilities to “understand” are arguably starting to develop and almost certainly will exist in the future[199].

Today, however, the stakes are much higher, as the number of jobs that exist for high school and college graduates who have not developed higher-order thinking skills will be very limited. A substantial number of lower-level administrative and factory/warehouse jobs will be lost to automation, and even mid-level management jobs will be at risk as machines learn how to reason and plan. The demand for employees who are capable of higher-order thinking and have the skills needed to interact with both humans and intelligent machines[200] will be difficult to meet without fundamental adaptations by the educational system[201]. Ultimately, teams solve hard problems[202], and now those teams include AIs.

Photo from Fei-Fei Li’s talk cited above.

Students who are educated through the deep learning approaches we advocate, who develop AI literacy, and who have experience with human-AI collaboration will be better prepared for both the present and the future. In the future, an estimated 60-100% of jobs will involve using AI tools, and many current jobs could be entirely replaced by AIs. To prepare students for the future, education can no longer ignore these bots that “have evolved from being topics in intellectual discussions to challenging realities[203]” and instead must help students develop the metacognitive capacities and AI skills needed to interact with them.

Students (and all of us) need to strengthen our abilities to collaborate with one another, both as co-pilots and as all “forms” of co-workers. At the current level of technological advancement, AIs depend on us to co-pilot with them, but as they become more autonomous and develop their own agency, the same way we hope our students will, we will also need to communicate and collaborate with them[204].

The book stresses the importance of prioritizing academic programs that foster human deep learning and integrate AI tools, particularly in ways that encourage collaboration between humans and AI agents to exchange, enhance, and preserve knowledge. This kind of human-computer interaction will elevate human intellectual abilities, helping individuals flourish even in a future where machines are likely to surpass human cognitive capacities. The book suggests that these advancements in AI may occur quickly, potentially by the time today's ninth graders graduate from high school.

In addition, schools need to nurture fundamental human qualities such as courage, kindness, love, and patience, traits vital for success in a world dominated by highly intelligent machines. As AI continues to evolve, the landscape of civilization is transforming rapidly, and AI may soon surpass human capabilities in many areas. As a result, traditional jobs in fields like law and medicine may become obsolete as AI becomes able to perform these tasks more accurately and efficiently. This shift calls for a complete rethinking of educational approaches, moving away from outdated systems designed for another era. Without such change, we risk utilizing AI tools merely to scale educational techniques from the early 20th century that are ill-suited for a future shaped by intelligent machines. To thrive, individuals must learn to adapt independently in a world of constant change, where AI challenges both human employment and intellectual dominance.

The book underscores the need to prioritize academic programs that cultivate deep human learning while also integrating AI tools in ways that allow humans and AI agents to preserve, exchange, and improve knowledge. By promoting such human-computer interaction, we can amplify every facet of human intelligence, ensuring that people continue to thrive even though machines may soon surpass our intellectual capabilities in virtually all domains—possibly within just a few decades, or even by the time today’s first graders finish high school. In addition, we must recognize that competing directly with AI is futile: as AI’s proficiency in tasks like fact-checking grows, the job market for human AI fact-checkers will likely vanish, forcing us to focus on the uniquely human strengths that machines struggle to replicate.

These strengths include qualities such as courage, kindness, love, and patience—traits that will be essential for human success regardless of AI’s progress in mimicking or exceeding our intelligence. To foster these attributes effectively, we must overhaul the outdated “grammar of education,” rather than merely scaling up early twentieth-century methods with modern technology. Indeed, clinging to antiquated approaches is inadequate for a future in which we live and work alongside multiple machines that possess superior intelligence and where the pace of change is relentless. Only by reimagining education can we equip individuals with the capacity for continual self-directed learning, empathy, and resilience—elements that remain irreplaceably human.

We recognize that educational leaders who push deep learning approaches grounded in experiential learning and self-learning, and who help students learn with AI tools, are often met with both resistance (“I have no voice in the curriculum and feel that all the teacher-autonomy is being choked out of us[205]”) and lip service, leaving students and parents to hold bake sales to fund important deep learning opportunities like debate teams, bands, and robotics clubs[206], while funding is invested in traditional classroom approaches that focus on learning content and do not correlate with workplace preparation. Of course, this is no different from how the original deep learning researchers had to fight for funding or use their own resources to advance their deep learning approaches to AI[207], but as they learned, resistance is not futile, and at the end of the book we outline practical steps for positive change.

Jerry Almendarez, Superintendent of the Santa Ana Unified School District, has stated that it’s time to imagine the unimaginable for education. It is time for that. It’s time for what we call a “moonshot,” not just for AI[208] but for education as well[209].

Chapter Overview

The book begins in Chapter 2 by looking at some of the fundamental ideas and “grammars” that have shaped education over the past 100 years, showing how early ideas that might have been appropriate for earlier times persisted despite a variety of social changes, including revolutionary technological advancements that reshaped society and the workplace. There we begin making our case for helping educators adapt to this era[210].

In Chapter 3, we discuss current AI capabilities, including the fact that AIs can already learn information by reading, listening, looking at photos, and watching video as the world moves on from language models to multimodal language models, which now include all of the current frontier models. Already, AI can analyze a significant amount of knowledge faster than any human, and the potential development of machines with at least human-level intelligence over the ensuing decades, or sooner, will have a significant impact on our educational system and society.

The chapter includes a discussion of how scientists believe computers need to incorporate the lived experience of humans to develop human-level intelligence. This discussion draws on concepts borrowed from cognitive science[211] that stress the importance of understanding objects with three-dimensional properties[212], scenes with “spatial structure and navigable surfaces[213],” and agents with beliefs and desires[214] that work to replicate what the reader might think of as “social knowledge[215].” Like empiricist philosophers[216] and educators who support more project-based, experiential approaches to learning, computer scientists who support the development of objective-driven AI world models argue that to achieve human-level intelligence, computers must interact with the environment, learn from it, and build models of the world.

In Chapter 4, we review the major models that are publicly accessible: ChatGPT (OpenAI), Claude (Anthropic), Gemini (Google), Llama (Meta), and Grok (X), as well as applications in semantic search such as Bing and Perplexity. We also discuss how to download open models (LM Studio) and access them through tools such as Groq.com. Finally, we look at popular integrations into the application layer such as Copilot, school “wrappers” (MagicSchool, SchoolAI, Flint, Khanmigo), and others. We conclude with a review of image and video generators.

In Chapter 5, we concentrate on challenges the educational system is dealing with, such as those brought on by the widespread consumer adoption of AI, especially generative AI. We also look at non-AI related concerns, as any suggestion for systemic change must consider how the proposed change will impact all challenges schools are facing.

In Chapter 6, we unpack how our deep learning approach to education will help students prepare to thrive in this world. Deep learning in education is an instructional approach developed in response to the changing needs of the information economy. It focuses on developing students' higher-order skills, such as critical thinking, problem solving, collaboration, and communication, that allow students to learn on their own and strengthen their intelligence. It provides students with some supervised learning but focuses on developing their capacities to learn on their own, with “fine-tuning” support and feedback from other humans and even AIs. These are the essential abilities and skills humans have needed since at least the development of the information economy, and they are now critical to adapting and thriving in a world of accelerating machine intelligence that, in a completely unpredictable way, may render any knowledge or skills learned today completely irrelevant tomorrow.

Will Richardson explains:

I think one thing we're going to have to come to grips with in education is that no amount of teaching is going to prepare children for the world that's coming at them. I mean, that's always been true to some extent. But it feels even more the reality now.

Kids are going to have to learn their way through their lives. Change is only going to speed up, and if they're relying on what they've been "taught," they'll soon find themselves struggling to keep up and to make sense of what's happening.

And all of that will be centered on inquiry. Their learning will be driven by asking relevant questions and having the dispositions, skills, and literacies to suss out the answers.

I'll say it again: If we're putting kids out into the world who are waiting to be told what to learn, when to learn it, how to learn it, and how to be assessed on it, good luck to them.

As the philosopher Eric Hoffer is thought to have written, "Learners will inherit the earth, while the learned will be beautifully equipped for a world that no longer exists."

And it's not just individual learning. We need to learn together, both as individuals and as societies. As change buffets all of our lives, our beliefs, worldviews, and our values need to be relearned collectively. And again, inquiry is the path forward.