Chapter 2. Developing Intelligence in Humans: 1765-Present (Emerging Cyborgs)

From Humanity Amplified

This is an updated version of Chapter 2 of Humanity Amplified. The updated full volume is available to all of our paid subscribers (see the link at the top of stefanbauschard.substack.com). Thanks to all of you for supporting the blog. There are now over 3,000 subscribers, and it gained exactly 100 in the last week alone.

If you are interested in helping students learn more about AI, encourage them to participate in the GlobalAI Debates. I’m also running a summer online course through NAVI education (navieducation.org, info@naviconsultingny.com) that I’m co-teaching with a business school graduate. Our focus will be on entrepreneurship, business development, and using AIs as your first employees. I’m running a similar program for middle school students in Tampa, FL the last two weeks of July.

We once named ourselves for our intelligence—homo sapiens, the wise ones. Across centuries, we built educational ecosystems to develop and transmit that intelligence in ways suited to each era—from oral traditions to industrial-age classrooms. But today, that very intelligence is being redefined, extended, and even rivaled by the machines we’ve built. In an era where algorithms generate new knowledge, where synthetic organisms challenge the definition of life, and where brain-computer interfaces blur the line between mind and machine, the old boundaries of education, identity, and agency are rapidly eroding. The question is no longer just how we cultivate intelligence, but what kind of intelligence we are preparing students for. Are we equipping them to compete with machines, collaborate with them, or become something altogether new? How will we educate individuals with hybrid intelligences?

Early Efforts to Develop Human Intelligence

For hundreds of years, humans have celebrated intelligence and focused on the question of how to advance it. The term ‘homo sapiens,’ which is how we classify ourselves as a species, means “humans” (homo), the “wise” or “knowledgeable” (sapiens). In 1758, Swedish physician and zoologist Carl Linnaeus published Systema Naturae,[i] in which he developed a hierarchical classification system for various organisms, including humans. He placed humans in the genus Homo and specifically named our species Sapiens to emphasize our unique cognitive abilities.

It is not at all surprising that we ended up classifying our species based on our intelligence, given that human societies have consistently prioritized the cultivation of knowledge and reasoning. This emphasis is evident in our educational history, which spans thousands of years. Many believe the first structured educational institution was established as far back as the Middle Kingdom of Egypt (2061-2010 BC),[ii] nearly 4,000 years before Linnaeus penned his work.

This ancient commitment to education has only intensified over time. Societies around the world have determined that education is so important that it is now compulsory in all but four countries,[iii] with the goal of creating equal opportunity for all.[iv] Providing equal and equitable access has always been a goal of education.[v] Many individuals now spend a quarter of their lives in educational institutions, and, collectively, society has invested billions of life years and trillions of dollars in improving human intelligence with the goal of advancing the quality of human life and our species.

Throughout history, we have used our intelligence to build tools that have augmented our capabilities. The earliest stone tools, marking a pivotal leap in human evolution, allowed our ancestors to hunt, cut, and process food more efficiently, leading to a shift in diet and lifestyle. The development of agricultural tools, such as plows and irrigation systems, facilitated large-scale food production, leading to settled communities and the growth of civilizations. The 15th-century invention of the printing press by Johannes Gutenberg democratized knowledge, making books more accessible and contributing to the spread of literacy, scientific knowledge, and the Reformation[vi]. The Industrial Revolution brought forth machinery and tools like the steam engine, spinning jenny, and power loom[vii], transforming manufacturing processes and fueling economic growth. Innovations in medical tools and equipment, including the microscope, stethoscope, and X-ray machine, have enhanced our ability to diagnose and treat diseases, improving healthcare and life expectancy[viii].

Developing Intelligence through Education in the Agricultural Era and the First Industrial Era (1765-1869)

For most of human history, people spent most of their time foraging for food, with their brains focused for most of the day on how to eat. Early tools, such as the hammerstone, helped them increase their productivity.

Starting in the 1700s, several innovations and improvements in farming tools and practices emerged. Notable inventions include Jethro Tull's seed drill (1701), which allowed for more efficient planting of seeds, and Andrew Meikle's threshing machine (late 18th century), which mechanized the separation of grain from stalks. These led to more sophisticated approaches to agriculture, and although the share of people working in farming declined, most people still farmed. In the late 1700s, approximately 90% of people were still focused on farming day-to-day. By the mid-1850s, it was approximately 60% of the population. Students spent most of the day on the farm, learning how to be farmers. They were the equivalent of the industrial era’s apprentices and today’s interns.

Farm children received an apprenticeship education, which involved imitating adults in the skills needed to run a family farm. Youngsters learned by watching and doing. Boys tended the animals, cleared the land, repaired machinery, and helped their fathers with the harvests. Meanwhile, girls worked alongside their mothers as they learned to cook, clean, sew, and garden. This education was not offered for the benefit of the children but because necessity required all members of the family to contribute their labor to putting food on the table and clothes on their backs[ix].

It is not easy to cleanly separate history into eras, but during this time we started to enter the first industrial revolution, in which coal and its mass extraction, as well as the development of the steam engine and metal forging, completely changed the way goods were produced. As a result, the agricultural era bled into the First Industrial Era, which also brought the first beginnings of modern schooling. Students were taught the rudimentary reading and writing skills they would need to farm and sell goods in the markets[x] that were starting to emerge.

Developing Intelligence through Education for the Second Industrial Era (1870-1968)

The transition to the second industrial era[xi] was accompanied by a greater decline in agricultural work and a movement of labor to cities in search of employment in large factories. As the shift from an agrarian to an industrial landscape began taking form, the National Education Association convened a group of educators, the Committee of Ten, led by Charles W. Eliot of Harvard University and William Harris, U.S. Commissioner of Education, to determine the direction of public education[xii]. Whether this outcome was intentional or not is widely debated; however, it is the work of this committee that laid the foundation of the traditional public-school system, outlining subject matter knowledge along with recommended years per subject area and creating a standardized approach to education. As noted in the book, they wanted a system where “every subject which is taught at all in a secondary school should be taught in the same way and to the same extent to every pupil so long as he pursues it, no matter what the probable destination of the pupil may be, or at what point his education is to cease.” That would facilitate education for the masses and shape a uniform workforce powering the era of industrialization[xiii].

This approach didn’t spring from nowhere, and it didn’t come with bad intentions. In fact, it came from three values: equality, efficiency, and supporting preparation for an industrial workforce. As in every era, values shape our future.

Advocates of this approach at the time focused on the practical need to provide education to millions of people, including newly arriving immigrants[xiv] and those who poured into cities. This was a part of the drive for “mass schooling” that characterized the progressive era[xv] of social reform[xvi]. It included a strong push to provide millions of students with equal opportunity[xvii] for education, and they had to build it all basically from scratch. Their starting place was the one-room schoolhouse, where students only learned basic literacy, and now they needed to be prepared to work in industry. To do this, they needed to learn a lot of facts: “The old education, except as it conferred the tools of knowledge, was mainly devoted to filling the memory with facts. The new age is more in need of facts than the old, and of more facts, and it must find more effective ways of teaching[xviii].” Practically speaking, theorists suggested that the only way to do this efficiently was through a common and standardized curriculum, as well as preparing teachers en masse to teach it, an approach that persists to this day[xix]. Things needed to be simple. “To go beyond what someone needed to know in order to perform that role successfully was simply wasteful.[xx]”

The dominant theories of the day reinforced these practical needs. In the early 1900s, the education system was focused on the need to teach students a lot of facts, simplify jobs, and prepare students for work in industry, especially the factory.

This push for efficiency in education[xxi] was also influenced by the dominant Taylorist management[xxii] school of thought that focused on the optimization and simplification of jobs to increase productivity. Social efficiency theory, which supported fast efforts to maximize things for society’s benefit, reinforced both Taylorist management and its application in education, and psychologists John Watson, Edward Thorndike, and B.F. Skinner created a psychological basis to support this theory[xxiii]. The needs and values of the time had a big impact on these decisions. Ahmed explains that “ideas from industrial revolution transformed into ideas for industrial education so that learners’ skills at the end of schooling met the needs of employers in factory assembly lines.[xxiv]”

Although the desire to create a “factory” model of education is heavily criticized today, it was not unreasonable for education at that time to focus on the knowledge and skills students needed to work well in a factory[xxv], just as education in the 1990s and early 2000s helped students develop information management skills. As we will see in a later section, the development of job skills is one of the reasons for the changes we call for.

This standardized approach also won out over other ideas. The competing views of John Dewey[xxvi] and his successor Charles Hubbard Judd at the University of Chicago ultimately led to schooling and learning being defined as the same. In 1896, Dewey opened the Laboratory School, where the mission was to create a school that could become a cooperative community while developing in individuals their own capacities and satisfying their own needs. Furthermore, he imagined a curriculum that would engage students in real-world problems. These ideas did not win out, but they are the foundations of ideas that we will argue for later in this book.

It is also worth noting that many point out that education has not changed in 100 years, and you will see this basic approach to education carry through for the next 100 years. This era essentially entrenched a grammar of schooling[xxvii] that created a divide between active education as a life-long pursuit and schooling, where students are immersed in traditional classroom environments from ages 5–18/21. Although some argue that system-wide change is impossible, this era proves otherwise[xxviii]: when values and interests converge and when leaders step up to initiate change, system-wide reform is possible.

Developing Intelligence in the Third Industrial Era (1969-2000) Using Second Era Education Methods

The debates of that era were largely focused on K-12 education. As technology advanced after World War II, we started to enter the third industrial era.[xxix] We had put men on the moon, developed nuclear weapons, had our first computers, and started to seriously consider the possibility of artificial intelligence.[xxx] We had more students go to college and created the GI Bill after World War II to subsidize tuition for students. Students studied, memorized, and thought, contributing to the development of the hard sciences. Federally guaranteed loans made university education possible for all[xxxi].

We pushed our education system to develop these technologies, and computer science advanced. It was a slow take-off. The education system remained focused on students learning content, with learning measured by time spent in the classroom plus standardized test results, but the information age/technology revolution enabled by that focus on science and computers after World War II began to take off. The first hard drive that had more than 2 gigabytes (2GB) of capacity was the IBM 3380 (2.52GB), which was as large as a refrigerator and cost approximately $100,000. Nonetheless, it was a radical improvement over the 1950s 5MB “disk.” Of course, storage capacity improved through the 80s and 90s with CD-ROMs, flash memory, cartridges, hard drives, compact flash disks, video discs, and zip drives. The 2000s saw flash drives, optical disks, the first 1 terabyte hard drives, and the launch of cloud services such as Dropbox and Amazon Web Services (AWS). Today, our iPhones have 500-1,000 GB of storage. Over that time, the amount of information produced and permanently recorded exploded.

In the 1970s, we started entering what became known as the information society, part of a “third wave” of technological advancement: a “post-industrial society” that followed the “agricultural revolution” and the era of large polluting factories. There was more information and more facts than one could possibly manage thanks to databases that resided on computers, local CDs, and then the internet. It seems students were supposed to learn it all, or at least learn the subset of information taught to them in school.

During this time, the United States did try to advance its educational system. As a percentage of the Gross Domestic Product (GDP), education spending rose from 2 percent in 1950 to 3.6 percent in 1971. It declined a bit in the 1970s, bottoming out at 3.1 percent in 1984.[xxxii] But education did not improve. In 1983, the government released A Nation at Risk[xxxiii], which was deliberately designed to stoke the public’s angst about the decline of the nation’s public schools and relied heavily on a decline in across-the-board standardized test scores for its critique.[xxxiv] Society’s response to the report triggered an even greater rise in education spending, and leaders pushed more of the same approach to education that they had pushed for the industrial era:

It focused on content, expectations, time in the classroom…about setting high standards and expectations in education, and that we should have the same expectations for all kids. Out of that would come the effort to create standards in each of the content areas. The report sparked a discussion about the need for extended school days, weeks and calendars…For the next 35 years, these are things that would be the focus of reform efforts in K-12…They created the framework for debate about the need for testing and accountability.[xxxv]

And it kept pushing for the second industrial-era approach. Efforts of this era expanded the demand and use of testing, trying both nationally (NAEP) and at the state level to push progress to ensure that students mastered standardized knowledge,[xxxvi] continuing the approach developed in the early 1900s.

As we saw in the previous era, there were certainly tensions as one era gave way to the next. In 1970, the top three skills employers asked for were reading, writing, and arithmetic[xxxvii], but in 1971, the US Army produced a document about the importance of developing soft skills[xxxviii]. And although the “industrial model” from the last generation dominated, critics such as Paulo Freire (1978) criticized the “banking model” of education, in which children were treated as empty vessels into which teachers deposited knowledge (AKA “facts”)[xxxix]. A bit later, in the 1993 book Teaching for Understanding, Milbrey McLaughlin and Joan Talbert wrote of their book’s proposal: “These visions depart substantially from conventional practice and frame an active role of students as explorers, conjecturers, and constructors of their own learning. In this new way of thinking, teachers function as guides, coaches, and facilitators of students’ learning through posing questions, challenging students’ thinking, and leading them in examining ideas and relationships.”

There were, of course, efforts made to strengthen the development of critical thinking and reasoning in the sea of information that educators realized students were drowning in. It was common to find articles in the early 2000s about the need to teach information management skills and critical thinking in the information age.[xl] Although the Common Core State Standards (CCSS)[xli] are often criticized for reinforcing this standardized approach to education, they did encourage students to engage in critical thinking, problem solving, and creativity[xlii]. National[xliii] and international organizations such as the Organization for Economic Cooperation and Development (OECD)[xliv] started discussing “21st Century Skills,” which include collaboration, critical thinking, and communication. Laurene Powell Jobs funded XQ: The Super School Project, a competition to reinvent schools[xlv].

The SAT encouraged educators to teach students these skills by adding an evidence-based reading and writing section in 2014[xlvi]. Students were encouraged to read information, think about it, analyze it, and interact with it in creative ways, including by writing papers and reports[xlvii]. Advocates started to push inquiry and project-based approaches[xlviii]. Today, they push these approaches not only to move away from assignments that can be easily produced by AI tools but also to develop essential durable skills such as critical thinking and collaboration[xlix].

In 2015, Congress enacted the Every Student Succeeds Act, replacing No Child Left Behind (NCLB) in an effort to shift control over school performance back to the states. The act encouraged a move away from standardization in summative assessment but still measured school success based on standardized test scores, requiring states to “test students in reading and math in grades 3 through 8 and once in high school[l].”

Since the Obama administration (2009-2017) continued Reagan- and Bush-era policies, the theme of higher standards and accountability, driven by “Race to the Top[li]” and equating school success with standardized test scores, continued. Federal funds for Title I and the Free and Reduced Lunch program would be distributed in a way that would force compliance with federal regulations[lii] that demanded progress.

While these education reforms were designed to strengthen the link between education systems and economic mobility, in many ways, the opposite happened, leading to an era of standardization driven by testing with a desire to improve “accountability[liii].” This has created an environment that nurtures the antithesis of the skills and dispositions required by the workforce in the age of the information explosion and now automation.[liv]

And the standardization doesn’t just apply to the curriculum but to the organization of the school day itself. Sir Ken Robinson explains:

Schools are still pretty much organized on factory lines; ringing bells, separate facilities, specialized into special subjects, we still educate children by batches (you know we put them through the system by age group). Why do we do that?

Why is this assumption that the most important things kids have in common is how old they are? It's like the most important thing about them is their date of manufacture. Well, I know kids that are much better than other kids in different disciplines, or different times of the day, or better in small groups than large groups, or sometimes want to be on their own. If you're interested in the model of learning, you don't start from this production line mentality.

It's essentially about conformity, and it's increasingly about that as you look at the growth of standardized testing and standardized curricula. It's about standardization. I believe we have to go in the exact opposite direction. That's what I mean about changing the paradigm[lv].

Of course, we are not arguing there has been no change in the education system; and it is certainly the case that the “factory” model can be overplayed. There is certainly less of a focus on memorization, schools have developed many non-academic approaches, educators pay more attention to how material is taught, and schools have made more and more efforts to focus on the individual. What we are arguing for is something much more radical.

As will be discussed in the section on intelligent tutoring systems, this will now happen regardless of whether brick-and-mortar schools enable it. Fortunately, today, organizations push for project-based approaches “that encourage peer-to-peer knowledge sharing and focus on real-world problem-solving with AI[lvi],” as problem-solving now and in the future involves working with AIs. There is strong evidence of the effectiveness of these approaches[lvii].

Developing Intelligence in the Fourth Industrial Era (2000-Present) Using Education Methods from the Second Era

Industry 4.0, also known as the Fourth Industrial Revolution, represents the latest phase in industrial advancement and is focused, among other things, on connectivity: connecting everyone through devices so that we can access and interact, first with information (1980-2003) and then with each other (2004 (Facebook) to the present).

Although these connections were initially facilitated desktop-to-desktop and then laptop-to-laptop, smart/mobile phone use eventually grew as well.

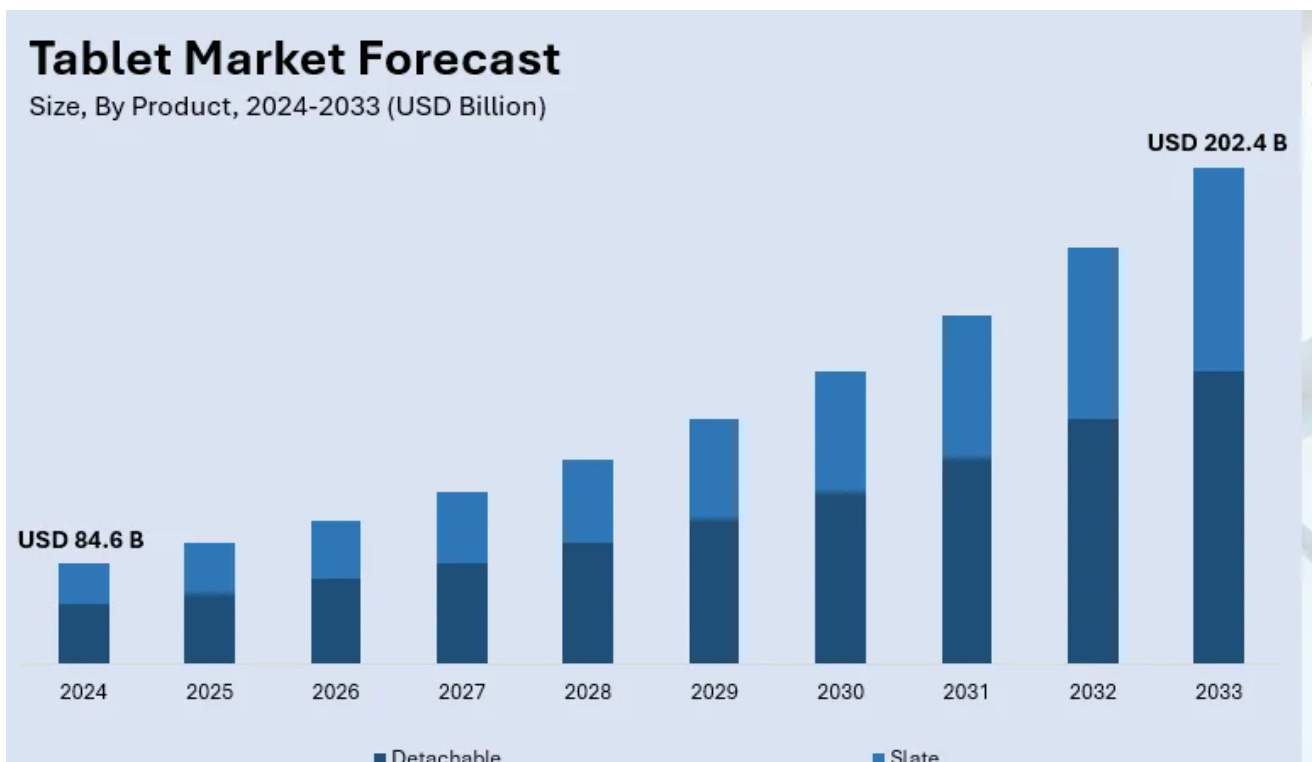

The same trend can be seen in tablets such as iPads[lix].

This is expected to continue growing.

In education, an estimated 90% of middle and high school students have these devices and 84% of elementary students have the devices[lx].

Devices such as desktops and laptops, cell phones, and tablets are all “things” that are connected to the internet and can collect, share, and receive information (the “Internet of Things” (IoT))[lxi]. The number of “things” that can connect, share, and receive information has grown, however, and now includes our cars, toys, smartwatches (e.g., Apple Watch, Fitbit), fitness trackers, smart glasses (e.g., Google Glass), health monitoring wearables, smart thermostats (e.g., Nest), smart lighting systems, smart locks, security cameras, smart appliances (refrigerators, ovens, washing machines), voice assistants (e.g., Amazon Echo, Google Home), remote patient monitoring devices, smart pill bottles, smart parking meters, fleet management devices, industrial machinery with sensors, asset tracking systems, smart agriculture equipment (e.g., moisture sensors, automated tractors), smart TVs, drones, smart speakers, smart grids, smart street lights, waste management systems (e.g., smart trash cans), water supply monitors, smart retail shelves, NFC payment systems, inventory trackers, weather stations, ocean buoys for tsunami warnings, air quality monitors, livestock wearables, agricultural drones, precision farming systems, pet trackers, smart doorbells, and connected water bottles. We now have billions of connected devices[lxii] that are collecting, sharing, and receiving information. Many of these now include supplemental vision support, including binoculars that can identify species[lxiii], and their usability can be improved by technologies such as ChatGPT[lxiv]. Imagine your lamp with a large language model[lxv].

Since these connected devices not only share information with us but also collect it, we have so many more facts for students to learn than we did in 1918, when The Curriculum was published.

This chart measures data in zettabytes. As a point of comparison, your iPhone most likely has 256-512 gigabytes of storage. There are a trillion gigabytes in a zettabyte. We generate approximately 125 trillion gigabytes (125 zettabytes) of information every year.
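The unit arithmetic in the paragraph above can be checked with a few lines of Python. This is only an illustrative sketch: it assumes decimal (SI) units, takes the chapter's approximate figure of 125 zettabytes per year at face value, and uses a 512 GB phone as the comparison point. The variable names are made up for this example.

```python
# Decimal (SI) units are assumed: 1 GB = 10**9 bytes, 1 ZB = 10**21 bytes.
BYTES_PER_GB = 10**9
BYTES_PER_ZB = 10**21

# Gigabytes in one zettabyte (a trillion).
gb_per_zb = BYTES_PER_ZB // BYTES_PER_GB

# The chapter's approximate yearly figure, expressed in zettabytes and gigabytes.
yearly_data_zb = 125
yearly_data_gb = yearly_data_zb * gb_per_zb

# Upper end of the phone storage range quoted in the text.
phone_storage_gb = 512
phones_needed = yearly_data_gb // phone_storage_gb

print(f"{gb_per_zb:,} GB per ZB")
print(f"{yearly_data_gb:,} GB generated per year")
print(f"{phones_needed:,} phones of {phone_storage_gb} GB to hold one year of data")
```

Under these assumptions, one year of data would fill roughly 244 billion 512 GB phones, which is about 30 phones for every person on Earth.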

Fortunately, unlike in 1918, we don’t have to rely on students needing to learn and make sense of all these facts. Industry 4.0 is also characterized by advanced data analytics, including the use of classical rules-based AI systems and now deep learning approaches to make predictions from that data. The focus has shifted from mere automation to a comprehensive integration of systems across production and supply chains[lxvi], leading to more flexible manufacturing processes, enhanced human-machine collaboration that could help us make sense of all the information so we wouldn’t have to rely on humans to learn trillions of facts, and the ability to harness vast amounts of data for better decision-making and innovation. And we now have machines that can converse with us in natural human language about (and because of) all the information that is stored and that we are ubiquitously connected to. The information age made the age of machine learning and intelligence possible.

The collection of this information made it possible to develop and advance today’s AIs. Why? Because underneath every AI model is data that helps make predictions. As will be explained below, the more data a system has, the better predictions and judgments it can make. This includes everything from predictions related to what stocks to pick to how to best respond to your query or prompt.

The explosion in information was public. Largely behind the scenes, however, practitioners continued to work on the artificial intelligence we have now seen emerge, a field often described as the attempt to replicate human-level intelligence in machines. This had been a goal of computer scientists since at least the 1950s, but scientists were always stymied by a lack of memory or storage in computers, and they argued internally over the best approaches to developing this intelligence. But as information storage and computing capacity expanded radically over the third and fourth industrial revolutions, radical advances became possible, and, as we all now know, we now have computers that can interact with us.

This happened because Geoffrey Hinton, Yann LeCun, and Yoshua Bengio, now known as the “Godfathers” of AI, pushed for an approach based on modeling the processes of the human brain with “neural networks,” an idea that can be traced back to the 1940s, when scientists first proposed creating mathematical representations of the 86 billion neurons and 100 trillion connections[lxvii] in the human brain[lxviii]. By the early 2010s, they began to focus on deep learning, which uses neural networks with many layers[lxix]. This was made possible by the large datasets created by the information economy and by the expanded memory and processing power of computers, which overcame previous technological barriers[lxx].

This “deep learning” is what has made technologies such as ChatGPT, and likely continued radical advances in AI, possible. It enables machines to learn on their own when they are exposed to larger sets of data and provided with more computational power,[lxxi] something referred to as “scaling,” which many believe has no limits[lxxii]. Conceptually, it’s not complicated. An input layer of neurons receives data, such as a piece of text. One or more middle layers process the data, and the output layer relays the prediction. During training, the model adjusts its internal settings to make better predictions, and the more data it is fed, the better its predictions. These adjustable settings are called parameters, and a model’s size is usually reported as its total number of parameters. ChatGPT3.5 has 175 billion parameters[lxxiii]. The total number of parameters in ChatGPT4 has not been disclosed but is estimated to be approximately 1.7 trillion[lxxiv]. The Chinese company Alibaba has developed a model that some claim has 10 trillion parameters[lxxv]. China now has at least 130 LLMs, representing 40% of the global total and behind only the United States' 50%[lxxvi].
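To make the input layer, middle layer, output layer, and parameter ideas concrete, here is a toy sketch in plain Python. It is not how ChatGPT or any production model is built. The layer sizes, the tiny logical-OR dataset, the tanh activation, and the learning rate are all assumptions chosen only to keep the example small enough to read.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

N_IN, N_HIDDEN = 2, 3  # illustrative sizes, not taken from any real model

# Parameters: the adjustable numbers the network learns during training.
w_hidden = [[random.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b_hidden = [0.0] * N_HIDDEN
w_out = [random.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)]
b_out = 0.0

def count_parameters():
    """Count every adjustable weight and bias in the network."""
    return sum(len(row) for row in w_hidden) + len(b_hidden) + len(w_out) + 1

def forward(x):
    """Input layer -> middle (hidden) layer -> output layer."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sum(w * h for w, h in zip(w_out, hidden)) + b_out
    return hidden, output

# Toy training data (an assumption for this sketch): the logical OR of two inputs.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

def mean_squared_error():
    return sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA)

def train(epochs=2000, lr=0.1):
    """Nudge every parameter a little after each example to reduce the error."""
    global b_out
    for _ in range(epochs):
        for x, y in DATA:
            hidden, output = forward(x)
            error = output - y
            for j in range(N_HIDDEN):
                # Gradient reaching hidden unit j (uses the old output weight).
                grad_hidden = error * w_out[j] * (1 - hidden[j] ** 2)
                w_out[j] -= lr * error * hidden[j]
                for i in range(N_IN):
                    w_hidden[j][i] -= lr * grad_hidden * x[i]
                b_hidden[j] -= lr * grad_hidden
            b_out -= lr * error

loss_before = mean_squared_error()
train()
loss_after = mean_squared_error()

print("parameters:", count_parameters())
print("error before training:", round(loss_before, 4))
print("error after training:", round(loss_after, 4))
```

This toy network has 13 parameters. The models discussed above are reported to have hundreds of billions or more, but the basic loop is the same: predict, measure the error, and adjust the parameters.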

In this machine age, we will not just see advances in artificial intelligence but also advances in new technologies, many of which are accelerated because of AI. These include robotics[lxxvii] and the development of synthetic biology, “an emerging field of research where researchers construct new biological systems and redesign existing biological systems[lxxviii].” There is obviously a lot of potential for synergy between artificial intelligence in machines and the creation of synthetic biological systems, including the development of intelligence within these systems.[lxxix] We are already approaching a point where brain-computer interfaces (BCIs) can improve the functioning of existing biological systems[lxxx]. We will also see developments in gene editing[lxxxi], quantum computing (see appendix F), micro bots that will swim in the body[lxxxii], and bots that will assert claims of legal identity[lxxxiii]. Combined, these technologies will trigger radical changes in our world[lxxxiv].

You can see from the discussion in the last few sections how technology development has accelerated rapidly over the last 100 years. This change has already been rapid, but as noted by McKinsey in a 2021 report (citing Peter Diamandis who attributes the quote to Ray Kurzweil[lxxxv]) written even before the recent rapid advances in AI, the rate of technological change over the next 10 years is likely to exceed the rate of change over the last 100 years[lxxxvi].

We need an education system that prepares today’s youth for this change, and it needs to be one that fortifies their intelligence as well as their capacity for love, endurance, and courage.

And how are we developing intelligence in this 4th industrial revolution? We are developing human intelligence in this era largely the same way we developed it in the second industrial revolution: by monitoring time spent on tasks and memorization of facts, with some information management/organization and critical thinking related to the information imported from the third industrial era.

And how are we going to handle the 5th given that we haven’t yet adapted to the 4th?

Developing Intelligence in the Fifth Industrial Era or a ‘Cambrian Explosion’?

Introduction

Intellectuals and technologists increasingly argue that we are entering a Fifth Industrial Revolution, an era not merely defined by new tools, but by a fundamentally new relationship between humans and intelligent machines.[lxxxvii] While the Fourth Industrial Revolution introduced artificial intelligence, robotics, and cyber-physical systems, the Fifth (often referred to as Society 5.0) envisions a world where human life and machine intelligence are deeply intertwined, evolving together in symbiosis[lxxxviii].

Already, we are starting to see that this new era is defined by the fusion of digital, physical, and biological systems. At the heart of this transformation is the integration of AI with biomanufacturing, synthetic biology, robotics[lxxxix], and advanced materials, driving breakthroughs across industries and enabling solutions that address both productivity and societal well-being. AI-powered digital twins are revolutionizing biomanufacturing by enabling real-time simulation and control of cellular and chemical processes, optimizing parameters such as temperature, pH, and nutrient delivery to achieve efficiency gains[xc].

Parallel advances in biofoundries—automated facilities integrating DNA synthesis, gene editing, and metabolic engineering—are dramatically accelerating the prototyping cycle for engineered organisms, enhancing scalability and precision in synthetic biology[xci]. These systems operate through an iterative feedback loop