Designing Schools AI Bootcamp; Educating4ai.com; Co-Editor Navigating the Impact of Generative AI Technologies on Educational Theory and Practice.

TLDR

*There was a lot of talk about learning bots. The discussion covered the benefits of 1:1 tutoring, access to education for those who don’t currently have it (developing world), the ability to do things for which we currently don’t have enough teachers and support staff (speech pathology), individualized instruction (it will be good at this soon), and things it is already good at: 24/7 availability, language tutoring, immediate feedback on argumentation and genre (not facts :)), putting students on the right track, comprehensive feedback, and more critical feedback.

*Students are united. The student organizers and those who spoke at the conference have concerns about future employment, want to learn to use generative AI, and express concern about being prepared for the “real world.” They also all want a say in how generative AI is used in the college classroom. Many professors spoke about the importance of having conversations with students and involving them in the creation of AI policies as well.

*I think it’s fair to say that all professors who spoke thought students were going to use generative AI regardless of whether or not it was permitted, though some hoped for honesty.

*No professor who spoke thought using a plagiarism detector was a good idea. Every single professor I heard comment on them, even one who had strong opposition to generative AI, thought the detectors should not be used.

*Everyone thought that significant advancements in AI technology were inevitable.

*Many people pointed out that overall knowledge of the existing technologies (especially outside of ChatGPT) amongst faculty is very limited and that they want and need professional development.

*Professors were unsure as to what to suggest regarding universities buying the tools for faculty and staff. Institution-wide subscriptions obviously address equity concerns, as those with means do purchase them, but some expressed concern over lack of training to use the tools (and their abilities will change constantly) and what indirect endorsement it may signal.

*Almost everyone expressed being overwhelmed by the rate of change.

KEY TAKEAWAYS FOR ME

These are some new ideas I hadn’t thought of previously that I think are critical:

*Educators are worried that AI tools will take their jobs, but students are also worried about the future of jobs and that they will be at a competitive disadvantage in the job market if they don’t know how to use AI tools properly. One student highlighted that students and professors have shared concerns about this and should work together.

*One professor noted that while teachers and professors cannot change all of their instructional methods and assessments quickly, they should change key assessments and those that are most vulnerable to being completed by AI. Over time, and through an iterative process, changes can be made.

*I enjoyed hearing so many people talk about the development of communication and critical thinking skills in students and even of argumentation and organizing arguments in writing. GAI has rejuvenated the importance of teaching debate for me, and I’m working on an academic paper with some friends about the importance of using debate in and outside the classroom as an instructional and assessment method in an AI world.

Introduction

Today I attended the majority of the AIX Education conference, which was organized by undergraduate students from the University of Illinois, Lakewood, NYU, and Stanford who understand their education and world will be heavily impacted by AI, especially generative AI.

As a speaker in K–12 today, I had the chance to witness firsthand a small part of the massive amount of work they did to organize a conference with 60+ speakers and 4400 attendees.

I believe it is one of the largest, if not the largest, conferences organized to date on this critical subject, and, again, it was organized by students.

These are the highlights based on what I saw.

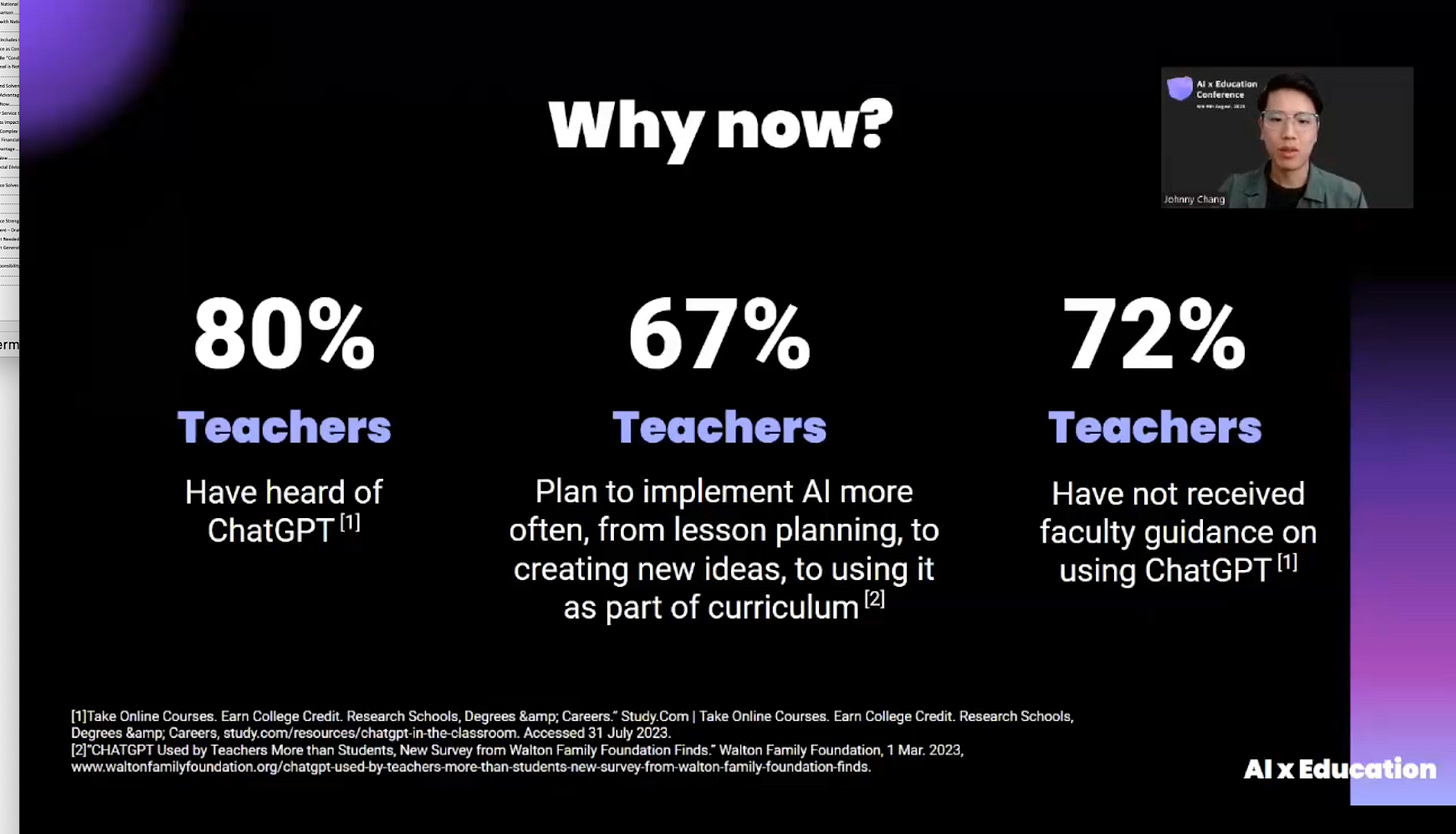

(1) Teachers and professors are aware of AI and GAI and want to use them in the classroom, but very few have received professional support to do so.

So, yes, there is a massive gap between teacher interest and training.

(2) College students are using AI whether their professors like it or not :). It seems its use has to be accounted for/assumed and efforts need to be made to positively integrate it into the classroom.

(3) Common Use Cases and limitations were discussed

Uses

Brainstorm

Feedback – resume, work (specific feedback)

Summarize

Research (Perplexity)

Stats visualization (Code Interpreter, I assume)

Lesson plans, including a full 6 weeks of plans

Limits

Bias

Hallucination

Overreliance

Inequity

(4) Dr. Kristen DiCerbo showed one of the first pieces of technology that was used in education. If a student got four answers right, they got a piece of candy.

Although she didn’t mention it, one thing I’ve been thinking a lot about is how social media engages students and how teachers struggle to keep students engaged, especially relative to social media. The idea of students being “rewarded” by GAI bots seems great, but what kind of rewards might they get?

(5) Dr. DiCerbo pointed out that AI, and especially GAI (the focus of the conference), cannot solve all of the problems in education but that it can help with some of them.

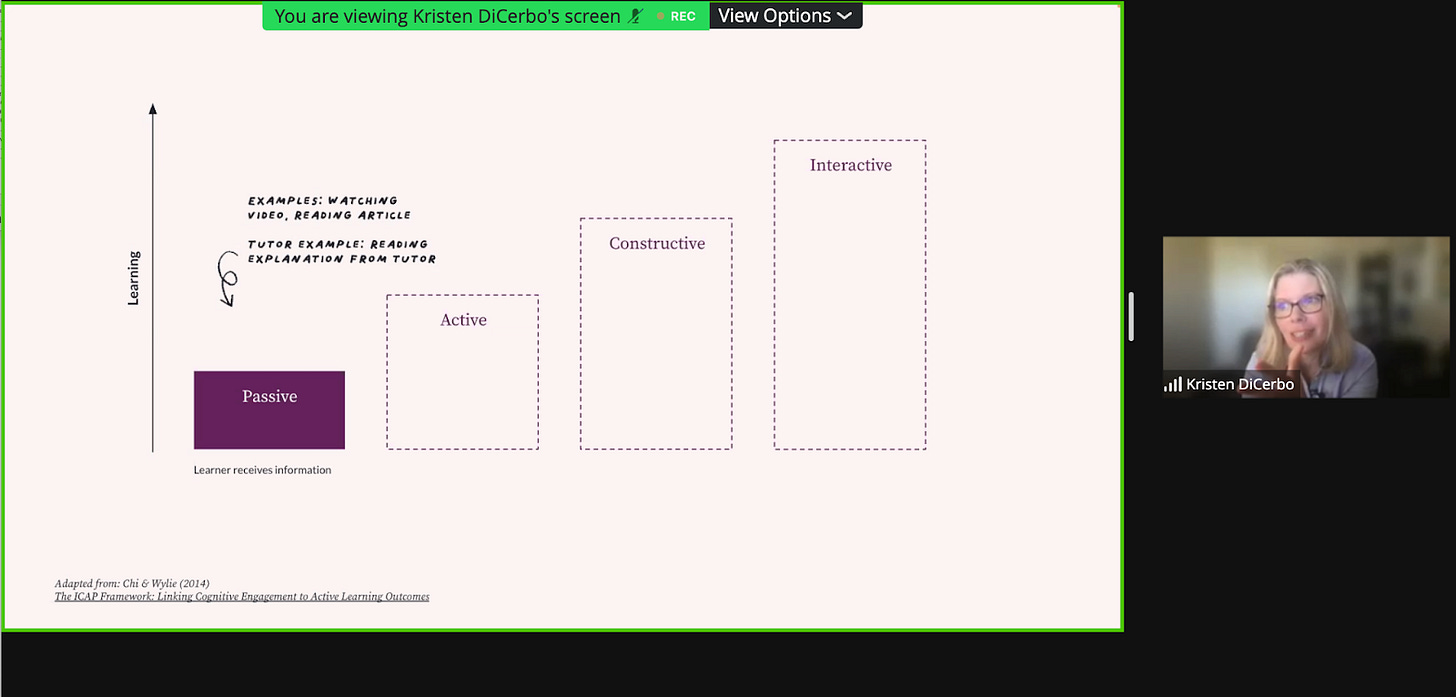

Active or cognitive engagement. These technologies have the potential to engage students at an individual level in interesting ways. Obviously, engagement alone is not enough to make learning happen, but learning won’t happen without engagement.

Students who are almost there. As Dr. DiCerbo noted, students learn the most when they are challenged with material that is right on the edge of what they know. GAI learning bots can provide the challenge and support the student.

Immediate feedback and answers. Bot tutors can provide immediate feedback, not just in terms of the answers but also by helping to coach or tutor students through a back-and-forth dialogue. This is the first time this has been practical, or at least it will be eventually.

(6) Dr. DiCerbo revealed a new college essay writing tool.

(7) Dr. DiCerbo pointed out the importance of interacting or dialoguing with the models.

(8) She highlighted some new educational models that support the transition from passive to active, constructive, and interactive learning and how those support education.

(9) She discussed the important role of human teachers in supporting students.

(10) She did acknowledge that we really need to have conversations about the fact that ChatGPT (and similar models) is another school that students can learn at and that students will use these technologies in their jobs.

(11) She pointed out that these tools cannot:

Know what happened in your class

Know what happened in your community

So they cannot help students “cheat” on that.

(12) Whatever school policy you have, it should be clear.

(13) We need conversations because it’s all new to everyone.

(14) How do you choose which system(s) to use?

(a) What happens to the data?

(b) What safety measures have been implemented?

(c) How can adults know what students are doing?

(15) Student questions: Will this reduce our imagination and creativity?

(16) Dr. DiCerbo pointed out that Khan Academy/Khanmigo has a lot of restrictions to prevent students from just getting answers, but they can obviously get those in other places.

(17) Teachers and professors cannot change everything at once, but they can start with some changes.

(18) Dr. Jinjun Xiong from the National AI Institute explained that there are not enough speech pathologists to support all the needs of students, but that AI solutions (AI screeners that flag children who may have early signs of a speech problem, and ability-based interventions for each child to support teachers) may be able to help identify and solve these problems.

There are often conversations about AI competing with humans, but in this case, there aren’t enough humans to support student needs.

Currently, even the best AI technology cannot do this, but they are working on solutions, and we should continue to advance AI.

One interesting thing this brings up about AI screeners is privacy.

(19) There is nothing in the structure of an LLM that requires its output to be true.

(20) Dr. Julia Hockenmaier, an AI professor at the University of Illinois, points out that no matter what she does in class, students will use the LLMs to do their work. She points out that this means we can no longer know what students are actually learning and what work they are doing, even with the inherent limitations of LLMs. This also creates inequity between those who use it and those who don't, because those who are using it are producing better work.

(21) Dr. Xiong pointed out that educators really need to understand what LLMs can’t do well and what they do well. In terms of use, he says everyone needs to participate in conversations about its use.

(22) Jan Bartkiowak, a student at Minerva University, who is focused on AI in education and business education, thinks current business education is too passive, theoretical, and devoid of emotions and marketing.

He says education means learning in a way that uses AI to amplify human capabilities, not building mental competencies that can be automated by AI. He shared ways he says it can be done.

And he shared examples of how he is using it

He said professors should continually consult with students about how generative AI is used in the classroom. He hopes that if the classroom is a more positive place then students will just want to learn.

And he shared some tools everyone might consider using.

He makes a strong case for using generative AI in business education, and I hope he has a chance to work with Ethan Mollick some day.

(23) Chinaat You, a Learning Design MA student at Stanford, talked about how he created a bot that was basically able to imitate his professor, but stressed that it is only through our interpretation of AI output that we can turn that output into knowledge.

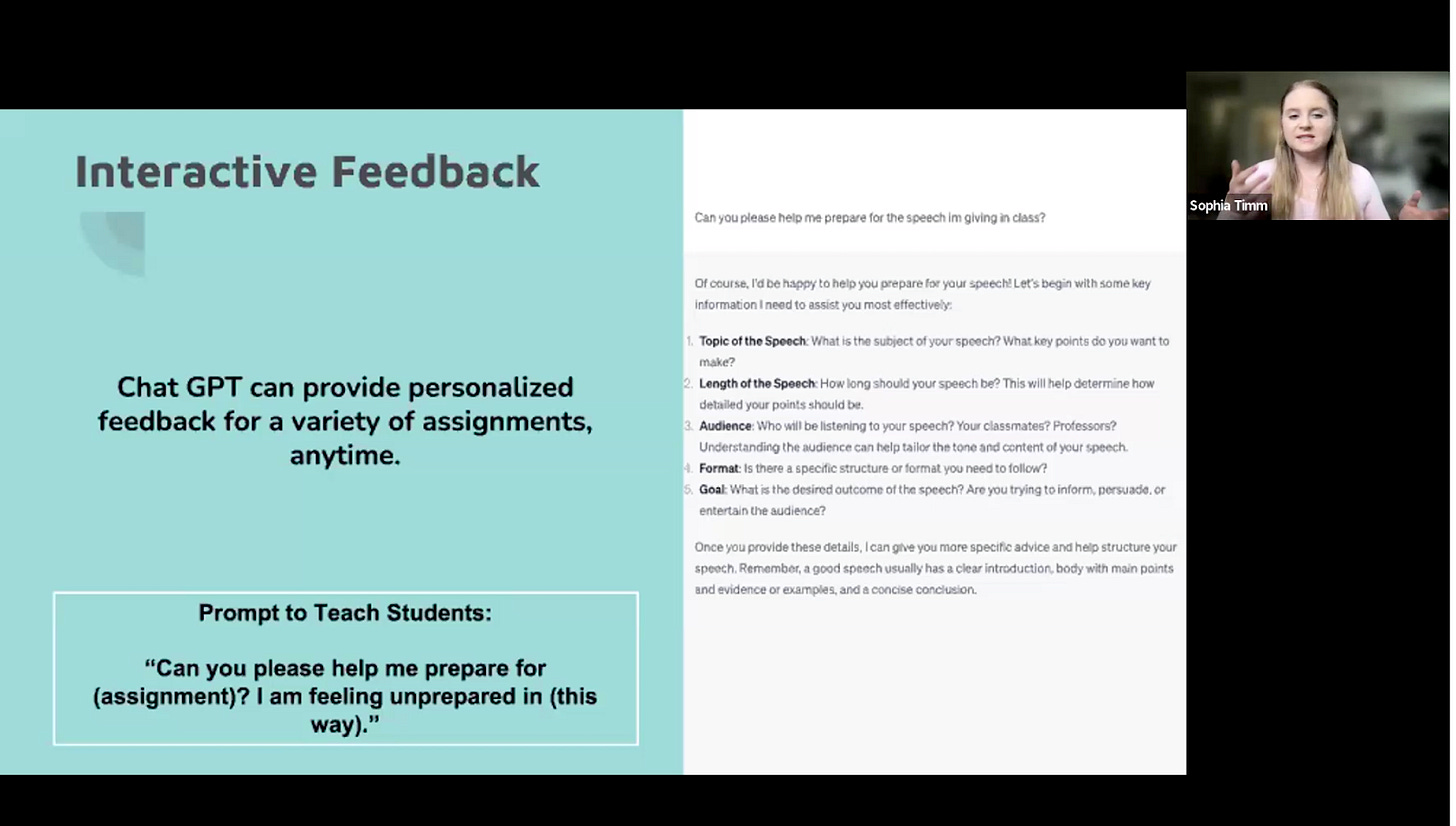

He explained that something that took 5 hours in Excel took 2 minutes with ChatGPT Code Interpreter.

(24) Sophia Timm spoke about the importance of equity in education and how students who have access to GAI technologies and learn to use them have a large advantage in school and work over those who don’t. She suggests we actively take action to reduce inequity and even use AI to do that.

(25) Sophia concluded by pointing out that if we are going to say we value lifelong learning, then we should be lifelong learners and learn about generative AI.

(26) Fien van Dien Hondel, a student from the Netherlands who is now at Minerva in California, highlighted how effective ChatGPT is at language tutoring and the importance of training to use it at work.

She argued we should focus on durable skills such as critical analysis.

She highlighted why we should not rely on ChatGPT and that it needs continued critical improvement.

(27) Fien also stressed the importance of professors listening to students about their concerns related to generative AI and using it in the classroom.

(28) Professor Mike Yao pointed out how much potential generative AI has as a learning companion but also noted that less motivated students can use it to avoid doing important work.

(29) Professor Yao also questioned how students may know if the output is valid if they don’t have enough knowledge to assess that to begin with.

(30) Jeff Shatton, an associate professor of business administration who works on learning bots, pointed out that wealthy students already have 1:1 tutoring and that in many developing countries, students do not have affordable educational opportunities, and even those that do are stuck in classrooms with 80 kids (Cape Town, 2010). Given this, he thinks learning bots will lead to large advances in educational equality.

He also thinks the bots will advance to help students develop soft/durable skills.

He openly wondered if he'd have a job in 10 years as a college professor, given the anticipated power of these learning bots.

(31) Anthony Autman pointed out that there is nothing we can do to punish student use short of a confession and that students are going to use it regardless, so they might as well learn to use it properly.

(32) Autman says some professors are going back to paper and oral exams.

(33) Autman claimed that when he gave his class permission to use ChatGPT for class work, only 20% used it (I’m not sure how he knew that).

(34) Autman made a strong argument that people still want to express themselves.

(35) John Haberstoh, a history professor, expressed concern about AIs taking over reading and writing. He doesn’t support using plagiarism detectors (he’ll never use them again) and hopes that class policies and dialogue with students will mean they only use it when appropriate. He’s doubling down on communication with students to express learning expectations and purposes.

He suggested some different ideas for how professors might approach it.

(36) Professor Sidney Dubrin talked about how GAI has thrown open the rules/norms/expectations of what we do and how we do it.

(37) Dubrin clarified two conceptualizations of AI: Conceptual AI and Applied AI. Conceptual AI is about whether we should use it and its society-wide impacts. Applied AI is about how we use it in specific instances and what that means.

(38) Dubrin discussed what it means for reading. CliffsNotes, movie adaptations, and online summaries have always raised the question of whether students actually read the text.

Previously, we’ve issued writing assignments to see if they’ve done the reading assignments. Given the ubiquitous development of summarizers, how can we know whether they read it if they use generative AI?

The growing number of platforms makes this a greater problem – ReadGen, Claude will read a book.

How does interacting with these technologies change how and what we read?

Professor Watkins (below) talked about the SciSpace reading applications and meeting summarizers such as OtterAI.

(39) Mark Watkins, professor of writing and director of the AI Center for Teachers, highlighted some tools that are designed to help students write.

I thought his tracing analogy was useful. Is using a writing assistant the same as an artist tracing?

He pointed out that ChatGPT is not designed as a writing assistant.

He highlighted some tools that are specifically designed to help students with writing.

He also suggested a framework for guiding students through the writing process with generative AI.

He also suggested some reading assistance technologies, such as the common summarizers in Claude 2 and Perplexity.ai.

(40) Professor Lance Eaton talked about writing in the College Unbound program he works in and how he’s been working on studying generative AI related to his programs. College Unbound taught two courses on AI literacy in the spring and is working to test school policies that were developed from those courses in the fall. He talked about how important it was that the conversation occur with students.

(41) Eaton talked about how we shifted from the oral to the written and that we are now managing a new shift that incorporates AI.

(42) Eaton also discussed how the technologies sort of compress or suppress the diversity of language.

(43) Eaton pointed out that learning is a black box: we don’t really know what works, and there is no one model that works for everyone. Teaching and learning with GAI will force us to focus on these teaching and learning questions.

(44) Dubrin stressed the importance of students learning to write.

(41) Watkins pointed out that most educators don’t know about these tools; they only know about ChatGPT, and they need to learn about these technologies. He suggests slowing things down, but obviously that is hard.

(45) Eaton pointed out that AI might be a more objective grader.

(46) Eaton pointed out that it can help struggling (for a variety of reasons) learners better access learning by building a bridge to more complicated subjects and helping them accomplish tasks, such as writing a cover letter, that have nothing to do with the job itself.

(47) Graduate student Anvi Patel said students, like professors, are worried about the future of jobs.

(48) Professor Tanya Means argued that we should focus on what people are good at and what machines are good at and not try to get students to do what machines are good at.

Conceptually, this is a good approach, but that gap will obviously narrow over time. I think we also need to help students learn how to work with machines that are as good as or better than humans at what humans can do. We work with people all day who are often better at doing some of the things we do.

(50) I thought Means made a strong point that while professors cannot adapt all assignments and assessments to make sure there is integrity in every assessment in a world of generative AI, they should work to adjust the critical ones.

(51) Professor Kang made the point that students need more skills than how to use ChatGPT.

(52) Jina Kang said students need more than one skill, not just ChatGPT, and highlighted critical thinking as one of them.

(53) Someone (my notes lost track) explained that students need fundamental knowledge to think critically and that we can’t skip that step.

(54) Some additional methods to reduce the chances of students relying on AI to write papers were discussed

Developing intrinsic motivation

Fact checking ChatGPT/GenAI work while the tools are still weak

Trying to evaluate the nuance that only humans can bring

Projects that are hard for AI to do – preparing a report for a specific board, writing a memo to a specific boss, doing a research paper for a specific boss (Means)

Using AI to do basic work, such as prototyping solutions and then improving on it with human nuance

(55) A CS student pointed out that learning to code is only 10% about coding. 90% is problem solving.

(56) The importance of learning to learn (Means) was discussed.

(57) There is no way for plagiarism detectors to work (Cope, professor of education policy). There is no alternative but to use generative AI in the classroom.

(58) Current tools are mediocre; we are better. But the tools will improve and have an impact. If we learn to use these tools we may be okay. We don’t know what jobs will look like in 2-5 years or exactly how these tools will be used in jobs, but they will be used there and will have a big impact on the job market.

(59) Teaching creative, communication, and critical thinking skills is key. (Angrave)

(60) Blind reliance on the tools is bad (hallucinations, no learning) (Dhingreja)

(61) Students are developing their own bots using OpenAI’s API (Dhingreja)
