A Review of the Major Models: Frontier (M)LLMs, Deep Researchers, Canvas Tools, Image Generators, Video Generators

Frontier AI Models: The Engines of Modern AI

Frontier AI models are today's most capable AI systems, excelling at coding, writing, research, and reasoning, and at understanding several types of content at once. Originally called Large Language Models (LLMs) when they primarily processed text, these systems are now more accurately described as Multimodal Large Language Models (MLLMs), and increasingly as agents that take action. Unlike earlier AI that handled only text, these models process words, images, audio, and video through a unified architecture that treats all information as part of the same underlying "language."

This unified approach enables remarkable capabilities: the same model can describe what's happening in a photo, respond to a spoken question about that image, or explain a mathematical concept while highlighting relevant parts of a diagram—all seamlessly integrated rather than pieced together from separate specialized systems.

These models also go beyond generating output: they can take action. Through function calling, they decide on their own when an external tool would help, request specific information or computations, and weave the results into their responses. A model might query a database for current statistics, run a complex calculation, write and execute Python code, or search the web for recent developments, then incorporate this fresh information into a comprehensive answer.
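A minimal sketch of that tool-calling loop, assuming a hypothetical model interface that returns either a tool request or a final answer as a Python dict. Real providers each define their own message formats; `run_agent`, `scripted_model`, and the `multiply` tool are illustrative names, not any vendor's API.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: the model can only call what is listed here.
TOOLS: dict[str, Callable[..., Any]] = {
    "multiply": lambda a, b: a * b,
}


def scripted_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for a real model: requests a tool once, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        # The "model" decides a calculation is needed and requests it.
        return {"tool": "multiply", "arguments": {"a": 12, "b": 7}}
    value = json.loads(tool_results[-1]["content"])
    return {"answer": f"12 x 7 = {value}"}


def run_agent(question: str, model: Callable, tools: dict, max_steps: int = 5) -> str:
    """Alternate between model turns and tool calls until the model answers."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        if "answer" in reply:
            return reply["answer"]
        # Execute the requested tool and feed its result back to the model.
        result = tools[reply["tool"]](**reply["arguments"])
        messages.append(
            {"role": "tool", "name": reply["tool"], "content": json.dumps(result)}
        )
    raise RuntimeError("model did not produce an answer within max_steps")


print(run_agent("What is 12 times 7?", scripted_model, TOOLS))  # 12 x 7 = 84
```

The `max_steps` cap is a simple safeguard so that a model which keeps requesting tools cannot loop forever.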

This combination of multimodal understanding and tool use transforms static AI models into dynamic agents that can perceive, reason, and act across different types of information and real-world data sources.

Note: Beyond Next-Word Prediction from a Training Data Set

While these models are built on a foundation that predicts the next word or token in a sequence, they've evolved far beyond simple prediction through reinforcement learning, tool integration, strategic planning, and emerging memory systems.
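To make "predicts the next word" concrete, here is a toy bigram model: it estimates a probability distribution over the next word from counts in a tiny training text, then generates by greedily appending the most likely word. Frontier models use transformer networks over subword tokens and usually sample rather than always taking the top choice, so treat this only as an illustration of the underlying prediction loop.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn raw counts into a probability distribution over the next word."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}


def generate(counts: dict[str, Counter], start: str, max_tokens: int = 5) -> list[str]:
    """Greedy decoding: repeatedly append the most likely next word."""
    out = [start]
    for _ in range(max_tokens):
        probs = next_token_probs(counts, out[-1])
        if not probs:
            break
        out.append(max(probs, key=probs.get))
    return out


model = train_bigram("the cat sat . the cat sat . the dog ran .")
print(next_token_probs(model, "the"))
print(generate(model, "the", max_tokens=3))  # ['the', 'cat', 'sat', '.']
```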

Reinforcement learning is a training method where AI systems learn by taking actions and receiving feedback on whether those actions were helpful or harmful—similar to how humans learn through trial and error. This process teaches models not just to generate plausible text but to produce outputs that are useful, accurate, and aligned with human preferences.
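The trial-and-error idea can be illustrated with one of the simplest reinforcement learning setups, an epsilon-greedy multi-armed bandit. This is not how frontier models are actually trained: pipelines such as reinforcement learning from human feedback (RLHF) update a neural network's weights using a reward model learned from human preferences. The response "styles" and rater scores below are hypothetical, chosen only to show actions, rewards, and updated estimates.

```python
import random

# Hypothetical response styles and the score a simulated rater gives each
# (1.0 = helpful, 0.0 = not helpful).
RATER_FEEDBACK = {"vague": 0.0, "off_topic": 0.0, "helpful": 1.0}


def train_by_feedback(steps: int = 1000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Epsilon-greedy bandit: try actions, observe rewards, update estimates."""
    rng = random.Random(seed)
    estimates = {action: 0.0 for action in RATER_FEEDBACK}
    counts = {action: 0 for action in RATER_FEEDBACK}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(list(RATER_FEEDBACK))  # explore a random action
        else:
            action = max(estimates, key=estimates.get)  # exploit the current best guess
        reward = RATER_FEEDBACK[action]
        counts[action] += 1
        # Incremental average of the rewards observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


learned = train_by_feedback()
print(max(learned, key=learned.get))
```

Over many trials the feedback pulls the system toward the action that is rewarded most, which is the core intuition behind learning from feedback, even though real training operates on far richer outputs than three fixed choices.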

The integration of tools further distances these systems from mere word prediction. When a model chooses to search the web, perform a calculation, or query a database, it's making strategic decisions about what information it needs in order to respond well—rather than simply continuing a text pattern. The underlying predictive architecture remains, but now it supports more advanced reasoning behaviors: pausing to gather facts, reconsidering its approach, and synthesizing results from multiple sources. These are actions that go far beyond just guessing the next word.

The addition of strategic planning gives these models even more power. Many are now able to break a task into multiple steps, decide which tools to use, and sequence their actions intelligently—much like a human assistant would. This agent-like behavior allows them to solve complex problems, combining knowledge gathering with reasoning to produce thoughtful, tailored responses.
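The sequencing part of planning can be sketched as a dependency graph: each step names the steps it needs, and a topological sort guarantees that nothing runs before its inputs exist. This uses Python's standard `graphlib` module; the step names and values are hypothetical, and a real agent would typically generate and revise such a plan itself rather than receive a fixed one.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable

# Each hypothetical step: (names of steps it depends on, action using earlier results).
Step = tuple[set[str], Callable[[dict[str, Any]], Any]]


def execute_plan(steps: dict[str, Step]) -> dict[str, Any]:
    """Run every step only after all of its dependencies have finished."""
    graph = {name: deps for name, (deps, _) in steps.items()}
    results: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        _, action = steps[name]
        results[name] = action(results)
    return results


# Deliberately listed out of order: the sorter works out the execution sequence.
plan: dict[str, Step] = {
    "draft_answer": ({"compute_average"}, lambda r: f"Average price: {r['compute_average']:.2f}"),
    "compute_average": (
        {"gather_prices", "count_items"},
        lambda r: sum(r["gather_prices"]) / r["count_items"],
    ),
    "gather_prices": (set(), lambda r: [10.0, 12.0, 14.0]),
    "count_items": (set(), lambda r: 3),
}

print(execute_plan(plan)["draft_answer"])  # Average price: 12.00
```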

Memory and personalization are also emerging features in today’s most advanced models. Some can remember information from prior interactions, recall user preferences, and maintain context across sessions. This turns the AI from a stateless generator into a partner that adapts to its user over time.
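One common approach to cross-session memory stores facts outside the model and injects them into the prompt at the start of each new session, so the model's weights never change. The sketch below assumes that design, using a hypothetical `UserMemory` class serialized to JSON; production systems differ in what they store and how they decide what to recall.

```python
import json
from typing import Optional


class UserMemory:
    """Hypothetical per-user memory that persists between chat sessions."""

    def __init__(self, facts: Optional[dict[str, dict[str, str]]] = None) -> None:
        self.facts = facts if facts is not None else {}

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.facts.setdefault(user_id, {})[key] = value

    def build_context(self, user_id: str) -> str:
        """Text that would be prepended to the model's prompt in a new session."""
        prefs = self.facts.get(user_id, {})
        if not prefs:
            return ""
        lines = [f"- {k}: {v}" for k, v in sorted(prefs.items())]
        return "Known user preferences:\n" + "\n".join(lines)

    def to_json(self) -> str:
        return json.dumps(self.facts)

    @classmethod
    def from_json(cls, data: str) -> "UserMemory":
        return cls(json.loads(data))


# Session 1: the assistant learns a preference and saves it.
session_1 = UserMemory()
session_1.remember("alice", "citation_style", "APA")
saved = session_1.to_json()

# Session 2: a fresh instance restores it, so the user need not repeat it.
session_2 = UserMemory.from_json(saved)
print(session_2.build_context("alice"))
```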

While none of this guarantees perfect accuracy, it does represent a fundamental shift. When connected to search and other tools, these models are no longer limited to knowledge frozen at training time: they can retrieve current information, check facts against live sources, and incorporate fresh data into their reasoning. This makes them far more capable of handling current events, evolving knowledge, and situations not covered by their training data.

In short, today’s models still rely on next-token prediction under the hood, but layered on top are feedback loops, tool use, planning abilities, and memory systems that enable them to act less like text generators and more like reasoning, goal-directed assistants.

Note 2: Power Beyond the Models: The Application Layer

The power of AI is not limited to the capabilities of the underlying models. Its potential is often realized through specialized applications that integrate these models into a purpose-built workflow. For instance, while frontier models like Claude Opus 4 and OpenAI's o3-pro have formidable coding abilities, a dedicated application like the Cursor code editor can significantly boost a developer's productivity. Cursor moves beyond the conversational chat interface by integrating AI deeply into the local development environment. It lets a developer reference files across their local project, highlight code for specific actions such as refactoring or debugging, and generate new code with full project context, a smoother and more powerful experience than copying and pasting code from a separate chat window.

This principle extends to many other domains. Research assistants like Perplexity are not just search interfaces; they create a structured environment for inquiry with features like follow-up questions and cited sources that go beyond a single model response. Presentation tools like Gamma or Tome use AI to automate the entire process of creating a slideshow from a simple prompt, handling layout, design, and image sourcing. In each case, the application provides a specialized interface and workflow that channels the model's power into a specific task, suggesting that much of AI's practical impact comes from pairing a frontier model with a well-designed application.

1. A Trend Towards Specialization

The market is also trending toward specialization: companies now release multiple model variants tailored to specific tasks, each striking a different balance of performance, speed, and cost.

Comparative Table: Frontier AI Models

2. Deep Research: Autonomous AI Research Agents

Deep Researchers are a class of AI agents designed to automate multi-step, in-depth research. They break down complex questions, autonomously search for information, analyze sources, and synthesize findings into structured, well-cited reports.

Core Features:

Agentic Planning: They create detailed research plans and execute them systematically.

Autonomous Search: They can browse the web and access academic databases without manual intervention.

Iterative Analysis: They gather and refine information in cycles, adapting their approach as new insights emerge.

Structured Reporting: The output is typically a comprehensive, cited report, often exportable to destinations such as Google Docs.
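The plan, search, refine, and report cycle described above can be sketched in a few functions. Everything here is an assumption for illustration: the `CORPUS` of placeholder documents stands in for live web or database search, the planner simply splits the question on "and", and "refinement" just broadens a query that returned nothing. Commercial deep research agents use a language model for each of these steps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    url: str
    text: str


# Hypothetical offline corpus standing in for live search results.
CORPUS = [
    Source("https://example.org/a", "Report A discusses solar capacity."),
    Source("https://example.org/b", "Report B discusses wind capacity."),
    Source("https://example.org/c", "Report C discusses battery storage costs."),
]


def search(query: str) -> list[Source]:
    """Return sources containing every word of the query (case-insensitive)."""
    terms = query.lower().split()
    return [s for s in CORPUS if all(t in s.text.lower() for t in terms)]


def plan(question: str) -> list[str]:
    """Hypothetical planner: split a compound question into sub-queries."""
    return [part.strip() for part in question.split(" and ") if part.strip()]


def deep_research(question: str, max_rounds: int = 3) -> str:
    findings: list[Source] = []
    pending = plan(question)
    for _ in range(max_rounds):
        if not pending:
            break
        retry: list[str] = []
        for query in pending:
            hits = search(query)
            for src in hits:
                if src not in findings:
                    findings.append(src)
            words = query.split()
            if not hits and len(words) > 1:
                # Nothing found: broaden the query and try again next round.
                retry.append(" ".join(words[:-1]))
        pending = retry
    # Structured report with numbered citations.
    lines = [f"Question: {question}"]
    lines += [f"- {src.text} [{i}]" for i, src in enumerate(findings, start=1)]
    lines.append("Sources:")
    lines += [f"[{i}] {src.url}" for i, src in enumerate(findings, start=1)]
    return "\n".join(lines)


print(deep_research("solar capacity and battery prices"))
```

Here the sub-query "battery prices" matches nothing in the first round, so it is broadened to "battery" and succeeds in the second, a miniature version of adapting the approach as results come in.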

Comparative Table: Deep Research Capabilities

New Tools and Enhanced Capabilities

The deep research space is expanding with new, specialized tools.

Avidnote: A comprehensive AI research assistant designed specifically for academics, supporting the entire research lifecycle within a private environment.

Deepwriter AI: A tool aimed at professionals in medical, financial, and technical fields, capable of handling real-time web searches and PDF analysis with robust citation management.

Perplexity AI Updates: Perplexity has enhanced its research capabilities with features like an Academic Filter for scholarly articles, a Reasoning Effort Parameter to balance speed and thoroughness, an Asynchronous API for long-running queries, and Image Upload for multimodal search.

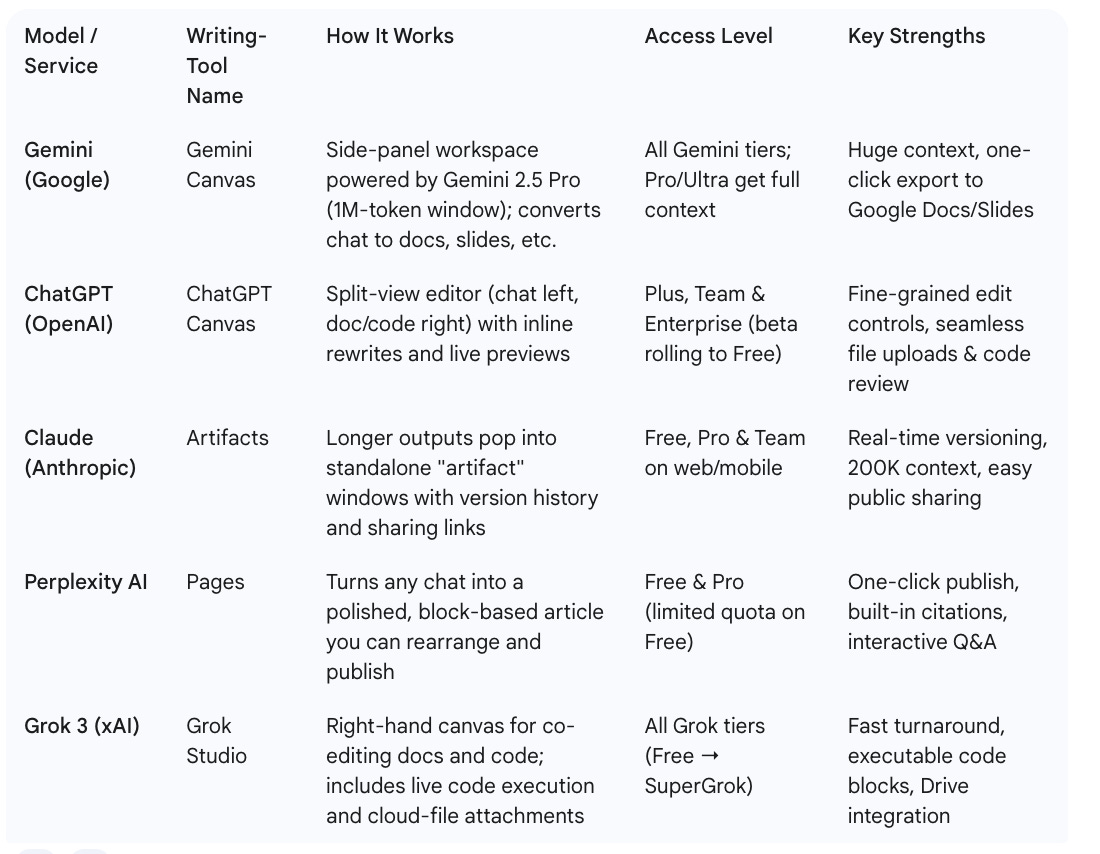

3. Canvas Writing Tools: Your AI Co-Writer

Writing-oriented "canvas" tools provide a home base for long-form creation with AI. Instead of a simple chat, the model's output appears in a dedicated document panel, similar to Google Docs or Notion, where you and the AI can co-author the content in a shared space.

How It Works:

Direct Editing: You can type, paste, and drag-and-drop content directly on the canvas, and the AI will adapt to your changes.

Prompt-Based Editing: Highlight text and give the AI commands like "tighten this" or "add APA citations" for instant revisions.
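Prompt-based editing on a canvas amounts to sending the highlighted span, plus the instruction, to the model and splicing the revision back into the document. In the sketch below, `toy_tighten` is a hypothetical stand-in for a model call that merely drops a few filler words, and representing a highlight as character offsets is likewise an assumption about how an editor might track a selection.

```python
from typing import Callable


def apply_to_selection(
    document: str,
    start: int,
    end: int,
    instruction: str,
    revise: Callable[[str, str], str],
) -> str:
    """Revise only document[start:end] and splice the result back in."""
    if not 0 <= start <= end <= len(document):
        raise ValueError("selection is outside the document")
    revised = revise(document[start:end], instruction)
    return document[:start] + revised + document[end:]


def toy_tighten(text: str, instruction: str) -> str:
    """Hypothetical stand-in for a model call: drops filler words on 'tighten this'."""
    if instruction != "tighten this":
        return text
    fillers = {"really", "very", "basically"}
    return " ".join(w for w in text.split() if w.lower() not in fillers)


doc = "Intro paragraph. This is really very important work. Closing line."
selection = "This is really very important work."
start = doc.index(selection)
print(apply_to_selection(doc, start, start + len(selection), "tighten this", toy_tighten))
# Intro paragraph. This is important work. Closing line.
```

In this design, text outside the selection is never passed to the reviser, so the rest of the document stays untouched.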

Comparative Table: Writing Tools

4. Image Generation: The Creative Revolution

AI image generation has become a core creative tool, transforming text descriptions into stunning visuals.

Comparative Table: Top AI Image Models

5. Video Generation: The Next Frontier

AI video generation is revolutionizing content creation for filmmakers, marketers, and educators, turning text or images into video clips.

Comparative Table: Leading AI Video Models

Commercial Considerations

Most video generation providers offer tiered pricing with limited free options. Costs reflect the substantial computational resources video generation requires, and many platforms tightened their free plans in 2025.

Conclusion and Future Outlook

By 2025, AI has moved from novelty to an essential component of academic, creative, and professional workflows. As these technologies evolve, we can expect further integration between text, image, and video, along with a greater focus on ethics and ownership. For anyone looking to leverage these tools, understanding the specific strengths of each system is crucial for selecting the right technology for the task. The most successful applications will come from those who strategically combine multiple AI capabilities to enhance human creativity.
