AI Writing Detectors Are Not Reliable and Often Generate Discriminatory False Positives

Teachers and schools are being tricked into wasting time and money on these tools, resources that could be better invested in training faculty.

Related: How to defeat writing detectors with a few simple steps, plus how to add spelling and grammar errors to your output to trick your teacher.

Last updated: 8/20/23

Relying on AI-text detectors to stop students from using AI to write their essays and papers is a lost cause. The detectors generate false positives and false negatives even when nothing has been done to alter the generated text, because the tools only look for patterns in the output, and no pattern is produced exclusively by AI. (These patterns are also becoming less common as the tools advance and learn to mimic the writing of the individual author; see below.) Because there is no definitive pattern, false positives (a student’s own writing wrongly identified as AI-written) are common. They are also more likely to occur in the writing of non-native speakers, who often write in the very patterns the detectors are trained to look for. The result is discriminatory false positives and discriminatory false accusations.

This false-positive issue is well known and well established, so a school administrator who faces a potential lawsuit for wrongly penalizing a student based on an AI writing detector cannot claim ignorance, that is, cannot claim the false-positive problem was unknown.

This is why schools such as Vanderbilt University, the University of Pittsburgh, and Australian universities have woken up to these problems and are finally moving away from the detectors.

Teachers and schools are being tricked into wasting time and money on these tools, resources that could be better invested in training faculty to teach with AI and to develop new approaches that will not expose them to well-deserved lawsuits.

So, why are these detectors a lost cause?

(1) What’s the point? AI writing tools are already integrated into industry and accessible to a billion people (Google and Microsoft are integrating these writers into their products, and they each have a billion users). People who write successfully in the future will do so with the tools, not without them. If we attempt to teach students to write without the tools, we are teaching them to write in a way they will not write in the future. So, yes, we are pointing unreliable and discriminatory detectors at students, resulting in false accusations while simultaneously undermining their job skills. Ugh.

__

The first reason gets at the value of the detectors, even if the detectors were accurate.

The remaining reasons get at problems with their accuracy. All of these problems largely assume students make at least some effort (not a lot) to disguise the writing, which most students are doing. If students simply copy and paste the entire output from most generative AI systems, the detectors will mostly work, flagging the writing at a high probability (though not always, even in that situation, because no pattern is provable).

If you read no farther, just read the most recent (July 2023) study:

Studies such as the one above are a “dime-a-dozen” and are cited throughout this paper.

OpenAI recently (July 2023) pulled its own detector because it just didn’t work and was discriminatory.

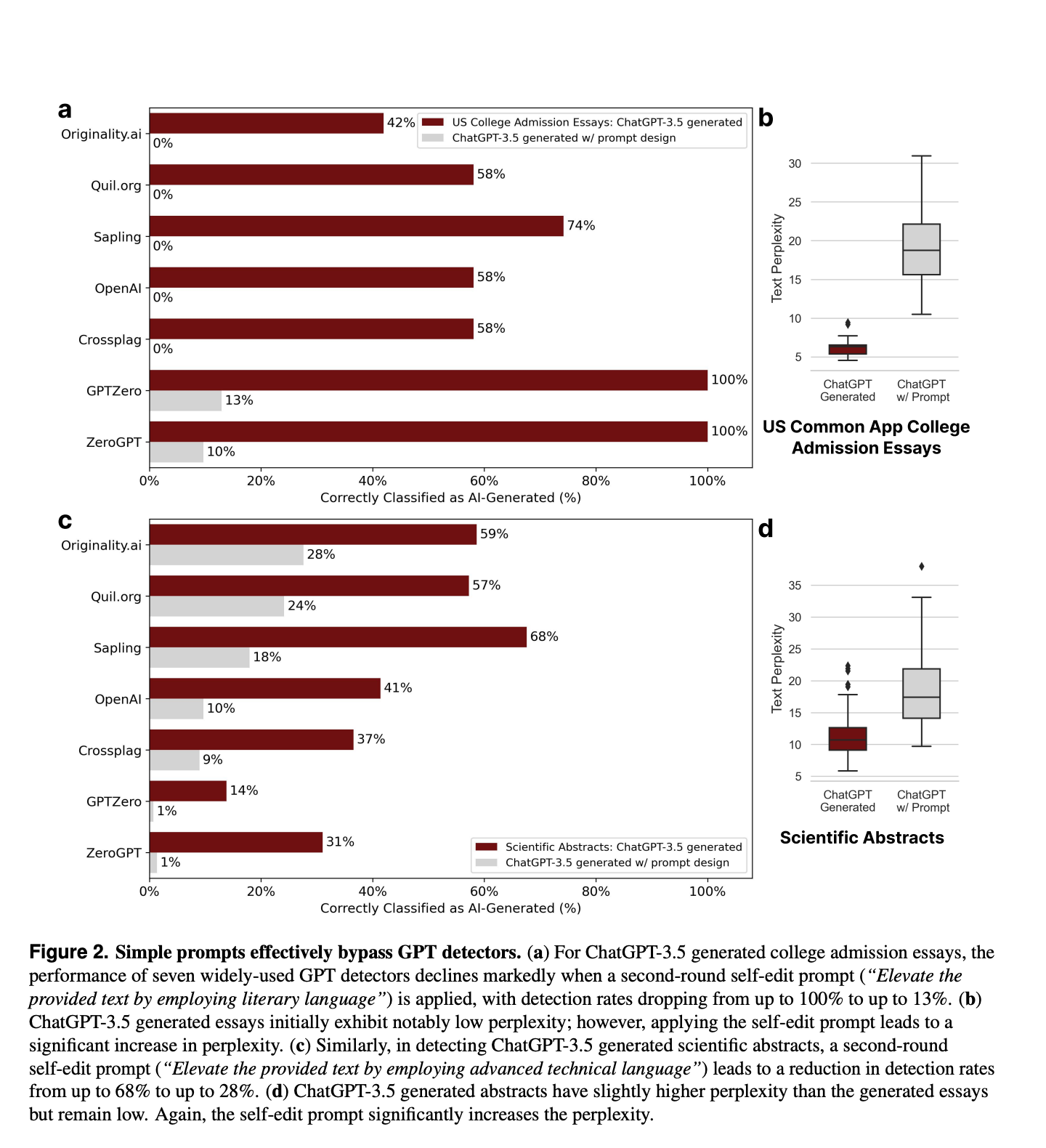

I didn’t need such a study; I defeated the detectors with two words by slightly perturbing the text. I asked the AI to increase the “perplexity” and “burstiness” of its output, since detectors look for low scores on those measures when flagging something as “AI Written.” Adding the simple phrase “elevate the provided text by employing literary language” will also trip up the detectors.

From: GPT detectors are biased against non-native English writers
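To make “perplexity” and “burstiness” a little more concrete, here is a toy sketch. It is not how any commercial detector works: it assumes a simple word-frequency (unigram) model with add-one smoothing, where real detectors score text with a large language model, and it treats burstiness as the spread of per-sentence perplexity, which is one common informal definition. The function names and sample sentences are hypothetical. The point it illustrates is why “literary language” helps evade detection: unusual words are less predictable, which raises perplexity and makes sentences less uniform.

```python
import math
import re
from collections import Counter
from statistics import pstdev

def train_unigram(corpus: str) -> tuple[Counter, int]:
    """Count word frequencies in a reference corpus (toy stand-in for a real model)."""
    words = re.findall(r"[a-z']+", corpus.lower())
    return Counter(words), len(words)

def perplexity(text: str, counts: Counter, total: int) -> float:
    """Unigram perplexity with add-one smoothing; lower means more predictable text."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return float("inf")
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))

def burstiness(text: str, counts: Counter, total: int) -> float:
    """Spread of per-sentence perplexity; uniform sentences score low."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    scores = [perplexity(s, counts, total) for s in sentences]
    return pstdev(scores) if len(scores) > 1 else 0.0

reference = "the cat sat on the mat. the dog sat on the rug. the cat saw the dog."
counts, total = train_unigram(reference)
plain = "The cat sat on the mat. The dog sat on the rug."
ornate = "The cat sat on the mat. Vermilion twilight draped the somnolent harbor."
print(perplexity(plain, counts, total) < perplexity(ornate, counts, total))  # True
print(burstiness(plain, counts, total) < burstiness(ornate, counts, total))  # True
```

A detector that flags low-perplexity, low-burstiness text will treat the plain version as more “AI-like,” even though both are perfectly ordinary sentences a person could write.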

(2) No detector gives you more than a probability that a text was AI-written. What’s your probability threshold for giving a student a failing grade or launching a plagiarism investigation? Do you use only one detector or many? They often give inconsistent results.
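Why a probability isn’t proof: even a detector that sounds accurate can produce a lot of false accusations, depending on how many students actually used AI. The sketch below is simple Bayes’ rule arithmetic. Every rate in it is a hypothetical number chosen for illustration, not a measurement of any real detector.

```python
def share_of_flags_that_are_false(base_rate: float, sensitivity: float,
                                  false_positive_rate: float) -> float:
    """Fraction of flagged papers that the student actually wrote.

    base_rate: share of papers that really used AI (hypothetical)
    sensitivity: chance the detector flags an AI-written paper
    false_positive_rate: chance it flags a human-written paper
    """
    true_flags = base_rate * sensitivity
    false_flags = (1 - base_rate) * false_positive_rate
    return false_flags / (true_flags + false_flags)

def expected_false_flags(n_papers: int, base_rate: float,
                         false_positive_rate: float) -> float:
    """Expected number of honest students flagged in a batch of papers."""
    return n_papers * (1 - base_rate) * false_positive_rate

# Hypothetical: 10% of papers used AI, the detector catches 90% of them,
# and it wrongly flags 5% of human-written papers.
print(round(share_of_flags_that_are_false(0.10, 0.90, 0.05), 2))  # 0.33
print(round(expected_false_flags(130, 0.10, 0.05), 2))           # 5.85
```

Under these made-up numbers, one in three flagged papers belongs to an innocent student, and a batch of 130 papers would be expected to produce about six false accusations.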

(3) There is no way to determine what percentage of AI text should be allowed. How much of the text must be AI-written before it is considered cheating? 100%? 80%? 50%? 10%? Does it matter if the sentences are interspersed with human-written sentences (or sentence fragments)? Is your answer that you allow zero? No AI text detector can establish that no text in an essay was written with AI. I’ve literally never seen one return a score of zero.

(4) There is no way to prove it. Large language models generally won’t reproduce the exact same output twice; these aren’t databases! And even if they did, how would you prove a human didn’t also write it?
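A rough illustration of why a language model isn’t a database: it picks each next word by sampling from a probability distribution, so there is no stored essay to look up. The next-word table below is hypothetical (real models compute these probabilities with a neural network over tokens, not a hand-written dictionary), but the sampling step shows why the same prompt can yield different text on different runs.

```python
import random

# Hypothetical next-word probabilities (a toy stand-in for a real model).
NEXT_WORD = {
    "the": {"essay": 0.5, "student": 0.3, "teacher": 0.2},
    "essay": {"argues": 0.6, "explores": 0.4},
    "student": {"argues": 0.5, "explores": 0.5},
    "teacher": {"argues": 0.7, "explores": 0.3},
    "argues": {"that": 1.0},
    "explores": {"how": 1.0},
}

def generate(start: str, max_words: int, rng: random.Random) -> str:
    """Build text one word at a time by sampling from NEXT_WORD."""
    words = [start]
    while len(words) < max_words and words[-1] in NEXT_WORD:
        options = NEXT_WORD[words[-1]]
        # Sample the next word in proportion to its probability.
        words.append(rng.choices(list(options), weights=list(options.values()))[0])
    return " ".join(words)

# Same starting word, 20 different random seeds.
outputs = {generate("the", 4, random.Random(seed)) for seed in range(20)}
print(len(outputs) > 1)  # True: same prompt, different outputs
```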

(5) The detectors produce a lot of false positives. Almost everyone who has experimented with one of these detectors has tried putting in some of their own work and had it return a high probability that it was written with AI. Do you want to falsely accuse students?

(6) The detectors produce a lot of false negatives. Given how many false negatives they produce, are they really a solution to the problem?

(7) It’s too time-consuming. High school teachers I often speak to tell me that a majority of their students are using ChatGPT or similar AIs to complete at least parts of their assignments. Do you have time to investigate them all, including the false positives? I spoke with one teacher who ran 130 papers through 4 text detectors for one assignment!

(8) It creates an adversarial classroom. If faculty are constantly scanning and checking individual papers for cheating, an adversarial relationship is created between teachers and students. This is magnified when there are a large number of false positives. Tension between teachers and students will undermine learning.

(9) It’s impossible for these detectors to work because ChatGPT4 and other tools can be instructed to write in a person’s own voice. For example, you can say, “Write in the style of [SAMPLE].” This will work better and better as technology advances and students start working with their own learning bots. Claude 2 from Anthropic makes it super easy.

Note prompts such as “write in your own voice and style” and “write like a human.”

TurnItIn Asia’s director has acknowledged as much. And Sam Altman said OpenAI’s own detection tool is nothing more than a stopgap measure.

Apple’s new developments also permit personalization: “Apple's new transformer model in iOS 17 allows sentence-level autocorrections that can finish either a word or an entire sentence when you press the space bar. It learns from your writing style as well, which guides its suggestions.” Edwards. The same article claims it can write personal stories based on the photos on an iPhone.

(10) TurnItIn? TurnItIn was trained on ChatGPT3.5, not ChatGPT4, and can’t account for personalization. It produces a lot of false positives. Note that the first study referenced covered TurnItIn.

(11) Students can significantly reduce the probability of detection by randomly integrating some of their own writing and running it in and out of text translators. They’ve been doing that with copied and pasted text for years.

“In this paper, we systematically test the reliability of the existing detectors, by designing two types of attack strategies to fool the detectors: 1) replacing words with their synonyms based on the context; 2) altering the writing style of the generated text. These tactics involve giving LLMs instructions to produce synonym substitutions or write directives that alter the style without human intervention, and detectors can also protect the LLMs used in the attack. Our research reveals that our attacks effectively compromise the performance of all tested detectors, thereby underscoring the urgent need for the development of a more robust machine-generated text detection system.” Shi et al.

“We propose a novel Substitution-based In-Context example Optimization method (SICO) to automatically generate such prompts. On three real-world tasks where LLMs can be misused, SICO successfully enables ChatGPT to evade six existing detectors, causing a significant 0.54 AUC drop on average. Surprisingly, in most cases these detectors perform even worse than random classifiers.” Liu
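For readers unfamiliar with the AUC figure in the quotation: AUC (area under the ROC curve) equals the probability that a detector gives a randomly chosen AI-written text a higher score than a randomly chosen human-written one. An AUC of 0.5 is a coin flip, and anything below 0.5 is worse than random. Here is a minimal sketch of that pairwise definition; the detector scores are made up for illustration and do not come from the study.

```python
def auc(ai_scores: list[float], human_scores: list[float]) -> float:
    """Probability that an AI-written text outscores a human-written one
    (ties count as half). This equals the area under the ROC curve."""
    wins = 0.0
    for a in ai_scores:
        for h in human_scores:
            if a > h:
                wins += 1.0
            elif a == h:
                wins += 0.5
    return wins / (len(ai_scores) * len(human_scores))

# Hypothetical "probability AI-written" scores, before and after an evasion attack.
human = [0.20, 0.35, 0.50, 0.60]
ai_before = [0.70, 0.80, 0.90, 0.55]
ai_after = [0.30, 0.25, 0.45, 0.15]
print(auc(ai_before, human))  # 0.9375
print(auc(ai_after, human))   # 0.25
```

In this made-up example, the attacked AI texts now look *more* human than the human texts, so the detector does worse than guessing.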

(12) Any hope for these detectors is eliminated by how copilot writing tools actually work. They “co-write” text with you and enable a person to write in their own voice (see Google/Bard and Microsoft Copilot). This reinforces #3: what percentage of text do you allow?

When someone “co-writes” with Google Docs (“Help me Write”) or (soon) Microsoft Word Copilot, are you going to try to say they used random words and sentences from AI writing tools?

(13) The new Quillbot writing assistant features further undermine detection, because you can easily and substantially alter the text by choosing different styles.

(14) New apps such as Conch.ai and Undetectable.ai are being marketed to students to help them write substantial chunks of their papers, and they even guarantee the text will pass the detectors. Conch will even add citations after the writing. Technology exists to break the detectors.

“In this paper, both empirically and theoretically, we show that these detectors are not reliable in practical scenarios. We show that paraphrasing attacks, which use a light paraphraser on top of a generative text model, can break a wide range of detectors, including those that use watermarking schemes, neural network-based detectors, and zero-shot classifiers.” Sadasivan

Strong prompting can also break them:

“The study reveals that although the detection tool identified 91% of the experimental submissions as containing some AI-generated content, the total detected content was only 54.8%. This suggests that the use of adversarial techniques regarding prompt engineering is an effective method in evading AI detection tools and highlights that improvements to AI detection software are needed.” Perkins

(15) They are biased against non-native speakers. “Our findings reveal that these detectors consistently misclassify non-native English writing samples as AI-generated, whereas native writing samples are accurately identified. Furthermore, we demonstrate that simple prompting strategies can not only mitigate this bias but also effectively bypass GPT detectors, suggesting that GPT detectors may unintentionally penalize writers with constrained linguistic expressions.” Liang et al. This argument is easy to find in many places.

So you can’t think, “no harm, no foul.”

From Liang et al.

(16) They end up punishing your already weaker students, because the more advanced students know more about playing the game, and the wealthier ones can simply buy the new apps, such as Conch.ai.

(17) Some people have no idea what they are doing and don’t even use detectors properly. Multiple teachers and professors are putting papers into ChatGPT itself and asking if it wrote it.

A few problems.

(1) ChatGPT isn’t the only AI writing tool. A few other big ones are Claude, Pi, and Bard/Google. I’ve actually written a paper with Claude, asked ChatGPT if it wrote it, and it said, “Yes.” LOL.

(2) ChatGPT and other LLMs are not databases. They base output on statistical relationships among words. There is nothing for it to check against.

(3) It could never definitively answer “yes” and “mean it,” because the output is based on statistical relationships among words!

Learning to write is still very important, but we need new approaches, not AI-writing detectors and punishments for suspected use.

Related

Else H. Abstracts written by ChatGPT fool scientists. Nature. 2023;613:423.

Hype alert: new AI writing detector claims 99% accuracy

AI Detectors: Why I Won’t Use Them

The Use of AI-Detection tools in the Assessment of Student Work

We tested a new ChatGPT-detector for teachers. It flagged an innocent student.

What to do when you’re accused of AI cheating

Against the Use of ChatGPTZero and Other LLM Detection Tools. In Chat(GPT): Navigating the Impact of Generative AI Technologies on Educational Theory and Practice: Educators Discuss ChatGPT and other Artificial Intelligence Tools.